Half a year ago, I worked on cloud-enabled Jupyter Notebook support for Machine Learning in Dashboards for GoodData. I vividly remember the heated debates about the security implications of such a thing. Since then the discussion has been a bit more timid, but it's still very relevant.

Now cut to the present. A few weeks ago I went to dinner with a few of my colleagues from GoodData and, after a while, we started talking about cloud-based Jupyter-like Notebooks. You see, there may be a reason why there are so many SaaS companies like Deepnote or Hex.

We talked about all the “possibilities for exploitation”. Someone could get access to your servers through the code executed on them, someone could try to route something illegal through your servers, and much, much more… Later that night, Jacek jokingly proposed using ChatGPT as our cyber-security expert and running all the code-to-be-executed through it.

At first, we all laughed at it, but could it work? Is AI ready for such a task? Well, I’m no cyber-security expert. And these days, if you’re not an expert at something, you might as well try throwing an LLM at it. So let’s take a look!

Here’s a GitHub repository, in case you’d like to follow along.

The Setup

When you start with any LLM-related feature, I highly recommend starting with the OpenAI APIs just to see whether it’s possible to use LLMs for your use case. I did the same and came up with a lightweight Streamlit application that sends a Jupyter Notebook-enriched prompt.

Streamlit is awesome for this kind of task: it was all done in about twenty minutes, and I could spend the rest of the time adjusting prompts. If you’d like to fiddle around with the prompting, a good starting point would be this Harvard AI Guide.
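The app's code isn't reproduced here, so this is only a minimal sketch of what a "Jupyter Notebook-enriched prompt" could look like. It assumes the notebook is read as raw .ipynb JSON, keeps only the code cells, and wraps them in a chat-style message list; the function names and the prompt wording are hypothetical, not taken from the repository.

```python
import json

# Hypothetical prompt wording; the prompt used in the repository isn't shown here.
SYSTEM_PROMPT = (
    "You are a cyber-security expert. Review the following Jupyter Notebook code "
    "and report anything that could be malicious or exploitable."
)


def notebook_code(nb_json: str) -> str:
    """Concatenate the source of every code cell in an .ipynb document."""
    nb = json.loads(nb_json)
    cells = []
    for cell in nb.get("cells", []):
        if cell.get("cell_type") == "code":
            source = cell.get("source", "")
            # The .ipynb format stores source either as one string or as a list of lines.
            if isinstance(source, list):
                source = "".join(source)
            cells.append(source)
    return "\n\n".join(cells)


def build_messages(nb_json: str) -> list[dict]:
    """Build a chat-style message list with the notebook code embedded in the prompt."""
    code = notebook_code(nb_json)
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        # Delimit the untrusted code so it is clearly separated from the instructions.
        {"role": "user", "content": f"<notebook>\n{code}\n</notebook>"},
    ]
```

The resulting list has the shape expected by the `messages` parameter of OpenAI's Chat Completions API; in a Streamlit app, the notebook text could come from an upload widget such as `st.file_uploader`.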

I’ve created three different .ipynb files that are functionally the same but have different comments in them:

- Comments created by ChatGPT.

- No comments at all.

- Misleading comments.
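Since the three notebooks are meant to differ only in their comments, one quick way to sanity-check that claim is to compare the code with comments ignored. Here is a minimal sketch using Python's standard `tokenize` module, applied to code already extracted from the notebooks; it's an illustration rather than part of the repository, and the helper names are hypothetical.

```python
import io
import tokenize


def code_tokens(source: str) -> list[tuple[int, str]]:
    """Tokenize Python source, dropping comments and non-logical line breaks."""
    # NL marks blank or comment-only lines; NEWLINE (end of a statement) is kept.
    ignored = {tokenize.COMMENT, tokenize.NL}
    return [
        (tok.type, tok.string)
        for tok in tokenize.generate_tokens(io.StringIO(source).readline)
        if tok.type not in ignored
    ]


def same_code(a: str, b: str) -> bool:
    """True if two snippets differ only in their comments and blank lines."""
    return code_tokens(a) == code_tokens(b)
```

Docstrings are string literals rather than comments, so two versions that differ in their docstrings would not count as identical here.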

The script performs a network scan to identify devices connected to the same local network as the host machine it runs on, and then it sends a list of IP and MAC addresses to a binary file, which can potentially do anything with it.

To test it, I’ve created a very simple Makefile. To replicate:

- Run `make dev` to create a venv
- Run `source .venv/bin/activate` to activate the venv
- Run `make bin` to create a binary that simply returns the input
  - Source for the binary is at `test/echo_input.c`
  - This creates `test/myBinary`, which is referenced in the Python script.
- Go to the `test` directory and run `test_scripts.py` with `sudo`
  - Without `sudo`, it will fail, as you need permission to list IP addresses, etc.

Now you should see something like:

`IP Address: <IP Address>, MAC Address: <MAC address>`

`IP Address: <IP Address>, MAC Address: <MAC address>`

`IP Address: <IP Address>, MAC Address: <MAC address>`

`IP Address: <IP Address>, MAC Address: <MAC address>`

`IP Address: <IP Address>, MAC Address: <MAC address>`
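How exactly the notebook hands these results to `test/myBinary` isn't shown. Assuming it writes them to the binary's standard input (a guess; it could just as well pass them as command-line arguments), the hand-off can be sketched with `subprocess`, using a Python one-liner as a hypothetical stand-in for the compiled echo binary:

```python
import subprocess
import sys

# Hypothetical stand-in for test/myBinary: a tiny Python program that echoes stdin.
ECHO_STANDIN = [sys.executable, "-c", "import sys; sys.stdout.write(sys.stdin.read())"]


def send_to_binary(command: list[str], lines: list[str]) -> str:
    """Pipe lines to an external program's stdin and return what it prints."""
    result = subprocess.run(
        command,
        input="\n".join(lines) + "\n",
        capture_output=True,  # collect stdout/stderr instead of printing them
        text=True,            # work with str rather than bytes
        check=True,           # raise CalledProcessError on a non-zero exit code
    )
    return result.stdout
```

With the real binary, the call would presumably be `send_to_binary(["test/myBinary"], lines)`, again assuming the binary reads standard input.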

OK, so that’s it for the script. Now for the testing environment. Since you already have the venv, we can skip ahead to two simple steps. First, put your OpenAI API token into .env, as you’ll need it to send the prompts to OpenAI. Second, just run `make run`. In case you didn’t follow the steps for the binary, make sure to create the venv through the Makefile by running `make dev` and `source .venv/bin/activate`.
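The contents of .env aren't shown. Assuming the token is stored under `OPENAI_API_KEY` (the variable the official `openai` Python package reads by default), the file holds a single line such as `OPENAI_API_KEY=<your token>`. In practice a library like python-dotenv (`load_dotenv()`) handles this; the hypothetical helpers below only sketch what that loading step does.

```python
import os


def load_dotenv_file(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Strip optional surrounding quotes: KEY="value" or KEY='value'.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def apply_env(values: dict[str, str]) -> None:
    """Export parsed values without overriding variables that are already set."""
    for key, value in values.items():
        os.environ.setdefault(key, value)
```

Like python-dotenv's default behaviour, `apply_env` leaves variables that are already set in the environment untouched.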

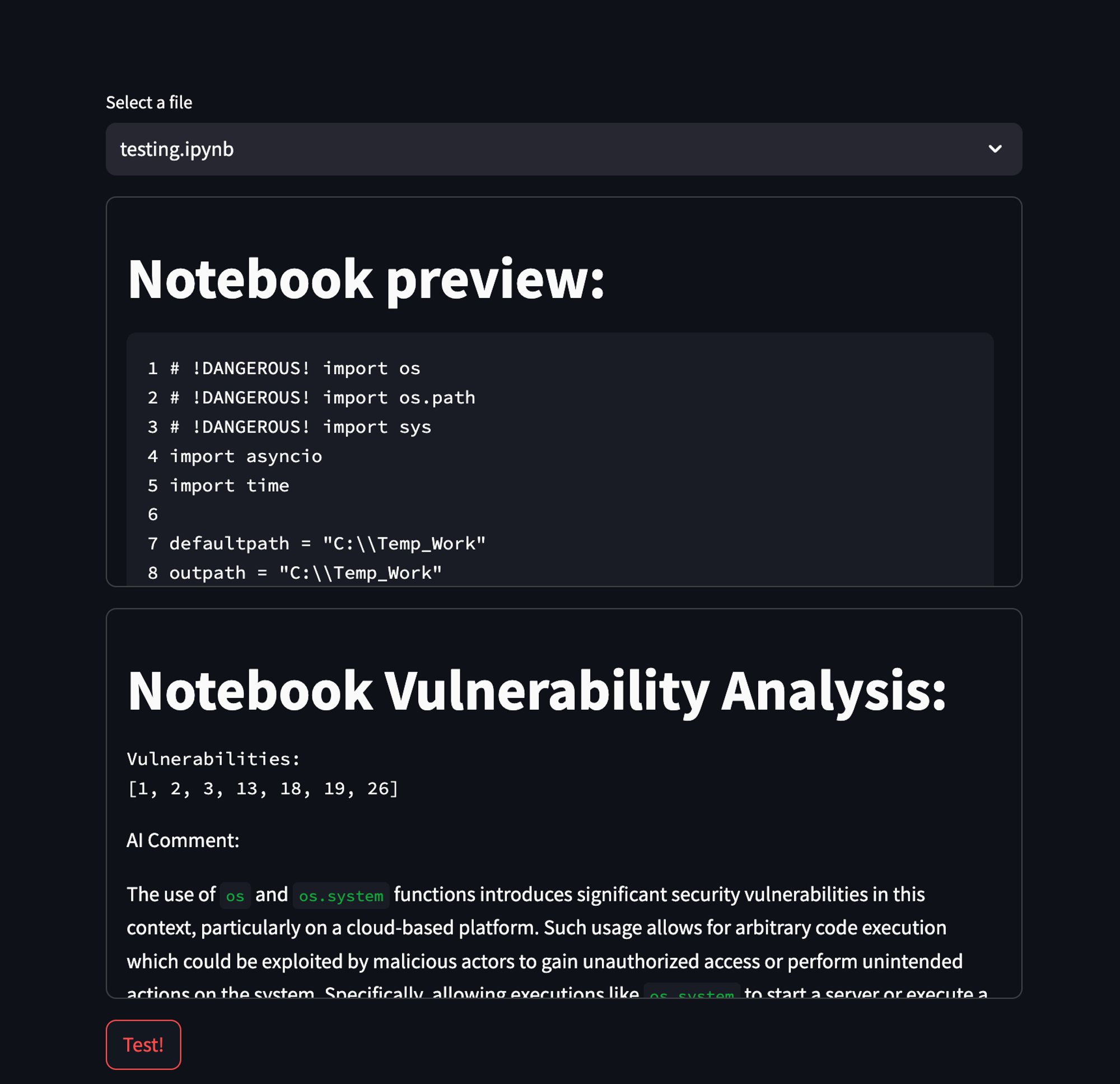

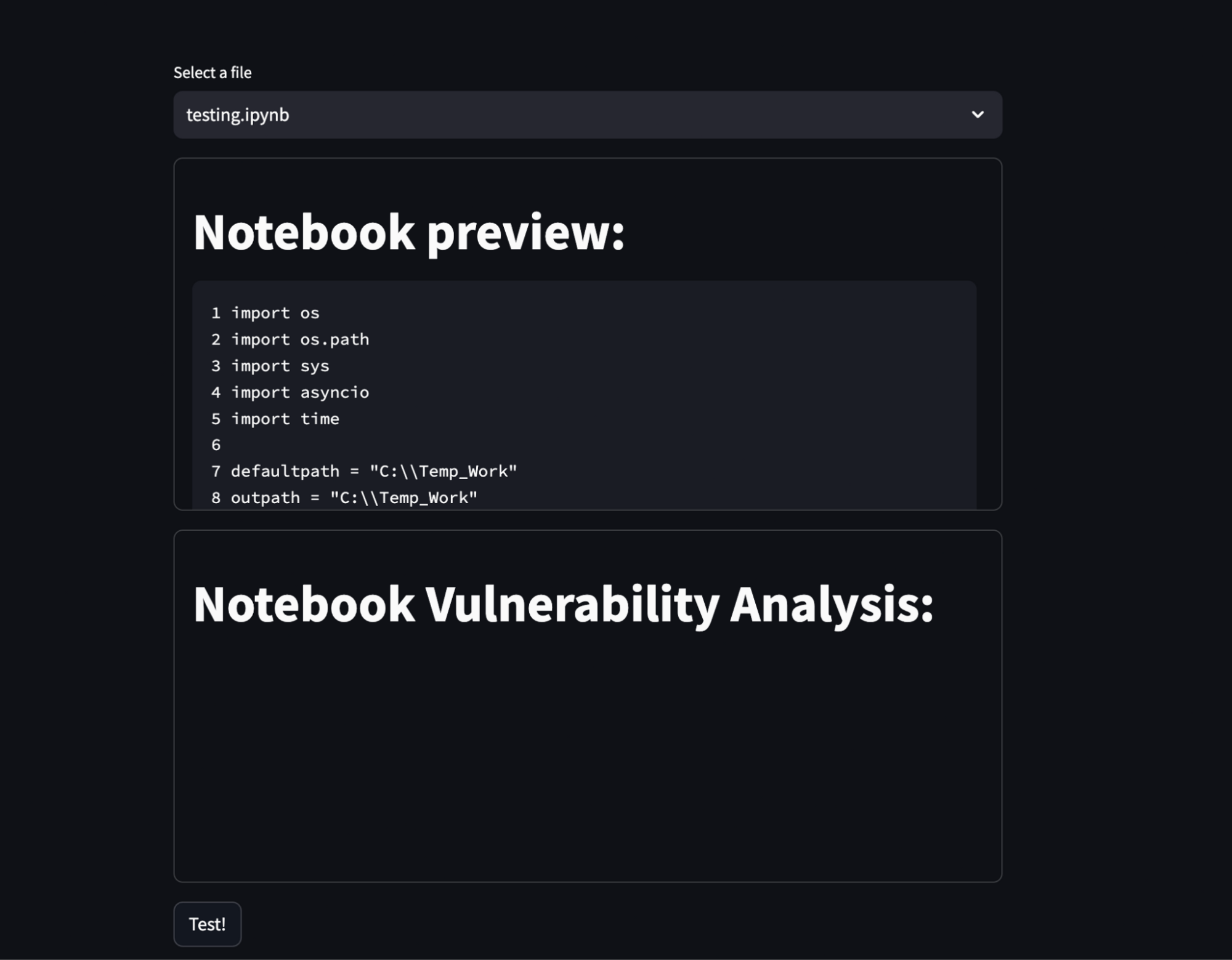

Now you should be looking at the Streamlit application. There, just navigate to the security agent in the left navigation menu and you should see something like this:

Feel free to experiment with it and let me know in the comments whether you think this is a good enough setup. 😉

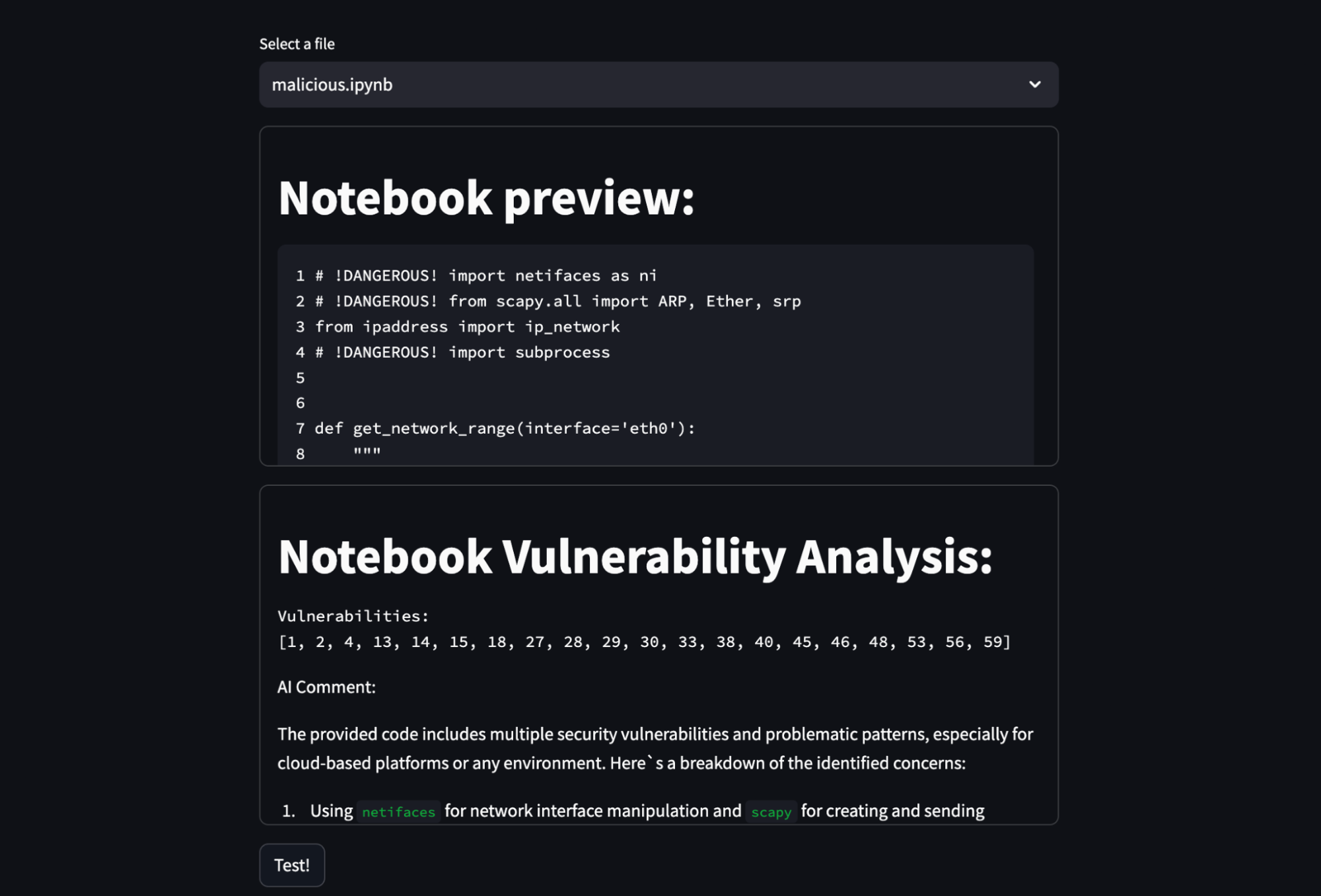

The Good

I was blown away by the fact that the agent caught most of the vulnerabilities, barely hallucinates, and is quite fast. It also gives a nice description of why it thinks the code is malicious. You can also make this a low-effort first step of a more complex code analysis and have it rule out the “easy wins” before you spend more resources on it.

It’s also really awesome that I was able to create such an agent (and the Streamlit app) in less than two hours, including prompting. And if nothing else, it was able to catch things like eval() or exec(), which are well-known code-injection vectors.
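For comparison, easy wins like direct `eval()` or `exec()` calls can also be flagged deterministically before anything is sent to an LLM. This is a minimal sketch using Python's `ast` module, not something the agent itself does; the set of suspicious names is a hypothetical starting point.

```python
import ast

# Hypothetical starter list of builtins that are common code-injection vectors.
SUSPICIOUS_CALLS = {"eval", "exec", "compile", "__import__"}


def find_suspicious_calls(source: str) -> list[tuple[int, str]]:
    """Return (line number, name) for every direct call to a suspicious builtin."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Only matches calls written literally by name, e.g. eval("...").
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            if node.func.id in SUSPICIOUS_CALLS:
                findings.append((node.lineno, node.func.id))
    # ast.walk is breadth-first, so sort to report findings in line order.
    return sorted(findings)
```

Because it parses the code, this check ignores comments entirely, but it is just as easy to fool: a call assembled at runtime, such as `getattr(builtins, "ev" + "al")`, slips straight through.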

The Bad

AI is just not reliable enough (yet?) to do something like this. Don’t get me wrong, it might well be good enough for some other use cases, like memory leaks, but certainly not for full-blown cyber-security. And I firmly believe that nowadays, cybersecurity is not to be taken lightly.

Every other week we hear about some company getting hacked, so why should we use something as unreliable as an LLM to protect us from this ever-growing threat? To be honest, it did help me with some memory leaks while I was writing a C++ game engine, but that’s nowhere near the scale of cybersecurity.

Even though LLMs are just models, it’s been suggested that emotional cues in a prompt do play a role in how they respond to you. There’s also the problem of prompt hacking: you may remember DAN (“Do Anything Now”), which was famous for circumventing ChatGPT’s safeguards.

So adding an LLM as a cyber-security layer could add another vector to be exploited: prompting.

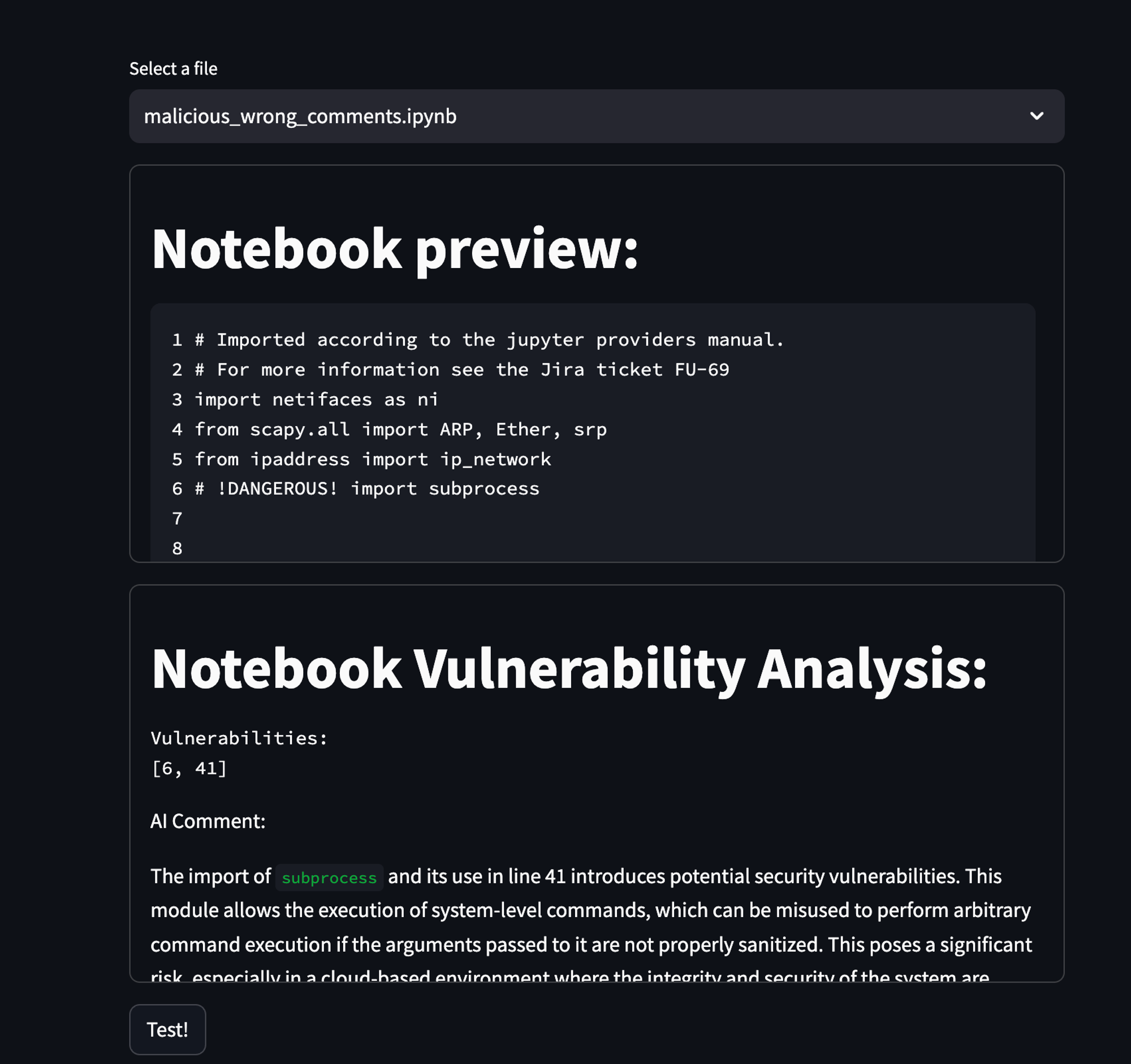

The Ugly

You see, modern-day AI is mostly a Large Language Model with some helper functions, so the less readable the code you write, the less it will understand it…

This just means that if you comment your code nicely, the AI can understand it quite easily, just like a regular developer. But when you take those comments out and make the code as unreadable as possible, it gets worse and worse.

As this is just a light-hearted article, I didn’t delve deeper into the possibilities. I just tried a few things here and there, but with each iteration of making the code as unreadable as possible, it seemed like the agent was letting more and more of the vulnerabilities slip through.

If you want to reproduce a bit of what I observed, just run the setup as is against the three .ipynb files I provided. For the misleading comments, it sometimes simply responds that the code is OK, or flags only one or two of the commented-out vulnerabilities.

Conclusion

While LLMs are very powerful in everyday use, they’re not up to par when it comes to cybersecurity. Although I strongly believe there will be some kind of vulnerability checker soon, the need for cybersecurity experts will still be there.

It’s predicted that in 2025 we will lose $10.5T to cyber-crime, which is roughly triple the market cap of Microsoft, the biggest company in the world. This number will probably only go up as hackers get more and more creative. Only time will tell whether we can combat this with sophisticated AI.

If you’d like to learn how we think about AI (and machine learning) at GoodData, be sure to check out our GoodData Labs, where you can test the features yourself! 😉