We are proud to announce that the GoodData and dbt (Cloud) integration is now production-ready!

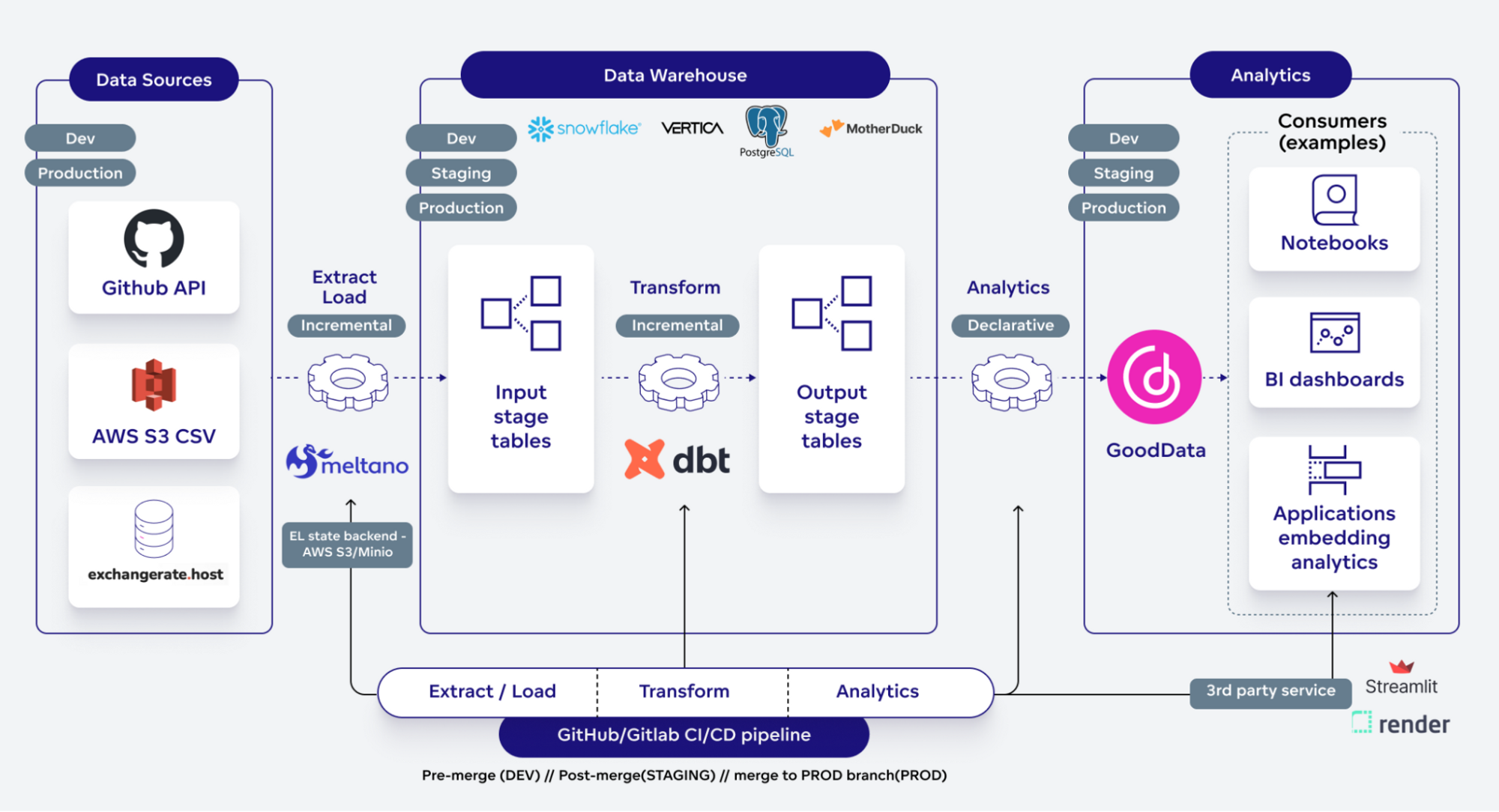

This article demonstrates all of the related capabilities. The integration is part of a data pipeline blueprint, already described in several earlier articles.

We back it up with an open-source repository containing this data stack.

Note: All links to the open-source repo lead to GitHub. Older articles point to the GitLab repository. They are alternatives: the data pipeline source code is the same, but the CI pipeline definition is different.

Let’s be brief, as many of you are likely familiar with dbt.

dbt (never use upper-case!) became a de facto standard for the T (Transform) in ELT because:

- It’s open-source, making it accessible and adaptable for a wide range of users

- dbt Labs built an incredible community

- It’s easy to onboard and use, with good documentation and examples

- Jinja macros decouple models from SQL dialects and generally let you do anything non-SQL

- First-class support for advanced T use cases, e.g., incremental models

Once dbt became a “standard”, dbt Labs expanded its capabilities by introducing metrics. First, they built them in-house, but they weren’t successful. So they deprecated them, acquired Transform, and embedded MetricFlow metrics into dbt models.

Users can now execute metrics via APIs and store the results in a database. Many companies compete in this area, including GoodData. We’ll see if dbt succeeds here.

dbt Labs is now trying to build a SaaS product called dbt Cloud, with some of the features, like metrics, available only in the Cloud version of the product.

dbt Labs also holds the dbt Coalesce conference every year. We attended the last one, and I gave a presentation there, briefly describing what I’ll talk about in this article.

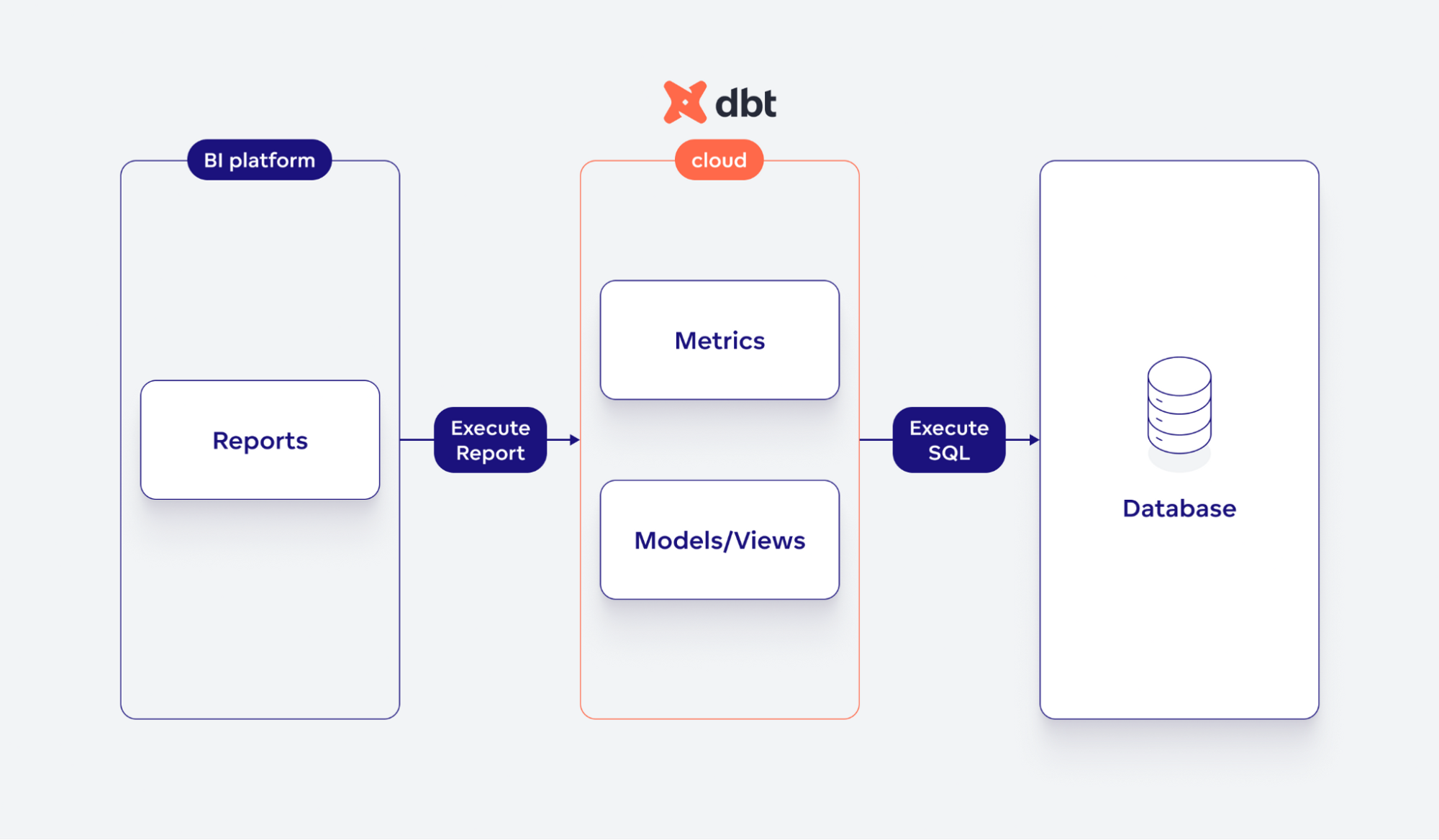

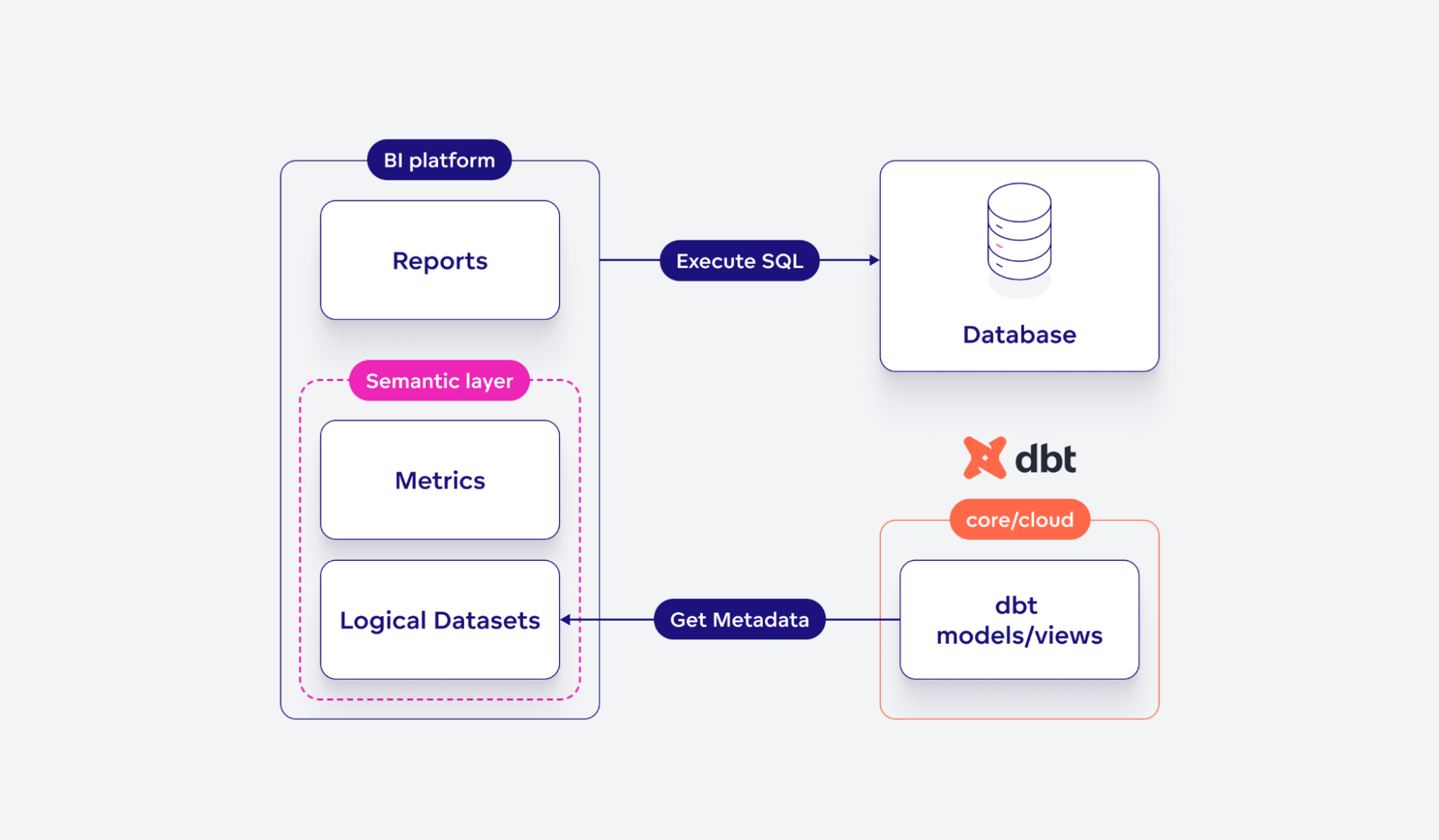

Generally, there are two options for how BI platforms can integrate with dbt:

- Full integration with dbt Cloud, including semantic layer APIs

- Partial integration, generating the BI semantic layer from dbt models

The choice depends on whether a BI platform contains its own semantic layer that provides significant added value compared to the dbt semantic layer. We believe this is the case with GoodData: we have been developing our semantic layer for over ten years, while the dbt semantic layer went GA only a few months ago.

We have also developed a sophisticated caching layer utilizing technologies such as Apache Arrow. I encourage you to read How To Build Analytics With Apache Arrow.

However, the dbt semantic layer can mature over time, and its adoption can grow. We have already met one prospect who insisted on managing metrics in dbt (not because they are better but because of other external dependencies). If more and more prospects approach us this way, we may reconsider this decision. There are two possible next steps:

- Automatically convert dbt metrics to GoodData metrics

- Integrate with dbt Cloud APIs, let dbt generate SQL and manage all executions/caching

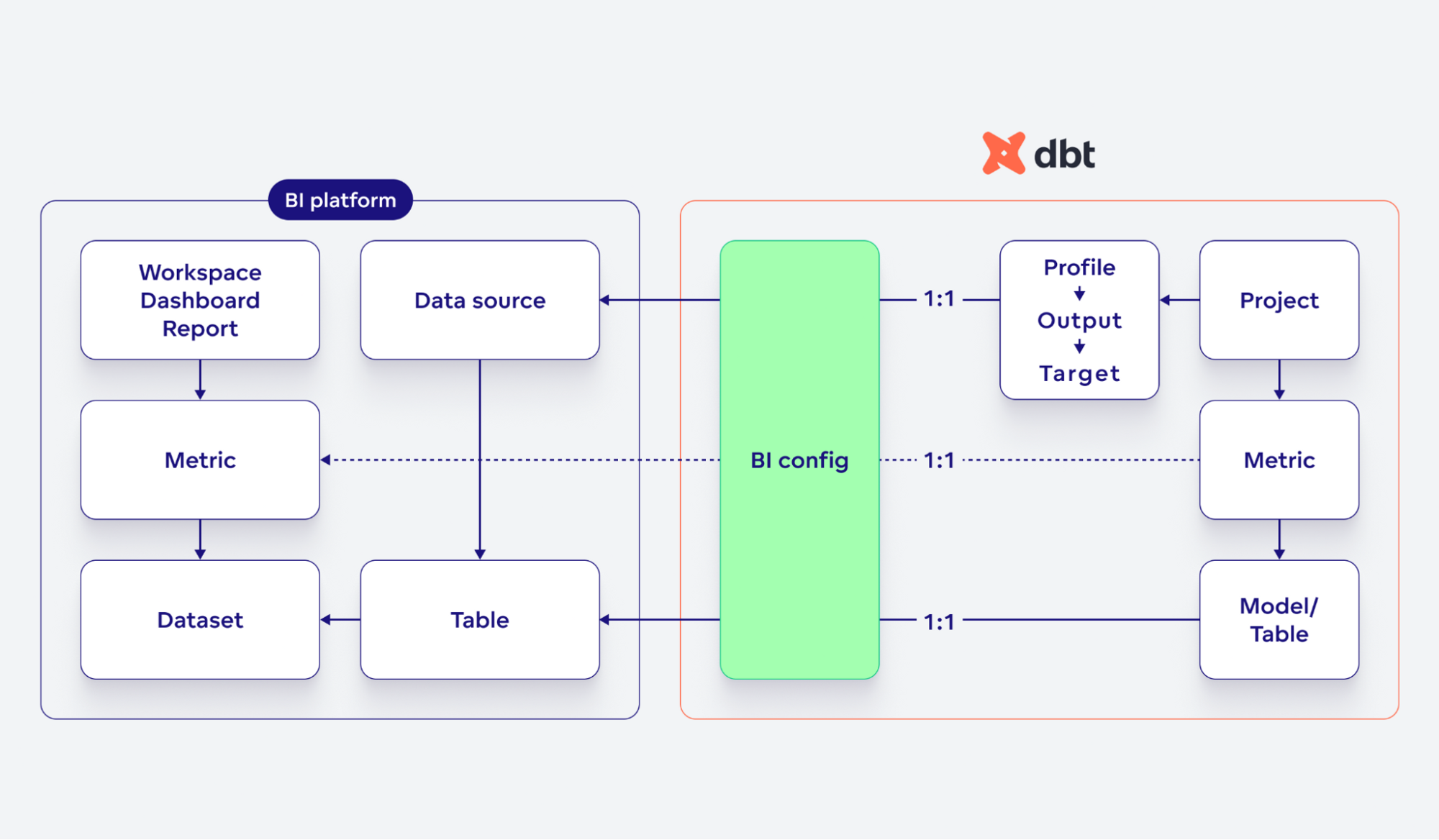

You can compare the architecture for each option:

The semantic layer, as we perceive it, consists of two parts:

- Logical Data Model (LDM – datasets) on top of the physical data model (tables)

- Metrics, aka measures

Why spend time on the LDM? We think extending tables with semantic information is essential to allow end users to be self-service.

Specifically:

- Distinguish attributes, facts, and date (time) dimensions so they are used in the right context

- Decouple analytics objects (metrics, reports, etc.) from tables

- For example, when you move a column to a different table, you don’t have to update analytical objects, only the mapping between the LDM and tables

In this case, we want to generate our LDM (datasets) from dbt models (tables). Users can then create our MAQL metrics conveniently: thanks to the LDM, we can provide more powerful IntelliSense, e.g., we suggest attributes/facts in relevant positions in metrics or suggest filter values.

Moreover, we generate definitions of data sources from the dbt profile file (from targets/outputs), so users don’t have to redundantly specify data source properties (host, port, username, …).

Here is a big picture describing the relationships between dbt and BI entities:

So, what does the integration look like?

We always focus on developers first. We extended our (open-source) Python SDK because Python is a first-class citizen in the dbt world.

We released a new library, gooddata-dbt, based on the gooddata-sdk core library.

How can developers embed the new library into their data pipelines?

We extended the data pipeline blueprint accordingly to demonstrate it. The library now provides the gooddata-dbt CLI, which you can execute in a pipeline. The CLI has all the operations developers need. It accepts various (documented) arguments and a configuration (YAML) file.

Configuration

You can find the example configuration file here. Let’s break it down.

The blueprint recommends delivering into multiple environments:

- DEV – before the merge, supports code reviews

- STAGING – after the merge, supports business testers

- PROD – merge to a dedicated PROD branch, supports business end users

Corresponding example configuration:

    environment_setups:
      - id: default
        environments:
          - id: development
            name: Development
            elt_environment: dev
          - id: staging
            name: Staging
            elt_environment: staging
          - id: production
            name: Production
            elt_environment: prod

It is possible to define multiple sets of environments and link them to various data products.

You then configure so-called data products. Each represents an atomic analytical solution you want to expose to end users. The CLI creates a workspace (an isolated space for end users) for each combination of data product and environment, e.g., Marketing(DEV), Marketing(STAGING), …, Sales(DEV), …

Corresponding example configuration:

    data_products:
      - id: sales
        name: "Sales"
        environment_setup_id: default
        model_ids:
          - salesforce
        localization:
          from_language: en
          to:
            - locale: fr-FR
              language: fr
            - locale: zh-Hans
              language: "chinese (simplified)"
      - id: marketing
        name: "Marketing"
        environment_setup_id: default
        model_ids:
          - hubspot
        # If execution of an insight fails, and you need some time to fix it
        skip_tests:
          - "<insight_id>"

You link each data product with the corresponding environment setup. Model IDs link to the corresponding metadata in dbt models. The localization section allows you to translate workspace metadata into various languages automatically. With the skip_tests option, you can temporarily skip testing broken insights (reports), which gives you (or even us, if there is a bug in GoodData) time to fix them.

Finally, you can define which GoodData organizations (DNS endpoints) you want to deliver the related data products to. This is optional: it is still possible to define the GOODDATA_HOST and GOODDATA_TOKEN env variables and deliver the content to only one organization.

    organizations:
      - gooddata_profile: local
        data_product_ids:
          - sales
      - gooddata_profile: production
        data_product_ids:
          - sales
          - marketing

You can deliver data products to multiple organizations. Each organization can contain a different (sub)set of products. The gooddata_profile property points to a profile defined in the gooddata_profiles.yaml file. You can find more in our official documentation.

Provisioning workspaces

Workspaces are isolated spaces for BI business end users. The CLI provisions a workspace for each data product and environment, e.g., Marketing(STAGING):

gooddata-dbt provision_workspaces
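To make the "one workspace per data product and environment" rule concrete, here is a minimal Python sketch. It is not the gooddata-dbt implementation: the `CONFIG` dictionary is a hand-written stand-in for the parsed YAML configuration, and the workspace ID and title naming scheme is an assumption made purely for illustration.

```python
# Hypothetical in-memory form of the YAML configuration described above.
CONFIG = {
    "environment_setups": [
        {
            "id": "default",
            "environments": [
                {"id": "development", "name": "Development"},
                {"id": "staging", "name": "Staging"},
                {"id": "production", "name": "Production"},
            ],
        }
    ],
    "data_products": [
        {"id": "sales", "name": "Sales", "environment_setup_id": "default"},
        {"id": "marketing", "name": "Marketing", "environment_setup_id": "default"},
    ],
}


def plan_workspaces(config: dict) -> list[tuple[str, str]]:
    """Return (workspace_id, title) for every data product / environment pair."""
    # Index environment setups by ID so each data product can look up its own.
    setups = {s["id"]: s["environments"] for s in config["environment_setups"]}
    workspaces = []
    for data_product in config["data_products"]:
        for env in setups[data_product["environment_setup_id"]]:
            # The "<product>_<environment>" naming is an assumption of this sketch.
            workspace_id = f"{data_product['id']}_{env['id']}"
            title = f"{data_product['name']} ({env['name']})"
            workspaces.append((workspace_id, title))
    return workspaces
```

With two data products and three environments, `plan_workspaces(CONFIG)` yields six workspaces, which is the cross product the CLI is described as provisioning.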

Registering data sources

We register a data source entity in GoodData for each output in the dbt profiles.yml file. It contains all the properties necessary to connect to the corresponding database.

gooddata-dbt register_data_sources $GOODDATA_UPPER_CASE --profile $ELT_ENVIRONMENT --target $DBT_TARGET

If you set GOODDATA_UPPER_CASE to “--gooddata-upper-case”, the plugin expects that the DB object names (tables, columns, …) are exposed upper-cased by the database. Currently, this is the case only for Snowflake.

The --profile and --target arguments point to the profile and the target inside the profile in the dbt profiles.yml file, from which the GoodData data source entity is generated.
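The mapping from a dbt profile output to a data source definition can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the plugin's code: `PROFILES` mimics the parsed structure of a dbt profiles.yml file (profile, then `outputs`, then target), while the resulting dictionary layout, the `<profile>-<target>` ID convention, and the way the upper-case flag is applied to the schema name are all hypothetical.

```python
# Hand-written stand-in for a parsed dbt profiles.yml; all values are made up.
PROFILES = {
    "dev": {
        "target": "snowflake",
        "outputs": {
            "snowflake": {
                "type": "snowflake",
                "account": "my_account",
                "user": "demo_user",
                "database": "demo_db",
                "schema": "analytics",
            }
        },
    }
}


def data_source_from_profile(
    profiles: dict, profile: str, target: str, upper_case: bool = False
) -> dict:
    """Pick one output of a dbt profile and describe it as a data source."""
    output = profiles[profile]["outputs"][target]
    schema = output["schema"]
    return {
        # Hypothetical ID convention, used only in this sketch.
        "id": f"{profile}-{target}",
        "type": output["type"],
        # Assumption: upper-casing is applied to object names such as the schema.
        "schema": schema.upper() if upper_case else schema,
        # Remaining connection properties are passed through unchanged.
        "connection": {
            key: value
            for key, value in output.items()
            if key not in ("type", "schema")
        },
    }
```

Calling `data_source_from_profile(PROFILES, "dev", "snowflake", upper_case=True)` selects the same output that `--profile dev --target snowflake` would point to.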

Generating Logical Data Model (LDM)

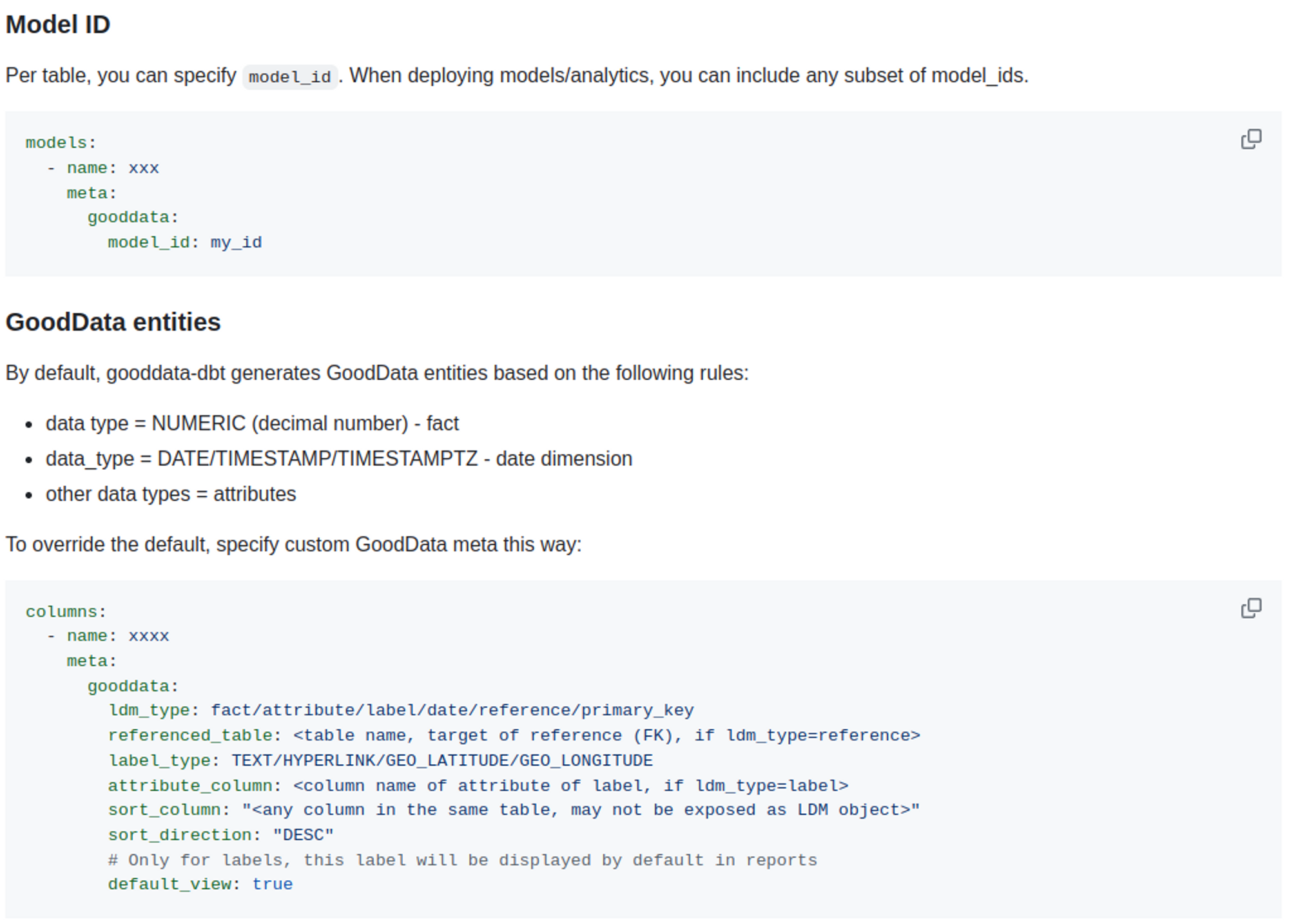

The CLI generates a logical dataset for each dbt model (table). The configuration (see above) defines which tables are included (labeled by a model_id in the meta section). You can improve the accuracy of the generation by setting GoodData-specific metadata in the corresponding dbt model files (documented in the repo).

How to execute it:

gooddata-dbt deploy_ldm $GOODDATA_UPPER_CASE --profile $ELT_ENVIRONMENT --target $DBT_TARGET

The meaning of the arguments is the same as in the previous chapter.
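The selection of dbt models by model_id can be illustrated with a small Python sketch over a manifest-like dictionary. The `nodes`, `resource_type`, `name`, `description`, `meta`, and `columns` fields follow the general shape of dbt's manifest.json; the `gooddata` meta namespace, the `ldm_type` key, and the default of treating unannotated columns as attributes are assumptions made for this example, so check the specification in the repo for the real keys.

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    name: str
    ldm_type: str  # e.g. "attribute" or "fact" in this sketch


@dataclass
class Dataset:
    id: str
    title: str
    columns: list[Column] = field(default_factory=list)


def datasets_from_manifest(manifest: dict, model_ids: list[str]) -> list[Dataset]:
    """Build one logical dataset per dbt model labeled with a wanted model_id."""
    datasets = []
    for node in manifest["nodes"].values():
        if node.get("resource_type") != "model":
            continue  # skip tests, seeds, and other node types
        # "gooddata" / "model_id" are assumed meta keys, not a confirmed schema.
        gd_meta = node.get("meta", {}).get("gooddata", {})
        if gd_meta.get("model_id") not in model_ids:
            continue
        columns = [
            Column(
                name=col["name"],
                # Assumption: columns without a hint default to "attribute".
                ldm_type=col.get("meta", {}).get("gooddata", {}).get("ldm_type", "attribute"),
            )
            for col in node.get("columns", {}).values()
        ]
        title = node.get("description") or node["name"]
        datasets.append(Dataset(id=node["name"], title=title, columns=columns))
    return datasets


# Made-up manifest fragment with two models and one test node.
MANIFEST = {
    "nodes": {
        "model.demo.orders": {
            "resource_type": "model",
            "name": "orders",
            "description": "Orders",
            "meta": {"gooddata": {"model_id": "salesforce"}},
            "columns": {
                "order_id": {"name": "order_id", "meta": {}},
                "amount": {"name": "amount", "meta": {"gooddata": {"ldm_type": "fact"}}},
            },
        },
        "model.demo.contacts": {
            "resource_type": "model",
            "name": "contacts",
            "description": "",
            "meta": {"gooddata": {"model_id": "hubspot"}},
            "columns": {},
        },
        "test.demo.not_null_orders": {"resource_type": "test", "name": "not_null_orders"},
    }
}
```

Here `datasets_from_manifest(MANIFEST, ["salesforce"])` keeps only the `orders` model, mirroring how a data product's model_ids decide which tables end up in its LDM.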

Invalidating GoodData caches

When dbt executions update the tables included in the LDM, GoodData caches are no longer valid. So once you execute the dbt CLI and update the data, you must invalidate the corresponding caches in GoodData.

How to invalidate:

gooddata-dbt upload_notification --profile $ELT_ENVIRONMENT --target $DBT_TARGET

The meaning of the arguments is the same as in the previous chapter.

End-to-end example

We need to:

- Build a custom dbt docker image, including the needed plugins

- Execute dbt models with dbt-core or dbt Cloud (API)

- Provision the related GoodData artifacts (Workspaces, LDM, DataSource definitions)

Here is the source code of the related GitHub workflow:

    on:
      workflow_call:
        inputs:
          # ...

    jobs:
      reusable_transform:
        name: transform
        runs-on: ubuntu-latest
        environment: $
        container: $
        env:
          GOODDATA_PROFILES_FILE: "$"
          # ...
        steps:
          # ... various preparation jobs
          - name: Run Transform
            timeout-minutes: 15
            if: $false
            run: |
              cd $
              dbt run --profiles-dir $ --profile $ --target $ $
              dbt test --profiles-dir $ --profile $ --target $
          - name: Run Transform (dbt Cloud)
            timeout-minutes: 15
            if: $false
            env:
              DBT_ACCOUNT_ID: "$"
              # ...
            run: |
              cd $
              gooddata-dbt dbt_cloud_run $ --profile $ --target $
          - name: Generate and Deploy GoodData models from dbt models
            run: |
              cd $
              gooddata-dbt provision_workspaces
              gooddata-dbt register_data_sources $ --profile $ --target $
              gooddata-dbt deploy_ldm $ --profile $ --target $
              # Invalidates GoodData caches
              gooddata-dbt upload_notification --profile $ --target $

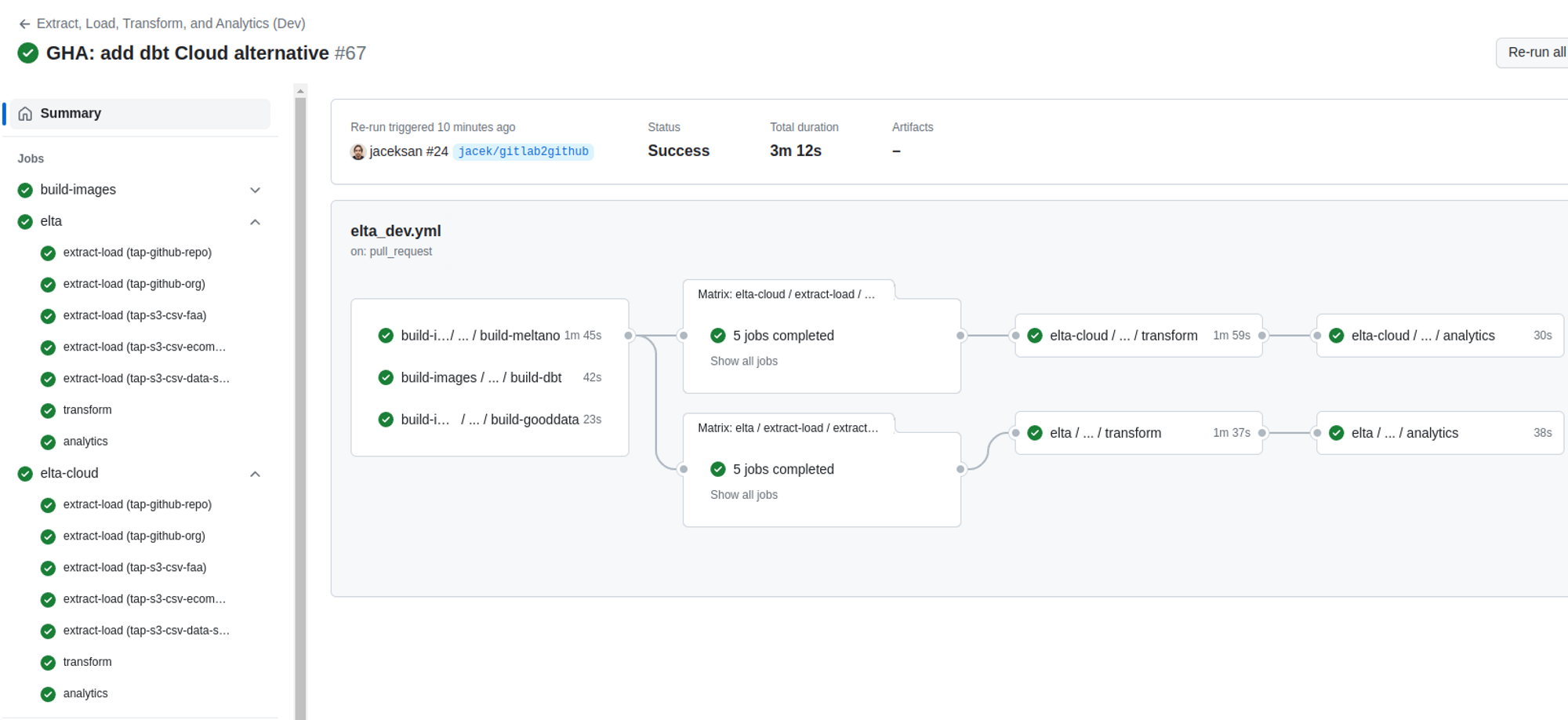

The full source code can be found here. It is a reusable workflow triggered by other workflows for each “context”: environment (dev, staging, prod) and dbt-core/dbt Cloud.
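If you drive the same steps from a Python script instead of a workflow file, the order of the gooddata-dbt calls can be captured as data. The sketch below only assembles argument lists from the subcommands and flags shown in this article; the helper name `gooddata_dbt_commands` is hypothetical, and nothing is executed.

```python
def gooddata_dbt_commands(
    profile: str, target: str, upper_case: bool = False
) -> list[list[str]]:
    """Return the gooddata-dbt invocations in the order the pipeline runs them."""
    selector = ["--profile", profile, "--target", target]
    # Flag described above; the article says it is currently needed for Snowflake.
    upper = ["--gooddata-upper-case"] if upper_case else []
    return [
        ["gooddata-dbt", "provision_workspaces"],
        ["gooddata-dbt", "register_data_sources", *upper, *selector],
        ["gooddata-dbt", "deploy_ldm", *upper, *selector],
        # Runs last: invalidates GoodData caches after the data has changed.
        ["gooddata-dbt", "upload_notification", *selector],
    ]
```

Each returned list could be passed to `subprocess.run(argv, check=True)` so that a failing step stops the pipeline before caches are invalidated.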

Thanks to the reusability of workflows, I can define scheduled executions simply as:

    name: Extract, Load, Transform, and Analytics (Staging Schedule)

    on:
      schedule:
        - cron: "00 4 * * *"

    jobs:
      elta:
        name: ELTA (staging)
        uses: ./.github/workflows/reusable_elta.yml
        with:
          ENVIRONMENT: "staging"
          BRANCH_NAME: "main"
          DEPLOY_ANALYTICS: "false"
        secrets: inherit

As a reminder: dbt models don’t provide all the semantic properties that mature BI platforms need. For example:

- Both a business title and a description for table/column names (only the description is supported)

- Distinguishing between attributes and facts (and so-called labels in the case of GoodData)

- Primary/foreign keys: dbt provides them only via external libs, and it’s not fully transparent

We decided to utilize meta sections in dbt models to allow developers to enter all the semantic properties. The full specification of what is possible can be found here. We are continuously extending it with new features. If you are missing anything, please don’t hesitate to contact us in our Slack community.

The gooddata-dbt library (and the CLI) is based on our Python SDK, which can communicate with all our APIs and provides higher-level functionality.

Regarding the dbt integration, we decided to parse the profiles.yml and manifest.json files. Unfortunately, there is no higher-level “API” exposed by dbt, nothing like our Python SDK. dbt Cloud provides some related APIs, but they don’t expose all the metadata we need (which is stored in the manifest.json file).

First, we read the profiles and the manifest into Python data classes. Then, we generate GoodData DataSource/Dataset data classes, which can be posted to a GoodData backend by Python SDK methods (e.g., create_or_update_data_source).

By the way, the end-to-end data pipeline also delivers analytical objects (metrics, insights/reports, dashboards) in a separate job. The gooddata-dbt CLI provides a distinct method (deploy_analytics) for it. Moreover, it provides a test_insights method that executes all insights and thereby validates that they are consistent from a metadata point of view and are executable. It would be easy to create result fixtures and test that even the results are valid.

We integrate with dbt Cloud APIs as well. The gooddata-dbt plugin can trigger a job in dbt Cloud as an alternative to running dbt-core locally. It collects all the necessary metadata (manifest.json, the equivalent of profiles.yml), so the GoodData SDK can utilize it just as it does when running dbt-core locally.

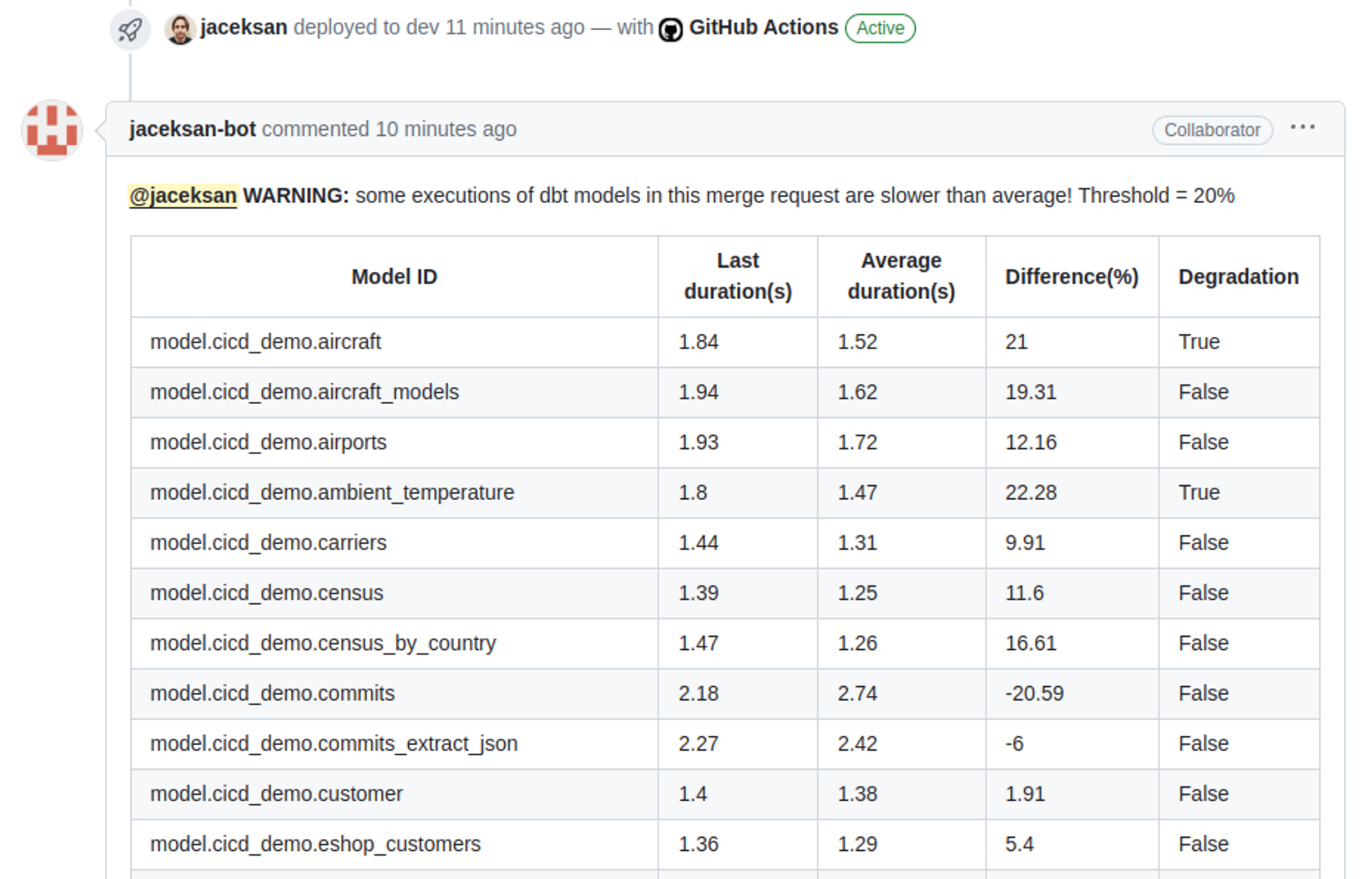

Moreover, we implemented integration with additional dbt Cloud APIs that provide information about the history of executions. We integrated it with a function that can add comments to GitLab or GitHub. The use case here is to notify developers about performance regressions.
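The article does not spell out how a regression is detected, so the following Python sketch shows just one plausible rule: compare the latest run of each model with the median of its previous runs and flag it when it exceeds a threshold. The input format, the 1.5x threshold, and the message wording are all assumptions; the resulting lines are the kind of text a bot could post as a pull request comment.

```python
from statistics import median


def find_regressions(
    history: dict[str, list[float]], threshold: float = 1.5
) -> list[str]:
    """Flag models whose latest duration exceeds threshold x the previous median.

    history maps a model name to its run durations in seconds, oldest first.
    """
    messages = []
    for model, durations in history.items():
        if len(durations) < 2:
            continue  # no earlier runs to compare against
        *previous, latest = durations
        baseline = median(previous)
        if baseline > 0 and latest > threshold * baseline:
            messages.append(f"{model}: {latest:.1f}s vs. median {baseline:.1f}s")
    return messages
```

Using the median rather than the mean keeps a single slow historical run from hiding a later regression; a real implementation would also need to decide how many past executions to consider.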

We extended the already mentioned demo accordingly. As a side effect, there is now an alternative to the GitLab CI pipeline: the GitHub Actions pipeline. Now all steps (extract, load, transform, analytics) run twice against different databases in a Snowflake warehouse, once with dbt-core and once with dbt Cloud:

The notification about performance degradations is posted as a GitHub comment by my alter-ego bot:

Benefits of dbt Cloud:

- You don’t have to run dbt-core in the workers of git vendors

- You don’t have to build a custom dbt docker image with a specific set of plugins

- Metadata, e.g., about executions, can be used for valuable use cases (e.g., the already mentioned notifications about performance regressions)

- dbt Cloud advanced features. I like the recently released dbt Explore feature most: it provides real added value for enterprise solutions consisting of hundreds of models.

Pain points when integrating dbt Cloud:

- You have to define variables twice. For example, DB_NAME is needed not only by dbt but also by the extract/load tool (Meltano, in my case) and BI tools. You are not alone in the data stack!

- No Python SDK. You have to implement wrappers on top of their APIs. They could take inspiration from our Python SDK: provide an OpenAPI spec, generate Python clients, and provide an SDK on top of that 😉

As already mentioned, the possible next steps are:

- Integrate all dbt Cloud APIs, and let dbt generate and execute all analytical queries (and cache results). We are closely watching the maturity and adoption of the dbt semantic layer

- Adopt more relevant GoodData analytical features in the gooddata-dbt plugin

Also, my colleagues are working intensively on an alternative approach to the GoodData Python SDK: GoodData for VS Code. They provide not only an extension but also a CLI. The goal is the same: an as-code approach.

But the developer experience can go one level higher: imagine you could maintain dbt and GoodData code in the same IDE with full IntelliSense and cross-platform dependencies. Imagine you delete a column from a dbt model, and all related metric/report/dashboard definitions become invalid in your IDE! Technically, we are not that far from this dream.

In general, try our free trial.

If you are interested in trying GoodData experimental features, please register for our Labs environment here.