That is a part of a sequence about our FlexQuery and the Longbow engine powering it.

You will have heard about DuckDB, Apache Arrow, or each. On this article, I’ll let you know about how we (GoodData) are the primary analytics (BI) platform powered by the mixture of those applied sciences. I imagine the motivation is obvious – efficiency 🏎️ and developer velocity.

Additionally, to reply the query about DuckDB I discovered on Reddit, sure, we’re utilizing it.

This entire mixture was doable due to the FlexQuery and the Longbow engine behind it. If you want to study extra about them, see the opposite elements of the sequence, just like the FlexQuery introduction, or the structure of the Longbow mission.

A quick introduction to DuckDB

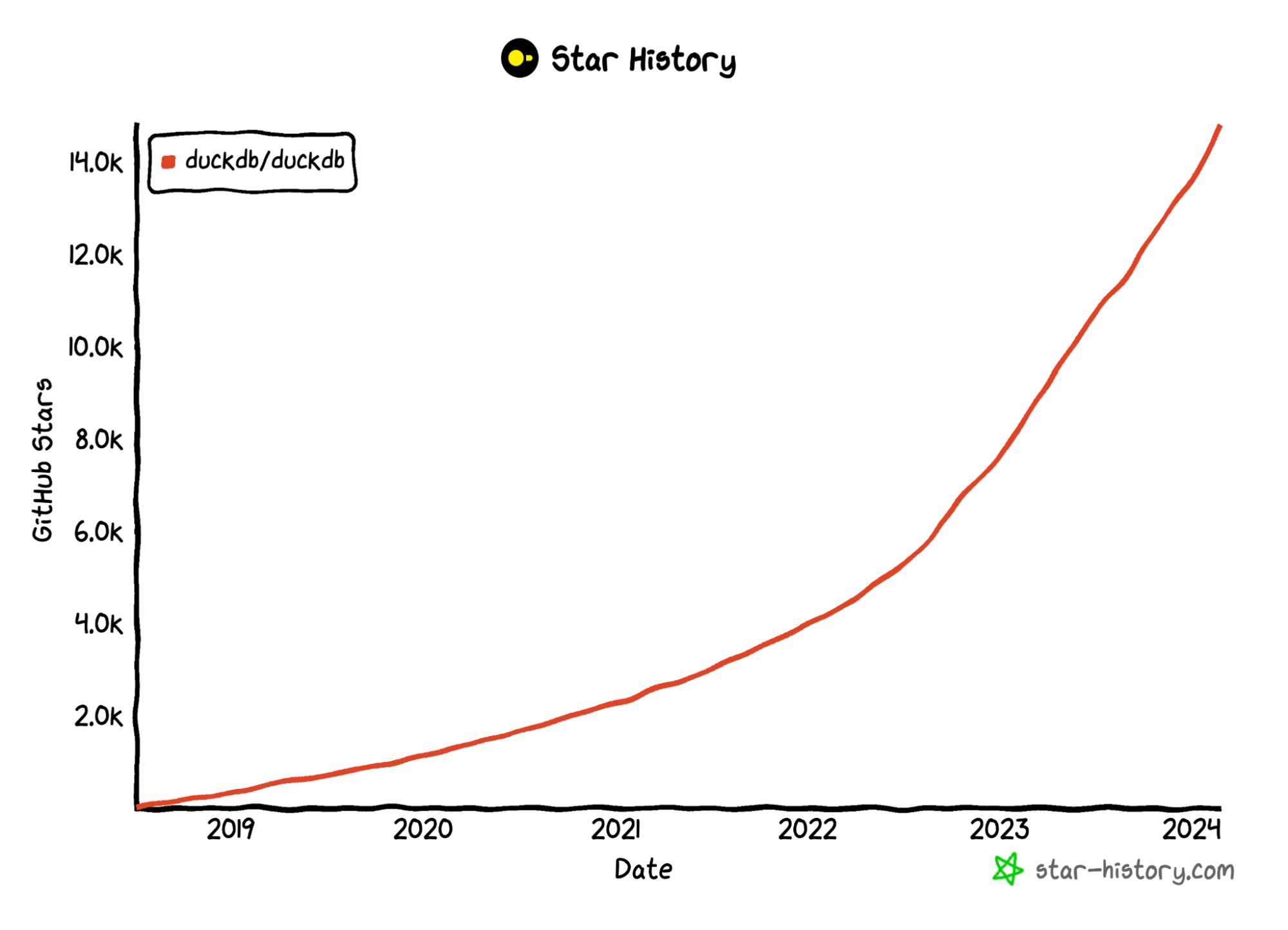

DuckDB is an open-source, in-process analytical database. That has an incredible group, and a product referred to as MotherDuck is constructed on prime of it. It’s quick and feature-rich. As well as, it supplies superior options that assist engineers of their day-to-day routine. Let me offer you a fast instance.

ATTACH 'sqlite:sakila.db' AS sqlite;

ATTACH 'postgres:dbname=postgresscanner' AS postgres;

ATTACH 'mysql:consumer=root database=mysqlscanner' AS mysql;

CREATE TABLE mysql.movie AS FROM sqlite.movie;

CREATE TABLE postgres.actor AS FROM sqlite.actor;

SELECT first_name, last_name

FROM mysql.movie

JOIN sqlite.film_actor ON (movie.film_id = film_actor.film_id)

JOIN postgres.actor ON (actor.actor_id = film_actor.actor_id)

WHERE title = 'ACE GOLDFINGER';One of many newest superior options is attaching a number of databases similar to Postgres or MySQL and querying on prime of them. Hyperlink to the weblog put up presenting the characteristic.

DuckDB additionally has a versatile extension mechanism that permits for dynamic loading extensions. Extensibility, usually, is a superb factor, and it exhibits that the expertise is developer-friendly.

A Transient introduction to Apache Arrow

Apache Arrow is an open-source growth platform for in-memory analytics. It supplies a standardized, language-independent columnar reminiscence format for flat and hierarchical knowledge, organized for environment friendly analytic operations on trendy {hardware}. Knowledge motion relies on the environment friendly FlightRPC protocol. If you would like to study extra about this protocol, you should definitely try our Arrow Flight RPC 101 article.

The success of Apache Arrow will be confirmed by its adoption or the ecosystem constructed round it. Let me offer you a fast instance. Everybody who works with Python and knowledge is aware of the Pandas library. The primary launch of Pandas was in 2008. Since then, Pandas has come a great distance, and in 2024, Apache Arrow will turn into a required dependency of Pandas. One other instance is the Polars library, Pandas different written in Rust, which makes use of Apache Arrow because the backend from the start.

Apache Arrow is undoubtedly an superior expertise. Does it imply every little thing is brilliant and glossy with it? Nicely, not precisely. Although the expertise is superb, the core of Apache Arrow and the training curve will be steep for newcomers. This notion is echoed, for instance, on Reddit. Not way back, I used to be searching Reddit and stumbled upon a put up about PyArrow (Apache Arrow adoption for Python) lacking tutorials and assets, which I can verify, as I’ve skilled this first-hand.

How we make the most of these applied sciences

We make the most of Apache Arrow to implement an analytics lake. Initially, we began with a cache (storage) layer between the info warehouse and underlying knowledge for analytics objects (dashboards, visualizations, and so forth.). Because of the modular structure of our system and Flight RPC protocol, it’s straightforward to construct and deploy knowledge providers. They are often within the type of a module or an operation inside a module. You will discover extra detailed data within the Longbow structure article by Lubomir (lupko) Slivka.

Essentially the most handy knowledge service you would possibly consider is executing SQL instantly on caches. We came upon that DuckDB is one of the best match for this, because it has been appropriate with Apache Arrow since 2021. Once you learn DuckDB documentation, you’ll see that there’s a devoted Arrow extension, though it’s non-compulsory for integration with Apache Arrow. Importantly, DuckDB helps native integration with Apache Arrow.

import duckdb

import pyarrow as pa

my_arrow_table = pa.Desk.from_pydict({'i': [1, 2, 3, 4],

'j': ["one", "two", "three", "four"]})

outcomes = duckdb.sql("SELECT j FROM my_arrow_table").arrow()With DuckDB and Apache Arrow, we will see vital velocity and reminiscence effectivity due to the zero-copy mechanism enabled by integrating these instruments.

Presently, we use a mixture of those applied sciences within the manufacturing. The entire magic is hidden in our method to analytics with CSV recordsdata. First, CSVs are uploaded to sturdy storage like AWS S3, the place we carry out evaluation instantly on prime of those recordsdata. We derive knowledge varieties, and primarily based on them, we resolve whether or not the column represents an attribute, a truth, or a date. Customers can then manually change the info varieties to their liking. After this, our platform treats CSVs as a typical knowledge supply and performs SQL queries utilizing DuckDB. Nonetheless, that is only the start. We plan to make the most of the mixing of DuckDB and Apache Arrow much more. Keep tuned, as extra updates are on their manner.

Future

Wanting forward, there are lots of future steps that may be thought-about. Nonetheless, I’d like to focus on solely two: Pre-aggregations and knowledge federation.

Pre-aggregations

Think about having quite a few caches, every produced by an SQL question. Nonetheless, querying a knowledge warehouse is pricey. So, the thought behind pre-aggregations is that SQL queries are analyzed, and the output of the evaluation, within the best-case situation, is a single SQL question – minimizing direct queries to the info warehouse.

Let’s name this the “mom” question, which shall be used to question a knowledge warehouse – producing the “mom” cache. We will derive the identical outcomes from this cache utilizing DuckDB, for instance, as we might by executing SQL queries individually. Pre-aggregations will be additional optimized by contemplating bodily and utilization stats about caches.

Fairly simple, no? Nicely, not precisely. The arduous half is to create the “mom” question. We already make the most of Apache Calcite, which helps us assemble and analyze SQL queries, and we might use it within the case of pre-aggregations as properly. Or this could possibly be dealt with by DuckDB as an extension. Or maybe AI could possibly be concerned? We plan to discover all of those approaches.

Knowledge Federation

Knowledge federation pertains to pre-aggregations. There are a number of methods to method knowledge federation. One in every of them is, for instance, utilizing the pre-aggregations talked about above. Think about that as an alternative of 1 “mom” question, you can also make a number of of them by pointing to totally different knowledge sources after which working DuckDB. The opposite manner is to make the most of DuckDB’s extensions and connect databases.

Wish to study extra?

As I discussed within the introduction, that is a part of a sequence of articles the place the GoodData dev workforce takes you on a journey of how we constructed our new analytics stack on prime of Apache Arrow and what we discovered about it within the course of.

Different elements of the sequence are concerning the introduction of FlexQuery, particulars concerning the versatile storage and caching, and final however not least, the Longbow Venture itself!

If you wish to see how properly all of it works in apply, you may attempt the GoodData free trial! Or for those who’d wish to attempt our new experimental options enabled by this new method (AI, Machine Studying, and rather more), be at liberty to join our Labs Setting.

In case you’d like to debate our analytics stack (or the rest), be at liberty to affix our Slack group!