How can we apply AI to the products we build? We’ve been asking this question here at GoodData, and we’ve built numerous PoCs over the past year to find the answer. We’ve even held a hackathon with several AI-based projects in the lineup. In this article, I’ll share our family recipe for a simple AI assistant that you can make at home.

LLM (Large Language Model) is probably the most hyped technology at the moment, and for good reason. Sometimes it feels like magic. But, actually, its ingredients have been known for years: text tokenization, text embedding and neural networks. So why is it gaining momentum only now?

Never before has a language model been trained on such a huge amount of data. What’s even more impressive to me is how accessible LLMs have become to folks like you and me.

If you know how to make a REST API call, you can build an AI assistant.

No need to train your own model, no need to deploy it anywhere. It’s super easy to cook up a PoC for your specific software product using the likes of OpenAI. All you need to follow along are some front-end development skills and a little free time.

The main ingredient: LLM

The main ingredient is, of course, the LLM itself. The folks at OpenAI did a great job designing the Chat Completions REST API. It’s simple, stateless, yet very powerful. You can use it with a framework like LangChain, but the raw REST API is more than enough, especially if you want to go serverless.

A serverless chat app is very easy to build, but it’s more of a PoC tool. Exposing your OpenAI token in the browser environment has security implications, so at the very least you’ll have to build an authenticating proxy if you want to use this in production.

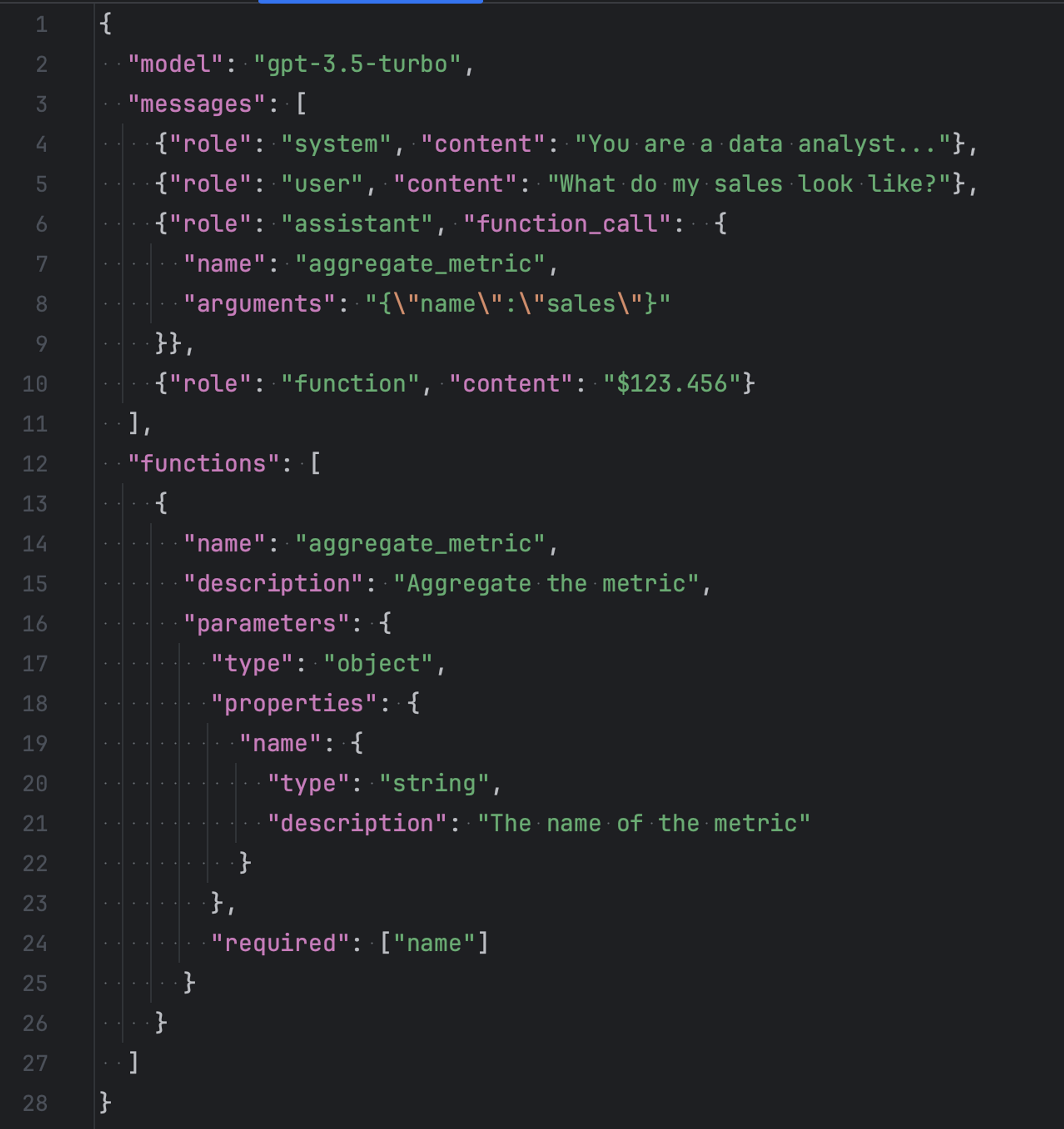
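The authenticating proxy doesn’t have to be big. Here’s a minimal sketch of its core logic, assuming the browser authenticates with its own session token in an `Authorization` header and that `validSessions` stands in for your backend’s (hypothetical) session check. The OpenAI key is read only on the server and attached to the upstream request; the endpoint URL and the `Bearer` scheme are OpenAI’s, while the function and type names are illustrative.

```typescript
// Hypothetical shape of an incoming request from the browser.
// Node lowercases incoming header names, hence "authorization".
interface ClientRequest {
  headers: Record<string, string | undefined>;
  body: string; // JSON-encoded Chat Completions payload built by the frontend
}

type ProxyResult =
  | { ok: false; status: number; error: string }
  | {
      ok: true;
      url: string;
      init: { method: string; headers: Record<string, string>; body: string };
    };

const OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions";

// Checks the caller's session, then builds the upstream request.
// The OpenAI key never leaves the server.
function buildUpstreamRequest(
  req: ClientRequest,
  validSessions: Set<string>, // stand-in for a real session store
  openAiKey: string,
): ProxyResult {
  const auth = req.headers["authorization"] ?? "";
  const session = auth.startsWith("Bearer ") ? auth.slice("Bearer ".length) : "";
  if (!validSessions.has(session)) {
    return { ok: false, status: 401, error: "Unauthenticated" };
  }
  return {
    ok: true,
    url: OPENAI_CHAT_URL,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${openAiKey}`, // attached server-side only
      },
      body: req.body,
    },
  };
}
```

In a real server or serverless function you would pass `result.url` and `result.init` to `fetch` and relay the response back to the browser.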

Let’s take a closer look at the request to the OpenAI Chat Completions API.

First, we need to specify the model to be used for this request. gpt-3.5-turbo is a good default. If you need to work with more complex text and don’t care that much about speed and cost, try gpt-4 instead.

Next, we have the messages field holding the conversation history. This is the main tool you can use to make the assistant your own and not just a generic conversationalist. You can give the conversation context using a system message. For example, in my case I tell the chat about the data analytics workspace the user is currently logged into in GoodData, what kinds of metrics and dimensions are available, and what kinds of questions the assistant should be able to answer.
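A minimal sketch of such a system message, assuming a hypothetical `WorkspaceInfo` object with the workspace title and the names of its metrics and dimensions (in practice these would be read from the workspace the user is logged into). The prompt wording is only an example.

```typescript
// Hypothetical metadata about the workspace the user is logged into.
interface WorkspaceInfo {
  title: string;
  metrics: string[];
  dimensions: string[];
}

// Minimal message shape accepted by the Chat Completions API.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Builds the system message that grounds the conversation in one workspace.
function buildSystemMessage(ws: WorkspaceInfo): ChatMessage {
  const content = [
    `You are a data analytics assistant for the "${ws.title}" workspace.`,
    `Available metrics: ${ws.metrics.join(", ")}.`,
    `Available dimensions: ${ws.dimensions.join(", ")}.`,
    "Answer questions about the values of these metrics, optionally broken down by these dimensions.",
    "If a question cannot be answered with this data, say so instead of guessing.",
  ].join("\n");
  return { role: "system", content };
}

// The system message goes first; the rest is the conversation so far.
const conversation: ChatMessage[] = [
  buildSystemMessage({
    title: "Sales",
    metrics: ["Revenue", "Order count"],
    dimensions: ["Region", "Month"],
  }),
  { role: "user", content: "What was the revenue in Europe?" },
];
```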

Finally, the functions field holds descriptions of functions that the LLM may ask us to run on the user’s behalf. Think of it as a bridge between the generic natural-language capabilities of the LLM and your domain-specific logic. For example, in a data analytics solution we can define an “aggregate_metric” function, which may be called if the user asks the chat about the value of some metric.
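Put together, a request body might look like the sketch below. The `model`, `messages` and `functions` fields (with JSON Schema `parameters`) follow the function-calling version of the Chat Completions API discussed here; the `aggregate_metric` parameters (`metric`, `dimensions`) are hypothetical. Newer versions of the API deprecate `functions` in favor of an equivalent `tools` field.

```typescript
// Message shape used by the function-calling version of the Chat Completions API.
interface ChatMessage {
  role: "system" | "user" | "assistant" | "function";
  content: string | null;
  name?: string;
}

// A function the model may ask us to call, described with JSON Schema.
interface FunctionSpec {
  name: string;
  description: string;
  parameters: Record<string, unknown>;
}

interface ChatCompletionRequest {
  model: string;
  messages: ChatMessage[];
  functions?: FunctionSpec[];
}

// Hypothetical domain function; its parameters are illustrative assumptions.
const aggregateMetricSpec: FunctionSpec = {
  name: "aggregate_metric",
  description: "Calculate the aggregated value of a metric, optionally broken down by dimensions.",
  parameters: {
    type: "object",
    properties: {
      metric: { type: "string", description: "Identifier of the metric to aggregate" },
      dimensions: {
        type: "array",
        items: { type: "string" },
        description: "Dimensions to break the metric down by",
      },
    },
    required: ["metric"],
  },
};

// gpt-3.5-turbo by default; pass "gpt-4" for harder (slower, pricier) requests.
function buildChatRequest(messages: ChatMessage[], model = "gpt-3.5-turbo"): ChatCompletionRequest {
  return { model, messages, functions: [aggregateMetricSpec] };
}

// This JSON string is what the frontend would POST to /v1/chat/completions.
const requestBody = JSON.stringify(
  buildChatRequest([
    { role: "system", content: "You are a data analytics assistant." },
    { role: "user", content: "What is our total revenue?" },
  ]),
);
```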

OpenAI recently released a new Assistants API that looks very promising. I’m not covering it here because it’s still in beta and we don’t really need all of its features for the simple cases described here.

The recipe

The chat application is no more complex than any other frontend app. Here are the ingredients you’ll need:

- A Redux store or another state machine to hold the conversation history.

- A store middleware that listens to new messages and communicates with OpenAI when necessary.

- Another middleware that listens to new messages and triggers a function execution when needed.

- A good measure of React/Angular/Vue (to taste) to build the UI.
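A minimal sketch of the store ingredient, written as a plain Redux-style reducer so it runs without any library. The action names are hypothetical; the message fields (`role`, `content`, `name`, `function_call`) mirror the function-calling Chat Completions API. The conversation history is the only state, and everything else can be derived from it.

```typescript
// Message fields mirror the function-calling Chat Completions API.
interface FunctionCall {
  name: string;
  arguments: string; // JSON-encoded arguments chosen by the model
}

interface ChatMessage {
  role: "system" | "user" | "assistant" | "function";
  content: string | null;
  name?: string; // name of the executed function, for role "function"
  function_call?: FunctionCall; // set when the model asks the app to run a function
}

interface ChatState {
  messages: ChatMessage[];
}

type ChatAction =
  | { type: "chat/messageAdded"; message: ChatMessage }
  | { type: "chat/reset" };

const initialState: ChatState = { messages: [] };

// The history is append-only: every step of the flow adds a message.
function chatReducer(state: ChatState = initialState, action: ChatAction): ChatState {
  switch (action.type) {
    case "chat/messageAdded":
      return { messages: [...state.messages, action.message] };
    case "chat/reset":
      return initialState;
    default:
      return state;
  }
}
```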

Let’s see how it works.

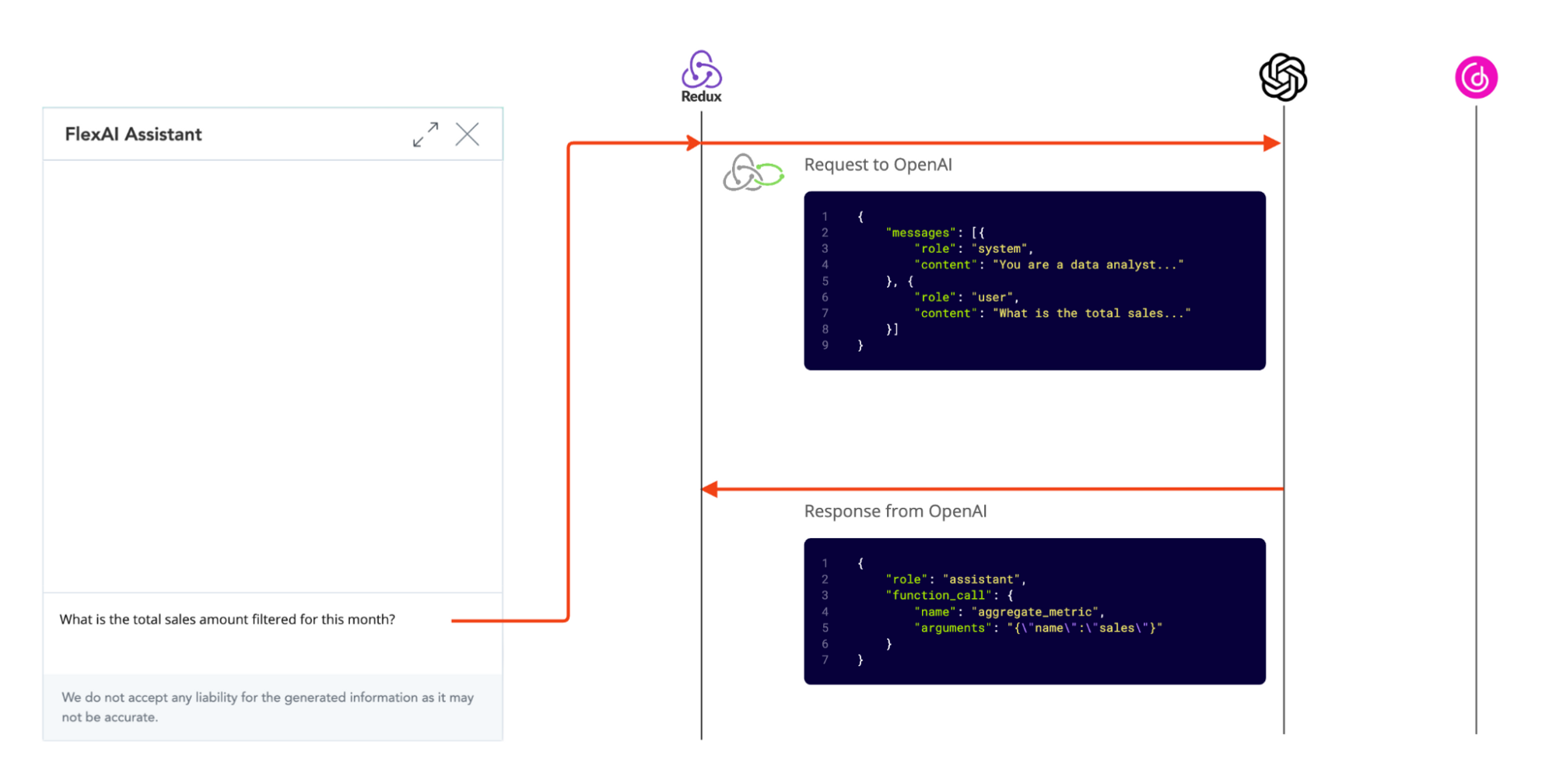

Step 1: Taking the order

It all starts with the user asking a question. A new message is added to the store, and a middleware is triggered to send the whole message stack to the OpenAI server.

Upon receiving the response from the LLM, we put it into the store as a new message.

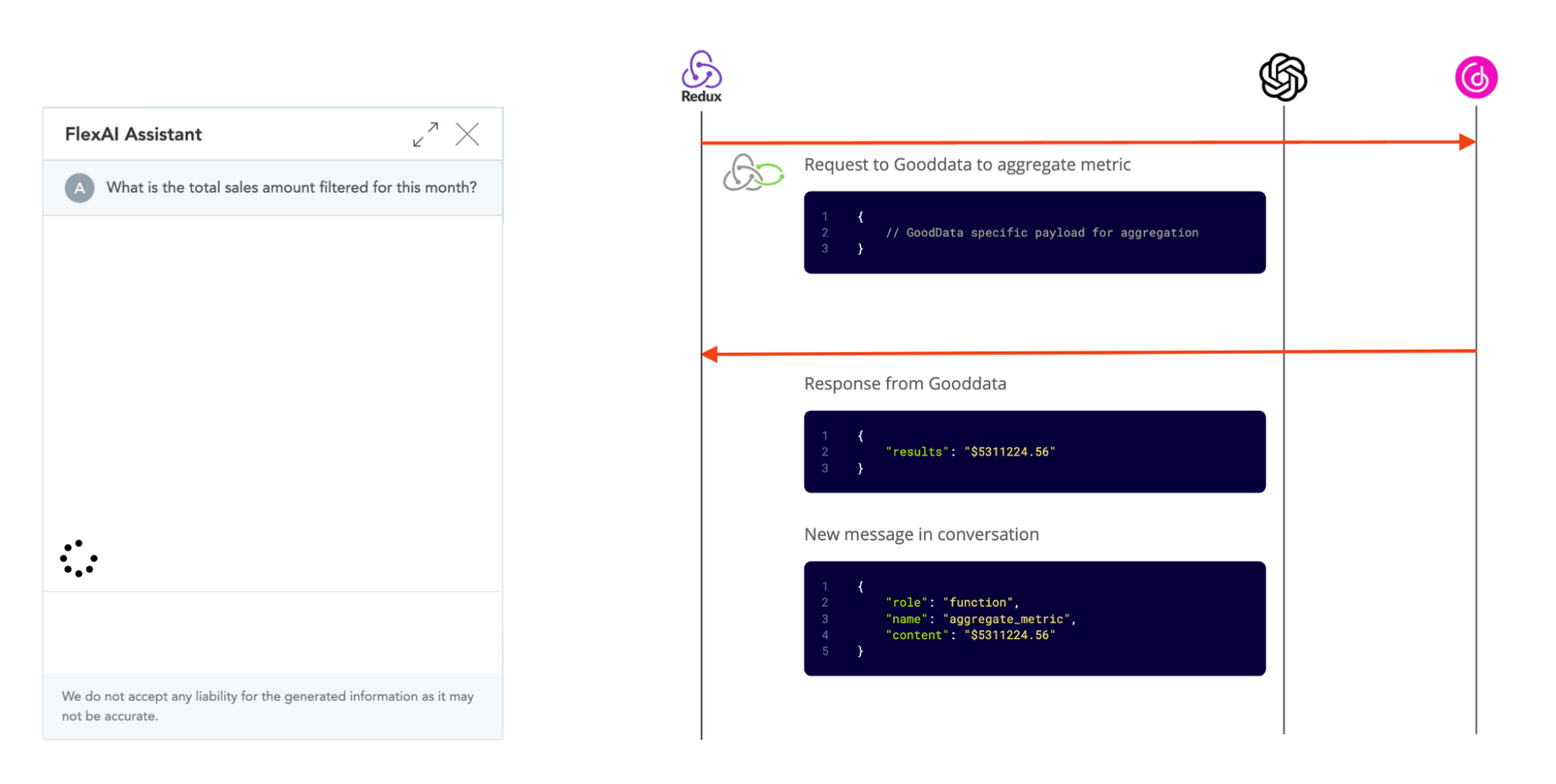
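Step 1 could be implemented as a Redux-style middleware like the sketch below. To keep it self-contained, the network call is injected as a hypothetical `callChat` function; in a real app it would POST the messages to the Chat Completions endpoint (ideally through a proxy) and return `choices[0].message` from the response. The middleware reacts to user messages and, as Step 3 below requires, to function results too, always sending the entire history.

```typescript
interface FunctionCall { name: string; arguments: string }

interface ChatMessage {
  role: "system" | "user" | "assistant" | "function";
  content: string | null;
  name?: string;
  function_call?: FunctionCall;
}

type ChatAction = { type: "chat/messageAdded"; message: ChatMessage };

// Minimal Redux-compatible middleware types, defined here to avoid a dependency.
interface MiddlewareApi {
  getState: () => { messages: ChatMessage[] };
  dispatch: (action: ChatAction) => unknown;
}
type Middleware = (
  api: MiddlewareApi,
) => (next: (action: ChatAction) => unknown) => (action: ChatAction) => unknown;

// Hypothetical transport: sends the messages to the LLM and resolves with its reply.
type CallChat = (messages: ChatMessage[]) => Promise<ChatMessage>;

const createLlmMiddleware =
  (callChat: CallChat): Middleware =>
  (api) =>
  (next) =>
  (action) => {
    const result = next(action); // let the reducer store the new message first
    const { role } = action.message;
    if (role === "user" || role === "function") {
      // The API is stateless, so the whole history goes out every time.
      callChat(api.getState().messages)
        .then((reply) => api.dispatch({ type: "chat/messageAdded", message: reply }))
        .catch((err: unknown) => console.error("LLM request failed", err));
    }
    return result;
  };
```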

Step 2: Cooking the analytics

Now, let’s assume a more complex scenario: OpenAI has deduced that the user wants to see an aggregated metric. The language model can’t possibly know the answer to that, so it asks our app for a function call.

Another middleware is triggered this time: the one that’s listening for function calls. It executes the function and adds the result back to the store as a new message.

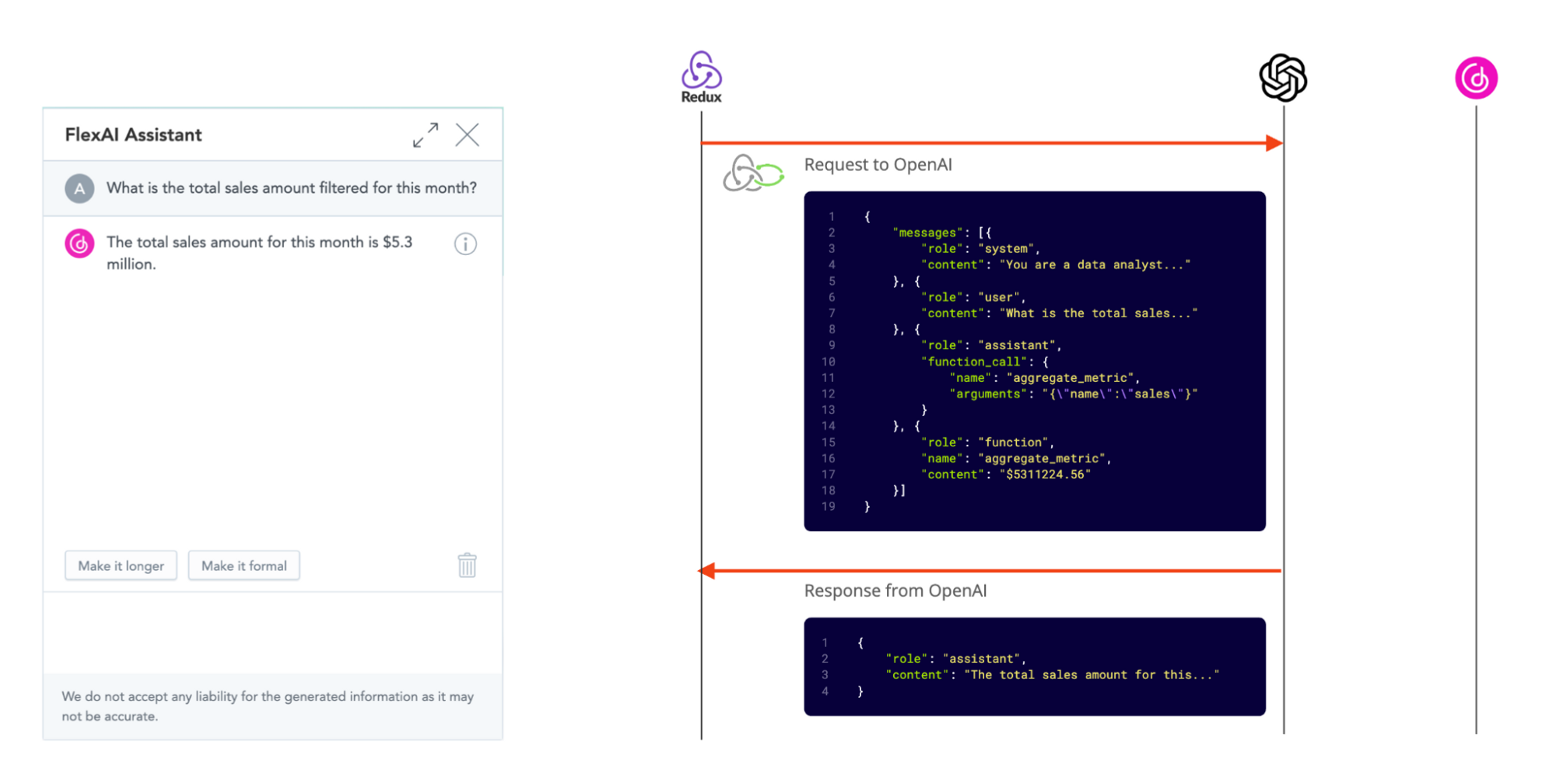
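The function-call middleware from Step 2 can follow the same pattern. In this sketch, `registry` is a hypothetical map from function names (such as `aggregate_metric`) to app code. The model sends `function_call.arguments` as a JSON string that isn’t guaranteed to be valid, so parse errors and unknown function names are reported back as the function result instead of crashing the app. The result message uses the `function` role with a `name`, as the function-calling API expects.

```typescript
interface FunctionCall { name: string; arguments: string }

interface ChatMessage {
  role: "system" | "user" | "assistant" | "function";
  content: string | null;
  name?: string;
  function_call?: FunctionCall;
}

type ChatAction = { type: "chat/messageAdded"; message: ChatMessage };
interface MiddlewareApi { dispatch: (action: ChatAction) => unknown }
type Middleware = (
  api: MiddlewareApi,
) => (next: (action: ChatAction) => unknown) => (action: ChatAction) => unknown;

// Hypothetical app functions the model is allowed to request, keyed by name.
type AppFunction = (args: Record<string, unknown>) => Promise<unknown>;

const createFunctionMiddleware =
  (registry: ReadonlyMap<string, AppFunction>): Middleware =>
  (api) =>
  (next) =>
  (action) => {
    const result = next(action);
    const call = action.message.function_call;
    if (action.message.role === "assistant" && call) {
      const execute = async (): Promise<unknown> => {
        const fn = registry.get(call.name);
        if (!fn) throw new Error(`Unknown function: ${call.name}`);
        // JSON.parse may throw on malformed arguments; that becomes a rejection here.
        return fn(JSON.parse(call.arguments) as Record<string, unknown>);
      };
      execute()
        .then(
          (value) => ({ value }),
          (err: unknown) => ({ error: String(err) }),
        )
        .then((payload) =>
          api.dispatch({
            type: "chat/messageAdded",
            // The outcome goes back into the store as a "function" message.
            message: { role: "function", name: call.name, content: JSON.stringify(payload) },
          }),
        );
    }
    return result;
  };
```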

Step 3: Serving the user

Finally, the middleware sends the function result back to the OpenAI server, together with the complete conversation history. We can’t send just the last message, as OpenAI wouldn’t have the necessary context to even know what the user’s question was. The API is stateless, after all. This time the assistant’s response is textual and doesn’t trigger any middleware.
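The three steps boil down to one rule about the last message in the history. The sketch below spells it out with a hypothetical `nextStep` helper; it combines the trigger conditions of the two middlewares and shows why a textual assistant reply ends the round trip.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant" | "function";
  content: string | null;
  function_call?: { name: string; arguments: string };
}

type NextStep = "call_llm" | "run_function" | "idle";

// Decides what the app should do after the last message was added to the store.
function nextStep(messages: ChatMessage[]): NextStep {
  const last = messages[messages.length - 1];
  if (!last) return "idle";
  // Steps 1 and 3: a user question or a function result goes (with the full history) to the LLM.
  if (last.role === "user" || last.role === "function") return "call_llm";
  // Step 2: the model asked the app to run a function.
  if (last.role === "assistant" && last.function_call) return "run_function";
  // A textual assistant reply (or a lone system message) needs no further action.
  return "idle";
}
```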

Meanwhile, our frontend displays only the messages that make sense to the user, i.e. the user’s own messages and the assistant’s textual responses. It can also derive the loading state right from the message stack: if the last message in the stack is not a textual response to the user, we’re in the loading state.
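Both rules are easy to express as selectors over the message stack. A minimal sketch with hypothetical selector names and the API’s message shape; system messages are ignored so that a fresh conversation isn’t shown as loading.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant" | "function";
  content: string | null;
  function_call?: { name: string; arguments: string };
}

// A reply the user should see: from the assistant, with text, not a function call.
const isTextualReply = (m: ChatMessage): boolean =>
  m.role === "assistant" && !m.function_call && !!m.content;

// Only user messages and textual assistant replies are rendered in the chat.
function selectVisibleMessages(messages: ChatMessage[]): ChatMessage[] {
  return messages.filter((m) => m.role === "user" || isTextualReply(m));
}

// Loading = the conversation has started and its last message is not a textual reply.
function selectIsLoading(messages: ChatMessage[]): boolean {
  const conversation = messages.filter((m) => m.role !== "system");
  const last = conversation[conversation.length - 1];
  return last !== undefined && !isTextualReply(last);
}
```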

Are we there yet?

There is an old joke among UX folks about the perfect user interface. It should look like this:

A single huge green button that guesses and does exactly what the user wants. Unfortunately, we’re not quite there yet. Generative AI is not a silver bullet that will replace the user interface as we know it; instead, it’s a useful and powerful addition to the frontend engineer’s toolset. Here are a few practical pieces of advice on how to use this new tool.

Pick the use cases carefully

Sometimes it’s still easier to just press a few buttons and check a few checkboxes. Don’t force the user to talk to AI.

A good application for generative AI is a relatively simple, but tedious and boring task.

Don’t try to replace the user

Don’t make decisions or take actions on the user’s behalf. Instead, suggest a solution and let the user confirm any action explicitly. GenAI still makes mistakes quite often and needs supervision.

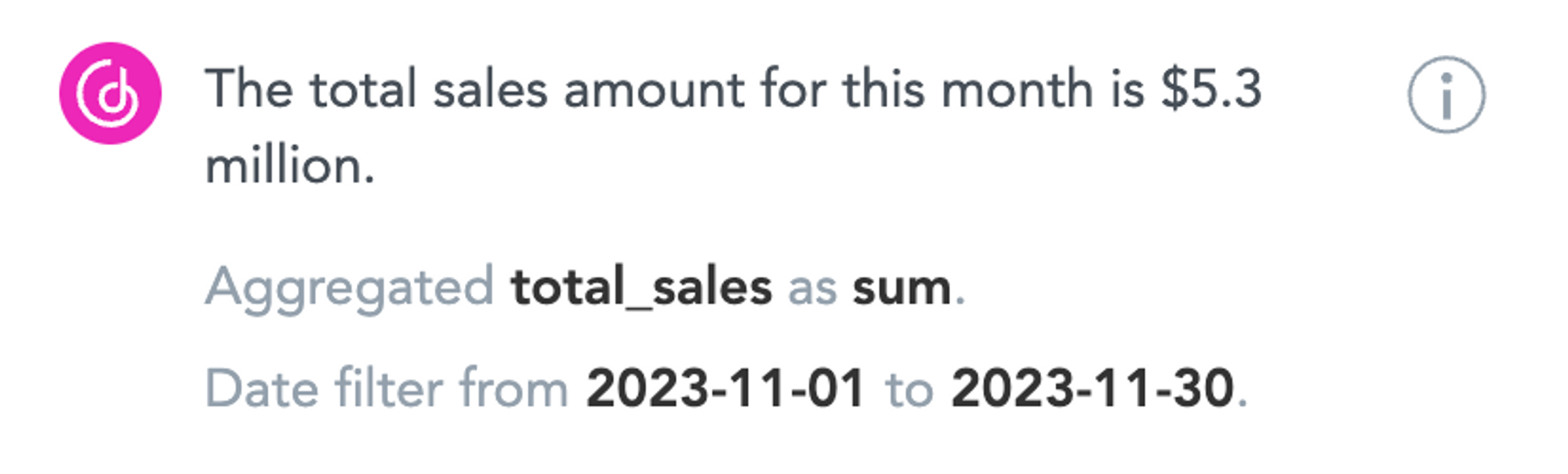
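In the recipe above, one way to follow this advice is to turn state-changing function calls into pending suggestions that run only after the user clicks a confirm button. A minimal sketch; the `Suggestion` type, the helper names and the `create_dashboard` function are hypothetical, and read-only calls such as an aggregation could still run directly.

```typescript
interface FunctionCall { name: string; arguments: string }

// A model-proposed action waiting for the user's decision.
interface Suggestion {
  call: FunctionCall;
  state: "pending" | "confirmed" | "dismissed";
}

// Instead of executing the call, wrap it so the UI can show Confirm/Dismiss buttons.
const toSuggestion = (call: FunctionCall): Suggestion => ({ call, state: "pending" });

// Runs the action only on explicit confirmation, and never twice.
function confirmSuggestion(s: Suggestion, execute: (call: FunctionCall) => void): Suggestion {
  if (s.state !== "pending") return s;
  execute(s.call);
  return { ...s, state: "confirmed" };
}

// A dismissed suggestion can no longer be confirmed.
function dismissSuggestion(s: Suggestion): Suggestion {
  return s.state === "pending" ? { ...s, state: "dismissed" } : s;
}
```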

It’s also a good idea to give the user a way to quickly validate the AI assistant’s response, ideally without leaving the chat interface. We’ve solved this by adding an “explain” function that tells the user exactly which metric and which dimensions we used to calculate the aggregation.
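The details of our “explain” function aren’t covered here. As a hypothetical sketch, such a function (which the model can request like any other) could derive its answer straight from the conversation history: find the latest `aggregate_metric` call and read back the metric and dimensions the model actually sent, so the user sees what was calculated rather than what the model says was calculated. The argument shape is an assumption.

```typescript
interface FunctionCall { name: string; arguments: string }

interface ChatMessage {
  role: "system" | "user" | "assistant" | "function";
  content: string | null;
  function_call?: FunctionCall;
}

// Hypothetical arguments of the aggregate_metric function.
interface AggregateArgs { metric?: string; dimensions?: string[] }

// Describes the most recent aggregation using the arguments the model actually sent.
function explainLastAggregation(messages: ChatMessage[]): string {
  for (let i = messages.length - 1; i >= 0; i--) {
    const call = messages[i]?.function_call;
    if (!call || call.name !== "aggregate_metric") continue;
    let args: AggregateArgs;
    try {
      args = JSON.parse(call.arguments) as AggregateArgs;
    } catch {
      return "The last aggregation request could not be read.";
    }
    const dims =
      args.dimensions && args.dimensions.length > 0 ? args.dimensions.join(", ") : "none (grand total)";
    return `Metric: ${args.metric ?? "unknown"}; dimensions: ${dims}.`;
  }
  return "No aggregation has been calculated in this conversation yet.";
}
```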

Keep it simple

A few simple, dedicated assistants in strategic places of the app are better than one “do it all” chatbot. Each assistant has less background context to take into account and fewer functions to choose from, so ultimately it will make fewer mistakes, run faster and be cheaper to operate.
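In code, this can simply mean one small configuration per assistant, each with its own system prompt and a short list of functions. A hypothetical sketch; the assistant ids, prompts and function names are illustrative.

```typescript
interface FunctionSpec {
  name: string;
  description: string;
  parameters: Record<string, unknown>;
}

interface AssistantConfig {
  model: string;
  systemPrompt: string;
  functions: FunctionSpec[]; // only what this assistant needs
}

type AssistantId = "metricQuestions" | "chartTitles";

// Hypothetical dedicated assistants, each living in one part of the app.
const assistants: Record<AssistantId, AssistantConfig> = {
  metricQuestions: {
    model: "gpt-3.5-turbo",
    systemPrompt: "Answer questions about metric values in the current workspace.",
    functions: [
      {
        name: "aggregate_metric",
        description: "Aggregate a metric",
        parameters: { type: "object", properties: { metric: { type: "string" } }, required: ["metric"] },
      },
      { name: "explain", description: "Explain the last aggregation", parameters: { type: "object", properties: {} } },
    ],
  },
  chartTitles: {
    model: "gpt-3.5-turbo",
    systemPrompt: "Suggest a short title for the chart the user is editing.",
    functions: [],
  },
};

interface AssistantRequest {
  model: string;
  messages: { role: "system" | "user"; content: string }[];
  functions?: FunctionSpec[];
}

// Builds a request carrying only the chosen assistant's context and functions.
function buildAssistantRequest(id: AssistantId, userText: string): AssistantRequest {
  const cfg = assistants[id];
  const request: AssistantRequest = {
    model: cfg.model,
    messages: [
      { role: "system", content: cfg.systemPrompt },
      { role: "user", content: userText },
    ],
  };
  // Send the functions field only when this assistant actually has functions.
  if (cfg.functions.length > 0) request.functions = cfg.functions;
  return request;
}
```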

Conclusions

These are exciting times to be a front-end engineer. Our colleagues in AI have created an amazing piece of technology, and it’s now up to us to cook up some delightful treats for our users.

Want to read more about the experiments we’re running at GoodData? Check out our Labs environment documentation.

Want to enter the test kitchen yourself? Sign up for our GoodData Labs environment waitlist.

Want to talk to an expert? Connect with us through our Slack community.
