This article kicks off a series about our Analytics Stack (FlexQuery) and the Longbow engine powering it.

Have you ever implemented a bunch of data services interacting with clients and communicating with each other? Has maintenance become a huge burden? Do you have to write boilerplate too often? Is performance a concern? Or have you ever needed to embed a custom data service into a third-party platform?

Imagine you implement a nice prediction algorithm and seamlessly embed it into a BI platform so end users can consume it. Sci-fi? Well, for us it’s not!

Building and maintaining data services, especially for analytics platforms, is often challenging – from performance issues to complex integrations with third-party platforms. To address these, we created a framework designed to significantly improve developer efficiency and ease the integration of data services, leveraging cutting-edge technologies like Apache Arrow for maximal performance and reliability. We call it Longbow and it powers the heart of our Analytics Stack – FlexQuery.

Our journey, led by Lubomir (lupko) Slivka, aims to revolutionize GoodData’s analytics offerings, transforming our traditional BI platform into a robust Analytics Lake. This transformation was motivated by the need to modernize our stack, taking full advantage of open-source technologies and modern architectural principles to better integrate with cloud platforms.

Building Data Services is Hard!

Imagine you have decided to build a stack powering an analytics (BI) platform. You need to implement various data services for basic analytics use cases such as pivoting, machine learning, or caching.

All services share common needs, like:

- Config management

- Routing, load balancing

- Deployment flexibility, horizontal scaling

- Multi-tenancy, resource limits

Ideally, you would like to clone a skeleton data service and implement only the service logic, e.g. pivoting, without handling the above. Ultimately, you would like to allow third parties to implement and embed their custom services. That is where we at GoodData are currently heading, and we want to offer it to external audiences.

Our Initial Motivation – GoodData 2.0

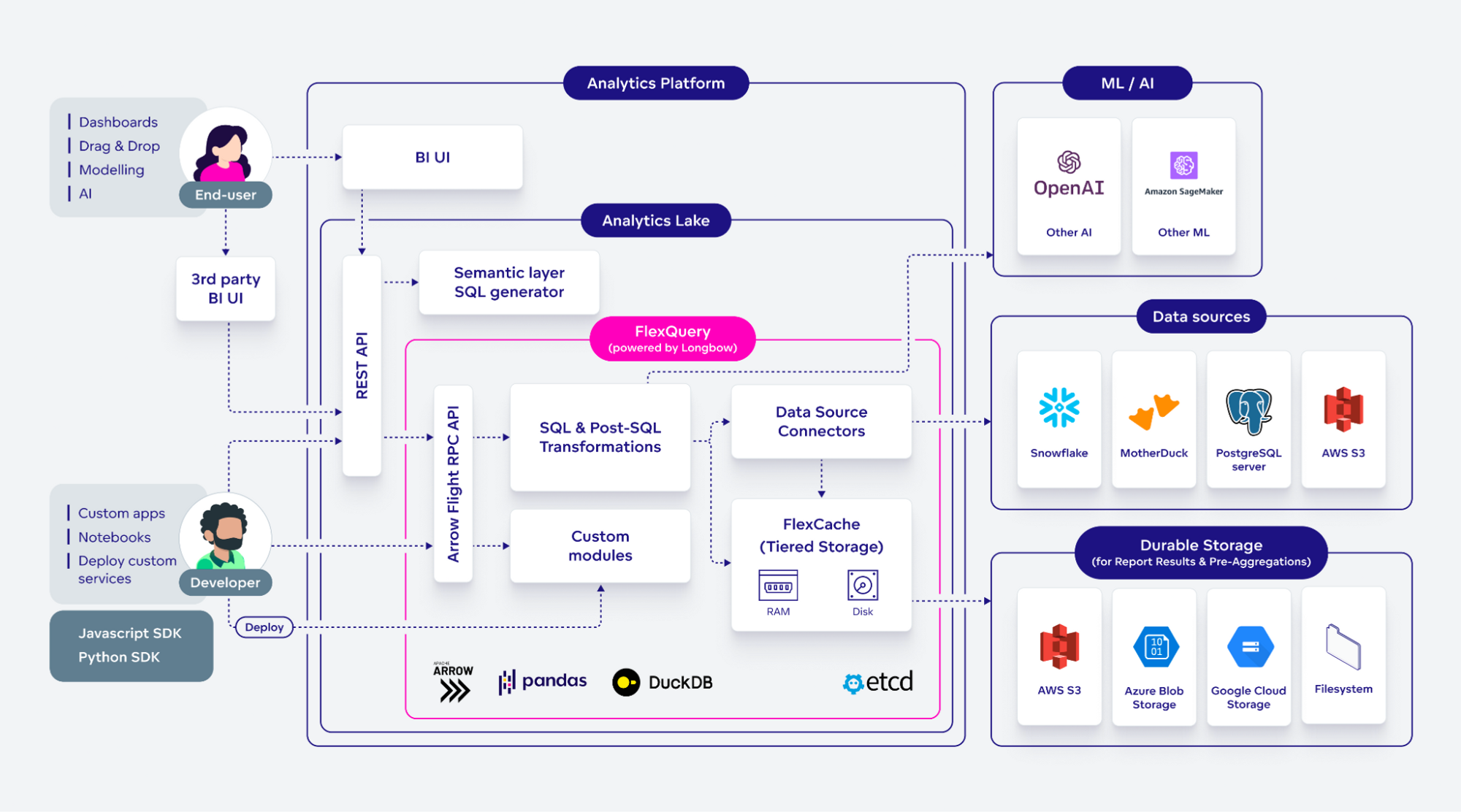

At GoodData, we build a Business Intelligence and Analytics platform. The heart of our platform is a set of services we call Analytics Lake – a set of components and services that are responsible for figuring out what to compute, querying the data sources, post-processing and possibly caching the results, and finally serving the results to consumers.

We built the first version of our platform more than fifteen years ago, with the technology available at that time; we combined it with large amounts of our own proprietary code.

As we embarked on building our new GoodData Cloud solution (you may call it GoodData 2.0), we decided to rebuild and refresh our somewhat-outdated stack so that it takes advantage of the available open-source technologies and modern architectural approaches, and can fit well into public cloud platforms.

Very early in our new journey, we discovered the Apache Arrow project and realized that it provides a very strong foundation that we could use when building our new stack. Later on, we got excited about the DuckDB database and partnered with MotherDuck.

We also realized that there is demand from our customers to embed custom services, and that is why, from the very beginning, we had this use case in mind while designing the architecture.

Anatomy of an Analytics Lake

GoodData strongly believes in semantic modeling for BI and analytics – and our new stack follows this mindset as well. The semantic layer acts as an indirection between two key components:

- The logical model used for creating and consuming analytics features.

- The physical model of the actual data that analytics are derived from.

We often compare this to Object-Relational Mapping (ORM) in programming – where the object model is mapped to a physical relational model.

To provide this indirection, our stack includes a specialized query engine, which is built upon the Apache Calcite project. This engine plays a crucial role in translating the semantic model and user requests into a physical execution plan.
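To make the logical-to-physical indirection more tangible, here is a minimal sketch in plain Python. It is not our query engine and has nothing to do with Apache Calcite internals: the `LOGICAL_TO_PHYSICAL` mapping, the `compile_request` function, and the logical names are all hypothetical and exist only for illustration.

```python
# Hypothetical mapping from logical (semantic) names to physical SQL expressions.
LOGICAL_TO_PHYSICAL = {
    "Order Priority": "o_orderpriority",
    "Order Count": "count(*)",
}


def compile_request(attributes, metrics, table):
    """Translate a logical request (attributes + metrics) into a SQL string."""
    select_parts = [
        f'{LOGICAL_TO_PHYSICAL[name]} AS "{name}"' for name in attributes + metrics
    ]
    # Attributes become grouping keys; metrics are aggregated per group.
    group_by = [LOGICAL_TO_PHYSICAL[name] for name in attributes]
    sql = f"SELECT {', '.join(select_parts)} FROM {table}"
    if group_by:
        sql += f" GROUP BY {', '.join(group_by)}"
    return sql


sql = compile_request(["Order Priority"], ["Order Count"], "orders")
print(sql)
```

The consumer of analytics only ever talks in logical names; the physical column names and the table can change without touching the request.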

However, how does one realize such a physical execution plan? Well, that’s where our brand-new engine, Longbow, comes into play. It powers the Analytics Stack we call FlexQuery, which is responsible for:

- Data source querying and pre-aggregations: Executing queries and preparing data.

- Data post-processing: Enhancing data by pivoting, sorting, merging, or applying ML.

- Caching: Storing results and pre-aggregations to reduce latency.

And this is where Apache Arrow and other projects in the open-source data and analytics ecosystem come into play.

Why we chose Apache Arrow

Usually, when we mention ‘Arrow’ to someone, they know about the data format that is great for analytics because it is columnar and in-memory.

In fact, the Apache Arrow project is way bigger and more robust than ‘just’ the data format itself and offers, among others:

- Efficient I/O operations for file systems like S3 or GCS.

- Converters between various formats, including CSV and Parquet.

- JDBC result set converters.

- Streaming computation capabilities with the Acero engine.

- Flight RPC for creating data services.

- Arrow Database Connectivity (ADBC) for standardized database interactions.

So in a nutshell, going with Arrow in our analytics stack allows us to streamline lower-level technicalities, enabling us to focus on delivering our added value.
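If the ‘columnar and in-memory’ part sounds abstract, the following sketch may help. It uses Python’s standard `array` module purely as a stand-in for typed, contiguous column buffers; it is not the Arrow format, and the sample rows are made up.

```python
from array import array

# Row-oriented layout: all values of one record are stored together.
rows = [
    {"o_orderkey": 1, "o_totalprice": 10.5},
    {"o_orderkey": 2, "o_totalprice": 20.0},
    {"o_orderkey": 3, "o_totalprice": 4.5},
]

# Column-oriented layout: each column is one contiguous, typed buffer.
columns = {
    "o_orderkey": array("q", (r["o_orderkey"] for r in rows)),    # 64-bit integers
    "o_totalprice": array("d", (r["o_totalprice"] for r in rows)),  # 64-bit floats
}

# An aggregation reads a single buffer and never touches the other columns.
total = sum(columns["o_totalprice"])
print(total)
```

Analytical queries typically aggregate a few columns over many rows, which is why a layout like this suits them better than a row-by-row one.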

Now, where things get even more interesting is the integration with other open-source technologies that further enrich our stack’s capabilities:

- Pandas: Used for data processing and exporting (with native Arrow data support). We are also exploring alternatives like Polars.

- DuckDB: An in-process OLAP database for SQL queries on pre-aggregations.

- turbodbc: Provides efficient Arrow format result sets.

All of them integrate very efficiently with Arrow and can work with Arrow data almost seamlessly, without too much friction.

What we set out to build

Our decision to develop our stack around Arrow Flight RPC led us to build it as a series of data services tailored to specific needs:

- Data Querying Services: For accessing various data sources.

- Post-Processing Services: Using dataframe operations to refine queried results.

- Caching Services: For storing both results and intermediate data.

We anticipated the future addition of services for storing pre-aggregations and using SQL for queries on cached or pre-aggregated data.

Arrow Flight RPC enables our analytics stack’s ‘physical’ components to expose a consistent API for higher-level components, such as the query engine and orchestrators, enhancing the functionality and value provided.

The idea is that if we focus on creating well-granulated services with well-defined responsibilities, then despite the initial cost, it will pay off in reusability and flexibility. This design principle supports the introduction of new product features through the orchestration layer’s interaction with various data services.

As our design evolved, we saw opportunities to boost the system’s cohesion through Arrow Flight RPC, enabling services to cooperate on advanced functions like transparent caching.

This flexibility also means that you have more ways to design services. Let’s showcase this on two approaches to caching:

- Orchestrating calls: You orchestrate data service calls (e.g., pivoting) with a cache service call. In most cases, you will chain a cache service call, because in analytics most data services do heavy lifting, so it makes sense to cache most results.

- Reusable service template: You can also define the caching in the template, so when a developer calls a data service (e.g., an SQL execution), the caching is managed transparently under the hood – if the result is already cached, it is reused; otherwise, the data source is queried.

Clearly, in the case of caching, we opted for the second option. The beauty of the FlexQuery ecosystem is its flexibility: you can design both ways based on the use case, and if you change your mind, you can migrate from one approach to the other with little effort.
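The difference between the two caching approaches can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: `pivot_service`, `orchestrated_call`, and `with_transparent_cache` are hypothetical names, the cache is an in-process dictionary, and none of this reflects the actual FlexQuery API.

```python
from typing import Callable, Dict

Cache = Dict[str, bytes]


def pivot_service(request: str) -> bytes:
    """Stand-in for an expensive data service call; counts its invocations."""
    pivot_service.calls += 1
    return f"result-of-{request}".encode()


pivot_service.calls = 0


def orchestrated_call(cache: Cache, request: str) -> bytes:
    """Approach 1: the caller chains the cache lookup and the service call."""
    if request not in cache:
        cache[request] = pivot_service(request)
    return cache[request]


def with_transparent_cache(service: Callable[[str], bytes], cache: Cache):
    """Approach 2: the template wraps the service; callers never see the cache."""

    def cached_service(request: str) -> bytes:
        if request not in cache:
            cache[request] = service(request)
        return cache[request]

    return cached_service


cached_pivot = with_transparent_cache(pivot_service, cache={})
first = cached_pivot("q1")
second = cached_pivot("q1")  # served from the cache; the service is not called again
```

With the second approach the caching decision lives in one place, so every service built from the template behaves the same way without extra code at the call sites.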

So in the end, we set out on the path to build a cohesive system of data services that implement the Arrow Flight RPC and provide foundational capabilities in our stack.

From Developers to Developers

Developer velocity is key. I mean – you can provide the most sophisticated platform to developers, but if it takes months to onboard and weeks to build a new simple data service, it’s useless.

That’s why we focused on this aspect and provided a skeleton module, which developers can clone and then develop only the logic of the data service itself, without any boilerplate.

Now, imagine you need to execute custom (analytics) SQL queries on top of multiple data sources. Sounds hard? That’s why we integrated the DuckDB in-process OLAP engine into our data service framework.

What does our data service look like? Each service within our FlexQuery ecosystem is designed to be versatile, accommodating various data storage scenarios: whether you have data in CSV files, databases, or already cached, our service is equipped to handle it efficiently.

Let’s illustrate our commitment to facilitating easy and efficient development processes within our ecosystem.

To do that, let’s look at the process of executing the infamous TPC-H query #4:

    tpch_q4 = """
    select o_orderpriority, count(*) as order_count
    from orders
    where
        o_orderdate >= date '1993-07-01'
        and o_orderdate < date '1993-07-01' + interval '3' month
        -- not part of TPC-H, but demonstrates multi-tenancy
        and o_tenant = 'tenant1'
        and exists (
            select 1 from lineitem
            where l_orderkey = o_orderkey and l_commitdate < l_receiptdate
        )
    group by o_orderpriority order by o_orderpriority
    """

And now the execution:

    import orjson
    import quiver_core.api as qc
    from quiver_connector import ConnectorQuery
    from quiver_sql_query.service.sql_query import SqlQuery, TableData

    tpch4_pqtrim = SqlQuery(
        sql=tpch_q4,
        tables=(
            # Each table is fed by its own connector query; only the listed columns are read.
            TableData(
                table_name="orders",
                data=ConnectorQuery(
                    payload=orjson.dumps(
                        {
                            "type": "parquet-file",
                            "path": "tpch/orders.parquet",
                            "columns": ["o_orderpriority", "o_orderdate", "o_orderkey"],
                        }
                    ),
                    # Sink the loaded table to a flight path (skipped if it already exists).
                    sink_method=qc.SinkToFlightPath(
                        flight_path="org1/tenant1/tpch/datasets/orders", skip_if_exists=True
                    ),
                ).to_flight_descriptor(ds_id="my-files"),
            ),
            TableData(
                table_name="lineitem",
                data=ConnectorQuery(
                    payload=orjson.dumps(
                        {
                            "type": "parquet-file",
                            "path": "tpch/lineitem.parquet",
                            "columns": ["l_orderkey", "l_commitdate", "l_receiptdate"],
                        }
                    ),
                ).to_flight_descriptor(ds_id="my-files"),
            ),
        ),
        # Sink the query result as well.
        sink_method=qc.SinkToFlightPath(
            flight_path="org1/tenant1/tpch/reports/4.sql", skip_if_exists=True
        ),
    )

    q = qc.QuiverClient("grpc://localhost:16004")

    with q.flight_descriptor(tpch4_pqtrim.to_flight_descriptor()) as stream:
        result = stream.read_all()

In this scenario, we execute a query using two Parquet files from the ‘my-files’ data source, which references an AWS S3 bucket. This setup demonstrates the flexibility of our system, as developers can designate distinct data sources for each TableData.

The beauty of it boils down to three points:

- Decoupled Data Connectors: The TableData connectors are independent of the SQL execution service, allowing you to “hot-swap” data sources.

- Efficient Caching: Both TableData and the query results can be stored in FlexQuery’s caching system (FlexCache – Arrow format). The system automatically reuses the cache when available, eliminating the need for manual developer intervention.

- Dynamic Data Handling: The system can trim columns on the fly and supports predicate pushdown for optimization.
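Column trimming and predicate pushdown both mean that filtering happens at the source rather than after everything has been loaded. The sketch below shows the idea with the standard library only; the `scan` function and the inline CSV sample are hypothetical, and this is not how FlexQuery implements it.

```python
import csv
import io

CSV_DATA = """o_orderkey,o_orderpriority,o_orderdate,o_comment
1,1-URGENT,1993-07-15,foo
2,3-MEDIUM,1994-01-02,bar
3,2-HIGH,1993-08-20,baz
"""


def scan(source, columns, predicate):
    """Yield only the requested columns of the rows that pass the predicate.

    Filtering happens while reading, so unwanted rows and columns are never
    handed over to the consumer.
    """
    for row in csv.DictReader(io.StringIO(source)):
        if predicate(row):
            yield {name: row[name] for name in columns}


trimmed = list(
    scan(
        CSV_DATA,
        columns=["o_orderkey", "o_orderpriority"],
        # ISO dates compare correctly as plain strings.
        predicate=lambda r: "1993-07-01" <= r["o_orderdate"] < "1993-10-01",
    )
)
print(trimmed)
```

The consumer receives two narrow rows instead of three wide ones; on real data sets the savings in I/O and memory are what make the optimization worthwhile.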

And now let’s review how such a data service is implemented. The entry point of each service looks like this:

    def create_task(
        self, cmd_envelope: qc.FlightCmdEnvelope, headers: qm.FlightRpcHeaders
    ) -> Task[SqlQuery]:
        # Decode the command payload into a typed SqlQuery request.
        sql_query = SqlQuery.from_bytes(cmd_envelope.cmd.payload)
        return SqlQueryTask(internal_api=self, cmd=sql_query)

The entry point returns an instance of a class whose run method implements the heavy lifting.
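The split between an entry point that builds a task and a task that does the work can be shown with a toy service. This is a simplified sketch and not the Longbow API: `Task`, `EchoCommand`, `EchoTask`, `EchoService`, and `handle_request` are all hypothetical names, and the real framework passes richer objects than raw bytes.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


class Task(ABC):
    """Unit of work that the (hypothetical) framework schedules and runs."""

    @abstractmethod
    def run(self) -> str: ...


@dataclass
class EchoCommand:
    text: str

    @classmethod
    def from_bytes(cls, payload: bytes) -> "EchoCommand":
        return cls(text=payload.decode("utf-8"))


class EchoTask(Task):
    def __init__(self, cmd: EchoCommand) -> None:
        self._cmd = cmd

    def run(self) -> str:
        # The service-specific logic lives here and nowhere else.
        return self._cmd.text.upper()


class EchoService:
    def create_task(self, payload: bytes) -> Task:
        # Entry point: decode the command and hand back a task to run.
        return EchoTask(EchoCommand.from_bytes(payload))


def handle_request(service: EchoService, payload: bytes) -> str:
    """Framework side: it only knows about create_task() and run()."""
    return service.create_task(payload).run()


result = handle_request(EchoService(), b"hello")
```

Because the framework depends only on the two methods, everything else (serving, routing, scaling) can stay out of the service author’s code.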

For the sake of simplicity, let’s look only at how a service gets the DuckDB instance. Interested in creating a service from scratch? Watch GoodData channels, we’re going to publish more detailed articles!

    acquire_start = time.perf_counter()
    instance = self._api.acquire_duckdb_instance(
        timeout=self._task_config.acquire_duckdb_timeout
    )
    acquire_duration = time.perf_counter() - acquire_start
    self._logger.debug("duckdb_acquired", duration=acquire_duration)
    SqlQueryModuleMetrics.ENGINE_ACQUIRE_DURATION.observe(acquire_duration)

There’s an API for acquiring DuckDB instances, easy. We also provide a system of metrics for good maintainability (at GoodData we export them to Prometheus).
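The measure-then-observe pattern above is easy to reuse if it is wrapped in a context manager. The following is a sketch using only the standard library: the `Histogram` class is a tiny stand-in for a real metrics object, and `timed` and `ACQUIRE_DURATION` are hypothetical names, not part of our framework.

```python
import time
from contextlib import contextmanager


class Histogram:
    """Tiny stand-in for a metrics histogram; it just records observations."""

    def __init__(self) -> None:
        self.observations = []

    def observe(self, value: float) -> None:
        self.observations.append(value)


@contextmanager
def timed(metric: Histogram):
    """Measure the wall-clock duration of the wrapped block and record it."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Recorded even if the wrapped block raises.
        metric.observe(time.perf_counter() - start)


ACQUIRE_DURATION = Histogram()

with timed(ACQUIRE_DURATION):
    time.sleep(0.01)  # placeholder for acquiring an engine instance
```

Recording in the `finally` branch means slow failures show up in the metric as well, which is usually what you want when hunting for timeouts.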

But how is TableData loaded into DuckDB?

    poll_result = self._api.flight_io.poll_flight_info(
        descriptor=self._descriptor,
        timeout=self._task_config.gather_poll_timeout,
        check_cancel_fun=_check_cancel_fun,
        options=self._call_options,
        debug_name=debug_name,
    )
    flight_data = poll_result.do_get(pushdown)

    with flight_data as reader:
        cursor = instance.cursor()
        try:
            # DuckDB's Python client picks up `reader` by its variable name.
            result = cursor.execute(
                f'CREATE TABLE "{self._table_name}" AS SELECT * FROM reader'
            ).fetchone()
        finally:
            cursor.close()

Have you noticed me talking about starting or handling a server in this chapter? Load balancing? Scaling? No? That’s the beauty of FlexQuery. Internal developers focus on the logic of a new data service they want to embed. External developers just declare complex requests, even chaining multiple services together, and benefit from the results.

Want to learn more?

As we mentioned in the introduction, this is the first part of a series of articles, where we take you on a journey of how we built our new analytics stack on top of Apache Arrow and what we learned about it in the process.

Other parts of the series are about putting Longbow into the context of FlexQuery, details about the flexible storage and caching, and last but not least, how well DuckDB quacks with Apache Arrow!

As you can see in the article, we are opening our platform to an external audience. We not only use (and contribute to) state-of-the-art open-source projects, but we also want to allow external developers to deploy their services into our platform. Ultimately, we are thinking about open-sourcing the whole analytics stack. Would you be interested in such open-sourcing? Let us know, your opinion matters!

If you’d like to discuss our analytics stack (or anything else), feel free to join our Slack community!

Want to see how well it all works in practice? You can try the GoodData free trial! Or if you’d like to try our new experimental features enabled by this new approach (AI, Machine Learning and much more), feel free to sign up for our Labs Environment.