Life is full of binary choices: have that slice of pizza or skip it, carry an umbrella or take a chance. Some decisions are easy; others are unpredictable.

Predicting the right outcome requires data and probability, skills that computers excel at. Since computers speak in binary, they’re great at solving yes-or-no problems.

That’s where machine learning software, specifically the logistic regression algorithm, comes in. It helps predict the likelihood of events based on historical data, such as whether it’ll rain today or whether a customer will make a purchase.

What’s logistic regression?

Logistic regression is a statistical method used to predict the outcome of a dependent variable based on previous observations. It’s a type of regression analysis and a commonly used algorithm for solving binary classification problems.

Regression analysis is a predictive modeling technique that finds the relationship between a dependent variable and one or more independent variables.

For example, time spent studying and time spent on Instagram (independent variables) affect grades (the dependent variable): one positively, the other negatively.

Logistic regression builds on this idea to predict binary outcomes, like whether you’ll pass or fail a class. Although it technically models a continuous quantity (a probability), it’s primarily used for classification problems.

For instance, logistic regression can predict whether a student will be accepted into a university based on factors such as SAT scores, GPA, and extracurricular activities. Using past data, it classifies outcomes as “accept” or “reject.”

Also known as binomial logistic regression, it becomes multinomial when predicting more than two outcomes. Borrowed from statistics, it’s one of the most widely used binary classification algorithms in machine learning.

Logistic regression measures the relationship between the dependent variable (the outcome) and one or more independent variables (the features). It estimates the probability of an event using the logistic function.

TL;DR: Everything you need to know about logistic regression

- What’s logistic regression? Logistic regression is a statistical algorithm used to predict binary outcomes (e.g., yes/no, 0/1) based on one or more input variables.

- What does logistic regression do? It models the probability of a categorical dependent variable using the logistic (sigmoid) function and outputs class labels based on a decision threshold.

- Why does logistic regression matter? It’s widely used in fields such as finance, healthcare, and marketing due to its simplicity, speed, and interpretability in classification tasks.

- What are the benefits of logistic regression? It provides calibrated probabilities, is easy to implement, performs well on small datasets, and helps uncover relationships between features and the outcome.

- What types of logistic regression are there? The main types are binary logistic regression, multinomial logistic regression (for 3+ unordered classes), and ordinal logistic regression (for ordered classes).

- What are the best practices for logistic regression? Ensure a sufficient sample size, check for multicollinearity, use regularization to prevent overfitting, and interpret coefficients in terms of log-odds or odds ratios.

Linear regression vs. logistic regression

While logistic regression predicts a categorical variable from one or more independent variables, linear regression predicts a continuous variable. In other words, logistic regression produces a discrete (categorical) output, whereas linear regression produces a continuous output.

Since the outcome of linear regression is continuous, it can take infinitely many possible values. In logistic regression, the number of possible outcome values is limited (two, in the binary case).

In linear regression, the dependent and independent variables should be linearly related. In logistic regression, the independent variables should be linearly related to the log odds, log(p/(1-p)).
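To make the log odds concrete, here is a minimal standard-library Python sketch of the logit, log(p/(1-p)), and its inverse, the standard sigmoid. The function names `logit` and `sigmoid` are our own illustrative choices, not a library API.

```python
import math

def sigmoid(z: float) -> float:
    """Standard logistic function: maps any real z into (0, 1)."""
    # Branch on the sign of z so math.exp never overflows.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def logit(p: float) -> float:
    """Log odds, log(p / (1 - p)), defined only for 0 < p < 1."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    return math.log(p / (1.0 - p))

# The two functions undo each other: sigmoid(logit(p)) recovers p.
for p in (0.1, 0.5, 0.9):
    z = logit(p)
    print(f"p = {p:.1f}  log-odds = {z:+.3f}  sigmoid(log-odds) = {sigmoid(z):.3f}")
```

The model is linear in the log odds, not in the probability. A one-unit change in a feature therefore shifts the log odds by a fixed amount, while its effect on the probability depends on where you start.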

Tip: Logistic regression can be implemented in any programming language used for data analysis, such as R, Python, Java, and MATLAB.

Whereas linear regression is usually estimated with the ordinary least squares method, logistic regression is estimated with maximum likelihood estimation (MLE).
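To show what “maximum likelihood” means here, the sketch below computes the Bernoulli log-likelihood that MLE maximizes for a one-feature model. The study-hours data and the two candidate coefficient pairs are hypothetical. The point is only that coefficients which fit the labels better earn a higher log-likelihood.

```python
import math

def log1p_exp(z):
    """Numerically stable log(1 + e^z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

def log_likelihood(b0, b1, xs, ys):
    """Bernoulli log-likelihood of a one-feature logistic model.

    For y in {0, 1} and p = sigmoid(z) with z = b0 + b1 * x, the term
    y*log(p) + (1 - y)*log(1 - p) simplifies to y*z - log(1 + e^z).
    MLE picks the b0, b1 that make this sum as large as possible.
    """
    return sum(y * (b0 + b1 * x) - log1p_exp(b0 + b1 * x) for x, y in zip(xs, ys))

hours = [1, 2, 3, 4, 5, 6]   # hypothetical hours studied
passed = [0, 0, 0, 1, 1, 1]  # hypothetical pass (1) / fail (0) labels

# A model whose pass probability rises with study time fits these labels
# better (higher log-likelihood) than one that always predicts 0.5.
print(log_likelihood(-3.5, 1.0, hours, passed))
print(log_likelihood(0.0, 0.0, hours, passed))
```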

Both logistic and linear regression are supervised machine learning algorithms, and they’re the two main types of regression analysis. Logistic regression is used to solve classification problems, while linear regression is primarily used for regression problems.

| Feature | Logistic Regression | Linear Regression |
| --- | --- | --- |
| Output Type | Categorical (0 or 1), via a predicted probability | Continuous (any number) |
| Algorithm Type | Classification | Regression |
| Function Used | Sigmoid (logistic) | Linear equation |
| Output Interpretation | Probability of class membership | Predicted value |
| Common Use Cases | Fraud detection, churn, spam | Sales forecasting, pricing, trends |

Returning to the example of time spent studying, linear regression and logistic regression can be used to predict different outcomes. Logistic regression can help predict whether the student passed an exam, while linear regression can predict the student’s score.

Key terms in logistic regression

The following are some of the common terms used in regression analysis:

- Variable: Any number, characteristic, or quantity that can be measured or counted. Age, speed, gender, and income are examples.

- Coefficient: A number multiplied by the variable it accompanies. For example, in 12y, the number 12 is the coefficient. In regression, coefficients are estimated from data and are usually real numbers rather than integers.

- EXP: Short form of exponential; exp(x) means e raised to the power x.

- Outliers: Data points that differ significantly from the rest.

- Estimator: An algorithm or formula that generates estimates of parameters.

- Chi-squared test: Also called the chi-square test, it’s a hypothesis testing method used to check whether observed data match what is expected.

- Standard error: The estimated standard deviation of a statistic’s sampling distribution, such as that of a coefficient estimate.

- Regularization: A method used to reduce error and overfitting, typically by adding a penalty on the size of the coefficients while fitting the function to the training data set.

- Multicollinearity: The occurrence of intercorrelations between two or more independent variables.

- Goodness of fit: A description of how well a statistical model fits a set of observations.

- Odds ratio: A measure of the strength of association between two events.

- Log-likelihood function: Evaluates a statistical model’s goodness of fit.

- Hosmer–Lemeshow test: A test that assesses whether observed event rates match expected event rates.
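As a small worked example of the odds ratio defined above, this sketch computes it from a 2×2 table of counts. The counts and the group labels are invented for illustration.

```python
def odds_ratio(a, b, c, d):
    """Odds ratio for a 2x2 table of counts (b, c, d must be > 0).

                     outcome: yes   outcome: no
        group A           a              b
        group B           c              d

    Odds of "yes" in group A = a / b; in group B = c / d.
    The odds ratio compares them: 1.0 means no association.
    """
    return (a / b) / (c / d)

# Hypothetical counts: 30 of 50 people in group A had the outcome,
# versus 10 of 50 in group B.
print(odds_ratio(30, 20, 10, 40))  # (30/20) / (10/40) = 1.5 / 0.25 = 6.0
```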

How logistic regression works

You can think of logistic regression as a process or pipeline:

Raw data ➝ clean data ➝ feature engineering ➝ model training ➝ probability prediction ➝ yes/no output

Here’s how it works behind the scenes:

Step 1: Take the input data (independent variables)

These factors influence the outcome; examples include age, income, and time on site. In technical terms, these are your independent variables (often labeled X₁, X₂, X₃, and so on).

Step 2: Assign importance (coefficients or weights)

The model learns the importance of each input. It gives each one a weight, kind of like saying, “This factor matters more than that one.”

Step 3: Combine everything into a single score

The model multiplies each input by its weight, adds the results together, and adds an intercept (bias) term. This yields a score, technically called a “linear combination.” Think of it like mixing ingredients to make a dish: the model combines your inputs into one value.

Step 4: Convert the score to a probability (sigmoid function)

The combined score could be any number, positive or negative, but we want a probability between 0 and 1. To achieve this, logistic regression uses a special formula called the logistic (sigmoid) function, which compresses the score into a value such as 0.2 (low probability) or 0.9 (high probability).

Step 5: Make a prediction (yes or no)

Finally, the model draws a line in the sand, usually at 0.5. If the probability is above 0.5, it predicts yes (e.g., the customer will buy). If it’s below 0.5, it predicts no.

This whole process transforms raw data into a clear, interpretable result. It also gives you the probability behind the decision, so you know how confident the model is.
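The steps above can be sketched in a few lines of Python. The feature names (`age`, `income_k`, `minutes_on_site`), the weights, and the intercept are all hypothetical and chosen only for illustration. In a real model, they are learned from training data (Step 2) rather than written by hand.

```python
import math

# Step 2 (hypothetical): weights a trained model might have learned.
WEIGHTS = {"age": 0.02, "income_k": 0.01, "minutes_on_site": 0.08}
INTERCEPT = -3.0

def predict(customer, threshold=0.5):
    """Return (probability, decision) for one customer."""
    # Step 3: linear combination = intercept + sum of weight * input.
    score = INTERCEPT + sum(WEIGHTS[name] * customer[name] for name in WEIGHTS)
    # Step 4: squash the score into a probability with the sigmoid.
    probability = 1.0 / (1.0 + math.exp(-score))
    # Step 5: predict "yes" when the probability is at or above the threshold.
    return probability, probability >= threshold

for minutes in (12, 40):
    prob, will_buy = predict({"age": 35, "income_k": 60, "minutes_on_site": minutes})
    print(f"{minutes} min on site: P(buy) = {prob:.2f} -> {'yes' if will_buy else 'no'}")
```

Moving `threshold` away from 0.5 is a design choice. It trades false positives against false negatives without changing the learned weights.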

Now that you understand how logistic regression makes predictions, let’s explore the key assumptions that must be met for it to work correctly.

What are the key assumptions behind logistic regression?

Logistic regression relies on several assumptions, and they’re integral to using it correctly for making predictions and solving classification problems. The following are the main assumptions:

- There is little to no multicollinearity among the independent variables.

- The independent variables are linearly related to the log odds, log(p/(1-p)).

- The dependent variable is dichotomous, meaning it falls into two distinct categories. This applies only to binary logistic regression, which is discussed later.

- There are no non-meaningful variables, as they may lead to errors.

- The sample size is large, which is integral to getting reliable results.

- There are no extreme outliers.

What’s a logistic function?

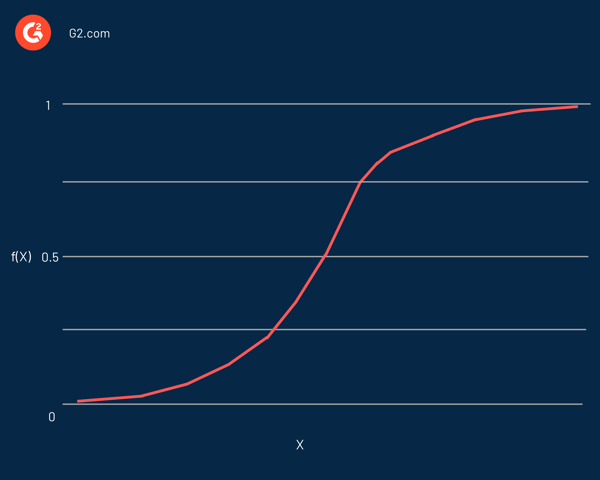

Logistic regression is named after the function at its heart, the logistic function. Statisticians originally used it to describe the properties of population growth. The sigmoid function and the logit function are variations of the logistic function; the logit function is the inverse of the standard logistic function.

In effect, it’s an S-shaped curve that can map any real number into a value between 0 and L (between 0 and 1 for the standard logistic function), but never exactly at these limits. It’s represented by the equation:

$$f(x) = \frac{L}{1 + e^{-k(x - x_0)}}$$

In this equation:

- f(x) is the output of the function

- L is the curve’s maximum value

- e is the base of the natural logarithm

- k is the steepness of the curve

- x is the input value (any real number)

- x₀ is the x value of the sigmoid’s midpoint

If the input is a large negative number, the output is close to zero. On the other hand, if the input is a large positive number, the output is close to the maximum value L (one, for the standard logistic function).
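The general formula translates directly into Python. This is a small sketch. The parameter names mirror the symbols above, and the defaults L = 1, k = 1, x₀ = 0 give the standard sigmoid.

```python
import math

def logistic(x, L=1.0, k=1.0, x0=0.0):
    """Generalized logistic curve f(x) = L / (1 + e^(-k(x - x0))).

    L  : maximum value (upper asymptote)
    k  : steepness of the curve
    x0 : x value of the midpoint, where f(x0) = L / 2
    """
    t = -k * (x - x0)
    if t > 700:  # e^t would overflow a float; the curve is effectively 0 here
        return 0.0
    return L / (1.0 + math.exp(t))

# Large negative inputs approach 0, large positive inputs approach L.
for x in (-10, -2, 0, 2, 10):
    print(x, round(logistic(x), 4))
```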

Logistic regression is represented similarly to linear regression, which is defined using the equation of a straight line. A notable difference is that the logistic model’s output is a probability between 0 and 1, which is then converted into a binary value (0 or 1), rather than an unbounded numerical value.

Here’s an example of a logistic regression equation:

$$y = \frac{e^{b_0 + b_1 x}}{1 + e^{b_0 + b_1 x}}$$

In this equation:

- y is the predicted value (the output): the predicted probability that the outcome is 1

- b0 is the bias (or the intercept term)

- b1 is the coefficient for the input

- x is the predictor variable (or the input)

The dependent variable is assumed to follow a Bernoulli distribution. The coefficients are estimated with maximum likelihood estimation (MLE), which in practice is carried out with optimization methods such as gradient descent or stochastic gradient descent.
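MLE defines which coefficients are best, and gradient descent is one way to find them. Below is a minimal sketch that fits b0 and b1 for a single feature by batch gradient descent on the average negative log-likelihood. The study-hours data, learning rate, and epoch count are hypothetical choices, and the sketch omits regularization and convergence checks.

```python
import math

def fit_logistic_gd(xs, ys, lr=0.1, epochs=5000):
    """Fit b0 (intercept) and b1 (slope) for a single feature by batch
    gradient descent on the average negative log-likelihood.
    A sketch: no regularization, feature scaling, or convergence check."""
    b0, b1 = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad0 = grad1 = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))  # predicted P(y = 1)
            error = p - y  # gradient of the per-example loss w.r.t. the score
            grad0 += error
            grad1 += error * x
        b0 -= lr * grad0 / n
        b1 -= lr * grad1 / n
    return b0, b1

# Hypothetical data: hours studied and whether the student passed (1) or not (0).
hours = [0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0, 4.5, 5.0]
passed = [0, 0, 0, 1, 0, 1, 0, 1, 1, 1]

b0, b1 = fit_logistic_gd(hours, passed)
print(f"b0 = {b0:.2f}, b1 = {b1:.2f}")
print(f"P(pass | 4 hours) = {1.0 / (1.0 + math.exp(-(b0 + b1 * 4.0))):.2f}")
```

Stochastic gradient descent would instead update the coefficients after each example (or small batch) rather than after a full pass over the data.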

As with other classification algorithms, like k-nearest neighbors, a confusion matrix is used to evaluate the accuracy of the logistic regression algorithm.

Did you know? Logistic regression is part of a larger family of generalized linear models (GLMs).

Just as it’s important to evaluate a classifier’s performance, it’s equally important to understand why the model classified an observation in a particular way. In other words, we need the classifier’s decisions to be interpretable.

Although interpretability isn’t easy to define, its primary intent is that humans should know why an algorithm made a particular decision. In the case of logistic regression, it can be combined with statistical tests, such as the Wald test or the likelihood ratio test, for enhanced interpretability.
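As one example of such a test, the Wald test divides a coefficient by its standard error. Under the null hypothesis that the true coefficient is zero, the result is approximately standard normal. The sketch below assumes you already have both numbers (in practice they come from the fitting software's output); the values used are hypothetical.

```python
import math

def wald_test(coef, std_err):
    """Wald z statistic and two-sided p-value for one coefficient.

    z = coef / std_err. Under the null hypothesis (true coef = 0), z is
    approximately standard normal, so p = P(|Z| >= |z|) = erfc(|z| / sqrt(2)).
    """
    z = coef / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical fitted coefficient and standard error.
z, p = wald_test(0.8, 0.3)
print(f"z = {z:.2f}, p = {p:.4f}")
```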

What are the types of logistic regression?

Logistic regression can be divided into different types based on the number of outcomes or categories of the dependent variable.

When we think of logistic regression, we probably think of binary logistic regression. Throughout most of this article, when we referred to logistic regression, we were specifically referring to binary logistic regression.

The following are the three main types of logistic regression.

Binary logistic regression

Binary logistic regression is the most commonly used form of logistic regression. It models the probability of a binary outcome, that is, an outcome that can take only two possible values, such as “yes” or “no,” “spam” or “not spam,” “pass” or “fail,” and “0” or “1.” The algorithm estimates the likelihood that a given input belongs to one of the two classes, based on one or more predictor variables.

This method is widely used across industries:

- Healthcare: Predicting whether a patient has a disease (yes/no).

- Finance: Estimating loan default risk (default/no default).

- Marketing: Predicting whether a customer will respond to a campaign.

Mathematically, binary logistic regression models the log-odds of the dependent variable as a linear combination of the independent variables. The log-odds are then transformed into a probability using the logistic (sigmoid) function.

Binary logistic regression is ideal when:

- The target variable is categorical with two levels.

- Predictor variables are continuous, categorical, or a mix of both.

- Interpretability and probability outputs are important.

Example: A spam classifier that takes features like email length, keyword frequency, and sender reputation and outputs the probability that the message is spam.

Multinomial logistic regression

Multinomial logistic regression is an extension of binary logistic regression used when the dependent variable has more than two unordered categories. Unlike binary logistic regression, where the outcome is either 0 or 1, multinomial regression handles three or more nominal (non-ordinal) classes.

Common examples include:

- Retail: Predicting customer choice among product categories (e.g., buying shoes, electronics, or clothing).

- Education: Identifying a student’s choice of major (e.g., STEM, Humanities, Business).

- Healthcare: Classifying types of disease (e.g., viral, bacterial, fungal).

The model estimates the probability of each category relative to a reference category. Conceptually, this resembles fitting a separate binary logistic model for each category versus the reference category. In practice, these equations are usually estimated jointly, and their scores are combined into probabilities that sum to one to make a final prediction.
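A common way to turn per-category scores into probabilities is the softmax function, with the reference category's score fixed at zero. The sketch assumes the linear scores have already been computed; the category names and numbers are hypothetical.

```python
import math

def multinomial_probs(scores, reference):
    """Convert per-category linear scores into probabilities (softmax).

    `scores` maps each non-reference category to its linear score
    (intercept + weights * inputs) relative to `reference`, whose score
    is fixed at 0. Then P(k) / P(reference) = exp(score_k).
    """
    all_scores = {reference: 0.0, **scores}
    top = max(all_scores.values())  # subtract the max to avoid overflow
    exps = {k: math.exp(s - top) for k, s in all_scores.items()}
    total = sum(exps.values())
    return {k: e / total for k, e in exps.items()}

# Hypothetical scores for one shopper, with "clothing" as the reference.
probs = multinomial_probs({"shoes": 0.5, "electronics": -1.0}, reference="clothing")
for category, p in probs.items():
    print(f"{category:12s} {p:.3f}")
```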

Multinomial logistic regression works best when:

- The outcome categories are nominal (no inherent order).

- The dataset has enough observations per category.

- Maximum likelihood estimation is feasible.

It’s also more computationally intensive than binary regression, but highly useful when there are multiple outcome choices that don’t follow a natural order.

Ordinal logistic regression

Ordinal logistic regression, also called ordinal regression, is used when the dependent variable consists of three or more ordered categories. The categories have a meaningful order, but the spacing between them is unknown: for instance, “Poor,” “Average,” “Good,” or customer ratings from 1 to 5 stars.

Use cases include:

- Surveys: Analyzing customer satisfaction (Very Dissatisfied to Very Satisfied).

- HR: Assessing performance levels (Below Expectations, Meets Expectations, Exceeds Expectations).

- Healthcare: Rating symptom severity (Mild, Moderate, Severe).

Unlike multinomial logistic regression, ordinal regression relies on the proportional odds assumption: each predictor’s effect on the log-odds is the same at every cut point between adjacent outcome groups. This assumption helps simplify the model and the interpretation of its coefficients.

Ordinal logistic regression is appropriate when:

- The outcome variable is categorical with a natural order.

- The predictor variables are categorical or continuous.

- You want to preserve the ordinal structure rather than flatten it into unrelated categories.

The model calculates the log-odds of being at or below a certain category and then applies the logistic function to compute cumulative probabilities. This makes it a powerful tool when both classification and ranking are important.
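The cumulative step can be sketched as follows. This assumes the parameterization logit P(Y ≤ j) = θⱼ − η, where η is the linear predictor and θⱼ are increasing thresholds; several statistics packages use this convention, while others flip the sign. The labels, thresholds, and η values are hypothetical.

```python
import math

def sigmoid(z):
    """Numerically stable standard logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def ordinal_probs(eta, thresholds):
    """Category probabilities under a proportional-odds (cumulative logit) model.

    Assumed parameterization: logit P(Y <= j) = thresholds[j] - eta, where eta
    is the linear predictor (weights * inputs) and thresholds are strictly
    increasing. K categories need K - 1 thresholds, and the same eta is used
    at every threshold; that shared eta is the proportional odds assumption.
    """
    cumulative = [sigmoid(t - eta) for t in thresholds] + [1.0]  # P(Y <= j)
    probs, previous = [], 0.0
    for c in cumulative:
        probs.append(c - previous)  # P(Y = j) = P(Y <= j) - P(Y <= j - 1)
        previous = c
    return probs

labels = ["Mild", "Moderate", "Severe"]  # hypothetical ordered outcome
cuts = [-0.5, 1.0]                       # hypothetical thresholds
for eta in (-1.0, 0.0, 2.0):             # hypothetical linear predictors
    probs = ordinal_probs(eta, cuts)
    print(eta, {lab: round(p, 3) for lab, p in zip(labels, probs)})
```

Raising η shifts probability toward the higher categories without changing their order, which is what keeps the ordinal structure intact.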

Did you know? An artificial neural network (ANN) can be viewed as a stack of many logistic regression classifiers.

When to use logistic regression?

Logistic regression is used to predict a categorical dependent variable. In other words, it’s used when the prediction is categorical: for example, yes or no, true or false, 0 or 1. The final predicted class is one of those values with no middle ground, even though the underlying predicted probability can be any value between 0 and 1.

Predictor variables can fall into any of the following categories:

- Continuous data: Data that can be measured on an infinite scale. It can take any value between two numbers. Examples are weight in pounds or temperature in Fahrenheit.

- Discrete, nominal data: Data that fits into named categories. A quick example is hair color: blond, black, or brown.

- Discrete, ordinal data: Data that fits into some form of order on a scale. An example is rating your satisfaction with a product or service on a scale of 1 to 5.

Logistic regression analysis is valuable for predicting the likelihood of an event. It helps determine the probabilities between any two classes.

In a nutshell, by looking at historical data, logistic regression can predict whether:

- An email is spam

- It’ll rain today

- A tumor is deadly

- An individual will buy a car

- An online transaction is fraudulent

- A candidate will win an election

- A group of users will buy a product

- An insurance policyholder will pass away before the policy term expires

- A promotional email recipient is a responder or a non-responder

In essence, logistic regression helps solve problems related to probability and classification. In other words, you can expect only classification and probability outcomes from logistic regression.

For example, it can be used to find the probability of something being “true or false” and to decide between two outcomes like “yes or no.”

A logistic regression model can also help classify data for extract, transform, and load (ETL) operations. Logistic regression shouldn’t be used without regularization if the number of observations is less than the number of features; otherwise, it may lead to overfitting.

Top 3 statistical analysis software

These tools can help you run logistic regression models, visualize results, and interpret coefficients, all without writing complex code.

- IBM SPSS Statistics for complex statistical data analysis in social sciences ($1069.2/year/user)

- SAS Viya for data mining, predictive modeling, and machine learning (pricing available on request)

- Minitab Statistical Software for quality improvement and educational purposes ($1851/year/user)

*These statistical analysis software solutions are top-rated in their category, according to G2’s Fall 2025 Grid Reports.

When to avoid logistic regression?

Logistic regression is simple, fast, and easy to interpret, but it’s not always the best tool. Here are situations where it might fall short:

1. When the relationship isn’t linear

Logistic regression assumes that the inputs relate linearly to the log-odds of the outcome. If the actual relationship is complex or nonlinear, the model might oversimplify and make inaccurate predictions.

2. With high-dimensional or small datasets

If you have more features (inputs) than observations (rows of data), logistic regression can overfit. That means it performs well on training data but poorly on new data. It needs a reasonable number of examples to generalize well.

3. When features are highly correlated

Multicollinearity (when two or more features move in tandem) can compromise the model’s ability to assign accurate weights. It becomes hard to know which variable is actually influencing the outcome.

4. When you need to capture complex patterns

Logistic regression creates straight-line (linear) decision boundaries. If your data is complicated, models like:

- Decision Trees: for branching, rule-based decisions

- Support Vector Machines (SVMs): for separating complex data

- Neural Networks: for deep, layered learning

…may be better suited.

Bottom line: Logistic regression is a great starting point, but it’s not a one-size-fits-all solution. Know its limits before choosing it as your go-to model.

What are the advantages of logistic regression?

Many of the advantages and disadvantages of the logistic regression model also apply to the linear regression model. One of the most significant advantages of logistic regression is that it not only classifies but also provides probabilities.

The following are some of the advantages of the logistic regression algorithm:

- Simple to understand, easy to implement, and efficient to train

- Performs well when the classes can be separated by a roughly linear boundary

- Good accuracy on smaller datasets

- Makes no assumptions about how the features are distributed within each class

- Indicates the direction of association (positive or negative)

- Useful for finding relationships between features and the outcome

- Tends to provide well-calibrated probabilities

- Less prone to overfitting on low-dimensional datasets

- Can be extended to multi-class classification

What are the common pitfalls in logistic regression, and how can you avoid them?

Logistic regression is a great first step into machine learning: it’s simple, fast, and often surprisingly effective. But like any tool, it has its quirks. Here are some common pitfalls beginners run into, and what you can do about them:

1. Assuming it works well with non-linear data

Logistic regression draws straight-line (linear) boundaries between classes. If your data has curves or complex patterns, it may underperform. You can try transforming features (e.g., log, polynomial), but models like decision trees or neural nets are often better for non-linear problems.

2. Using it with high-dimensional data and small datasets

If you have more features than observations, the model might overfit, meaning it learns noise instead of useful patterns. This results in poor performance on new data. Use regularization (such as L1 or L2), or reduce the number of features through feature selection or dimensionality reduction.

3. Complete separation of classes

When one feature perfectly predicts the outcome (e.g., all “yes” rows have income above $100K and all “no” rows don’t), logistic regression struggles: the weight for that feature goes toward infinity. This is called complete separation, and the model may fail to converge. Use regularization or Bayesian logistic regression to constrain the weights.
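The sketch below illustrates complete separation on a tiny, made-up dataset. To keep it short, it fits a single weight with no intercept by gradient descent. Without a penalty, the weight keeps growing the longer you train. With an L2 penalty (strength 0.1, a hypothetical choice), it settles at a finite value.

```python
import math

def fit_slope(xs, ys, l2=0.0, lr=0.5, epochs=2000):
    """Gradient descent on the mean negative log-likelihood for a single
    weight w (no intercept, to keep the sketch short), plus an optional
    L2 penalty of (l2 / 2) * w**2."""
    w = 0.0
    n = len(xs)
    for _ in range(epochs):
        grad = sum((1.0 / (1.0 + math.exp(-w * x)) - y) * x
                   for x, y in zip(xs, ys)) / n
        grad += l2 * w  # derivative of the L2 penalty
        w -= lr * grad
    return w

# Perfectly separated toy data: every negative x is class 0, every positive x is class 1.
xs = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]
ys = [0, 0, 0, 1, 1, 1]

for epochs in (1000, 4000):
    plain = fit_slope(xs, ys, epochs=epochs)
    ridge = fit_slope(xs, ys, l2=0.1, epochs=epochs)
    print(f"{epochs:5d} epochs: unregularized w = {plain:.2f}, L2-regularized w = {ridge:.2f}")
```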

4. Ignoring multicollinearity

If two or more input features are highly correlated, the model can’t clearly distinguish their individual effects. This makes the coefficient estimates unstable and hard to interpret. Check for multicollinearity using the Variance Inflation Factor (VIF), and drop or combine redundant features.
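In general, VIF for feature j is 1 / (1 − R²ⱼ), where R²ⱼ comes from regressing feature j on all the other features. The sketch below covers only the simplest case of exactly two features, where R²ⱼ reduces to the squared correlation between them. The income and spending numbers are hypothetical. Values above roughly 5 to 10 are a commonly cited warning sign.

```python
import math

def pearson_r(a, b):
    """Pearson correlation of two equal-length numeric sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)

def vif_two_features(a, b):
    """VIF for a model with exactly two predictors.

    With only two features, the R^2 from regressing one on the other
    equals their squared correlation, so both features share one VIF.
    """
    r2 = pearson_r(a, b) ** 2
    return math.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)

income = [30, 45, 50, 62, 70, 85, 90, 110]   # hypothetical, in thousands
spending = [12, 18, 21, 25, 27, 35, 36, 45]  # hypothetical, moves with income
print(round(vif_two_features(income, spending), 1))
```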

5. Sensitivity to outliers

Logistic regression can be thrown off by extreme values, especially in small datasets. Outliers can disproportionately influence the weights, leading to skewed predictions. Use robust scaling methods, or remove or transform extreme values.
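One common robust option is to center each value on the median and divide by the interquartile range (IQR). scikit-learn's `RobustScaler` works along similar lines. The sketch below uses only Python's `statistics` module (Python 3.8+) and compares the result with ordinary mean/standard-deviation scaling. The income figures are hypothetical, with 900 as the outlier.

```python
import statistics

def robust_scale(values):
    """Center on the median and divide by the interquartile range (IQR).

    Unlike mean/standard-deviation scaling, a few extreme values barely
    move the median or the IQR.
    """
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    if iqr == 0:
        raise ValueError("IQR is zero, so robust scaling is undefined")
    median = statistics.median(values)
    return [(v - median) / iqr for v in values]

def zscore(values):
    """Ordinary standardization, shown for comparison."""
    mean, sd = statistics.fmean(values), statistics.pstdev(values)
    return [(v - mean) / sd for v in values]

income_k = [42, 48, 51, 55, 58, 61, 65, 900]  # hypothetical; 900 is an outlier
print([round(v, 2) for v in robust_scale(income_k)])
print([round(v, 2) for v in zscore(income_k)])
```

With z-scores, the single outlier inflates the standard deviation and squeezes the typical values together. Robust scaling keeps them spread out.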

6. Misinterpreting probabilities and coefficients

It’s easy to mistake log-odds for probabilities or to read coefficients as direct effects on the output. Logistic regression coefficients act on the log-odds, not the raw probability. Convert coefficients to odds ratios (by exponentiating them) to understand the direction and strength of each effect.
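A short sketch makes the difference visible. Exponentiating a coefficient gives the odds ratio for a one-unit increase, but the resulting change in probability depends on the starting probability. The coefficient value 0.7 is hypothetical.

```python
import math

def odds_ratio_from_coef(coef):
    """Factor by which the odds multiply for a one-unit increase in the feature."""
    return math.exp(coef)

def prob_after_one_unit(p_before, coef):
    """Probability after a one-unit increase, starting from probability p_before."""
    odds_after = p_before / (1.0 - p_before) * math.exp(coef)
    return odds_after / (1.0 + odds_after)

coef = 0.7  # hypothetical coefficient
print(f"odds ratio = {odds_ratio_from_coef(coef):.2f}")
# The same coefficient moves the probability by different amounts
# depending on where you start.
for p in (0.10, 0.50, 0.90):
    print(f"P = {p:.2f} -> {prob_after_one_unit(p, coef):.2f}")
```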

Many of these pitfalls are easy to miss when you’re starting out. The good news? Once you know what to watch for, logistic regression becomes a reliable and interpretable workhorse for many binary classification problems.

How to build a logistic regression model

If you’re curious about how logistic regression works in practice, here’s a beginner-friendly walkthrough of how a simple model is built, from raw data to prediction.

1. Data cleaning to fix missing or messy data

Before anything else, the data needs to be cleaned. This includes:

- Removing missing or incorrect values

- Standardizing formats (e.g., “yes” vs. “YES” vs. “Y”)

- Ensuring the outcome variable is binary (0 or 1)

Clean data is essential: even the best algorithm can’t fix bad input.
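The cleaning step might look like the sketch below. The records, the field names `age` and `bought`, and the accepted yes/no spellings are all hypothetical. Dropping bad rows is one choice; imputing missing values is a common alternative.

```python
# Hypothetical raw records; "bought" holds inconsistent yes/no spellings.
RAW = [
    {"age": "34", "bought": "yes"},
    {"age": "51", "bought": "YES"},
    {"age": "", "bought": "N"},        # missing age -> dropped
    {"age": "27", "bought": "no"},
    {"age": "45", "bought": "maybe"},  # unrecognized label -> dropped
    {"age": "38", "bought": "Y"},
]

YES = {"yes", "y", "true", "1"}
NO = {"no", "n", "false", "0"}

def clean(records):
    """Drop rows with missing or invalid values and map the outcome to 0/1."""
    cleaned = []
    for row in records:
        label = row["bought"].strip().lower()  # standardize the format
        if not row["age"].strip() or label not in YES | NO:
            continue  # missing age or unrecognized outcome label
        try:
            age = int(row["age"])
        except ValueError:
            continue  # non-numeric age counts as incorrect
        cleaned.append({"age": age, "bought": 1 if label in YES else 0})
    return cleaned

print(clean(RAW))
```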

2. Feature engineering to prepare inputs for the model

Features are the inputs to your model (like age, income, or purchase history). Some may need to be transformed:

- Categorical variables like “country” or “subscription type” need to be encoded as numbers

- Scaling may be applied to numerical variables so that one feature doesn’t overpower the others
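Both transformations can be sketched with the standard library: one-hot encoding for a categorical column and z-score standardization for a numeric one. The columns and values are hypothetical.

```python
import statistics

def one_hot(values):
    """Encode a categorical column as 0/1 indicator columns, one per category."""
    categories = sorted(set(values))
    return categories, [[1 if v == c else 0 for c in categories] for v in values]

def standardize(values):
    """Rescale to mean 0 and (population) standard deviation 1."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd for v in values]

countries = ["US", "DE", "US", "IN"]    # hypothetical categorical feature
incomes = [52000, 61000, 48000, 39000]  # hypothetical numeric feature

cats, encoded = one_hot(countries)
print(cats, encoded)
print([round(z, 2) for z in standardize(incomes)])
```

When the model includes an intercept, one indicator column is often dropped. The full set always sums to 1 and would otherwise be perfectly collinear with the intercept (the "dummy variable trap").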

3. Model fitting to train the algorithm

Once the data is ready, you train (fit) the model to learn patterns. This involves:

- Feeding the data to the logistic regression algorithm

- The model calculating weights (coefficients) for each input

- The model using these weights to estimate the probability of each outcome

4. Evaluation to test how well the model performs

After training, we test how well the model performs. Common metrics include:

- Accuracy: How often it predicts correctly

- Precision/Recall: Precision is the share of predicted “yes” cases that are truly “yes”; recall is the share of actual “yes” cases the model catches

- Confusion matrix: A table showing correct vs. incorrect predictions
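These metrics are easy to compute by hand, as this sketch shows for 0/1 labels. The labels and predictions are hypothetical. scikit-learn offers ready-made equivalents in `sklearn.metrics`, such as `confusion_matrix`, `precision_score`, and `recall_score`.

```python
def confusion_counts(y_true, y_pred):
    """Return (TP, FP, FN, TN) for 0/1 labels, where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    return tp, fp, fn, tn

def metrics(y_true, y_pred):
    """Accuracy, precision, and recall computed from the confusion counts."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0  # of predicted "yes", share correct
    recall = tp / (tp + fn) if tp + fn else 0.0     # of actual "yes", share found
    return accuracy, precision, recall

y_true = [1, 0, 1, 1, 0, 0, 1, 0]  # hypothetical labels
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]  # hypothetical model predictions
print(confusion_counts(y_true, y_pred))
print(metrics(y_true, y_pred))
```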

You don’t need to be a data scientist to start experimenting; tools like Python’s scikit-learn make it easier than ever to build simple models.

Logistic regression: Frequently asked questions

Q: Is logistic regression supervised or unsupervised?

Logistic regression is a supervised learning algorithm. It learns from labeled training data to classify outcomes.

Q: What’s the difference between logistic and linear regression?

Linear regression predicts continuous outcomes (like price), whereas logistic regression predicts binary outcomes (like yes/no).

Q: What are the assumptions of logistic regression?

The key assumptions include linearity of the log-odds, absence of multicollinearity, and a binary dependent variable (for binary logistic regression).

Q: Can logistic regression handle more than two classes?

Yes. That’s called multinomial or ordinal logistic regression, depending on whether the classes have an order.

Q: How do I know if logistic regression is the right choice?

It’s ideal when your target variable is categorical (yes/no) and your data meet assumptions such as linearity in the log-odds and low multicollinearity.

When life gives you choices, use logistic regression

Some might say life isn’t binary, but more often than not, it is. Whether you’re deciding to send that email campaign or skip dessert, many choices boil down to simple yes-or-no decisions. That’s exactly where logistic regression shines.

It helps us make sense of uncertainty by using data rather than relying on gut instinct. From predicting customer churn to flagging fraudulent transactions, logistic regression enables businesses to make smarter, more informed decisions.

Discover the top predictive analytics tools that simplify logistic regression and help you build, train, and deploy prediction models in less time.

This article was originally published in 2021. It has been updated with new information.