In this article, I'll take you on a journey through the last 18 months of developing our demo data pipeline.

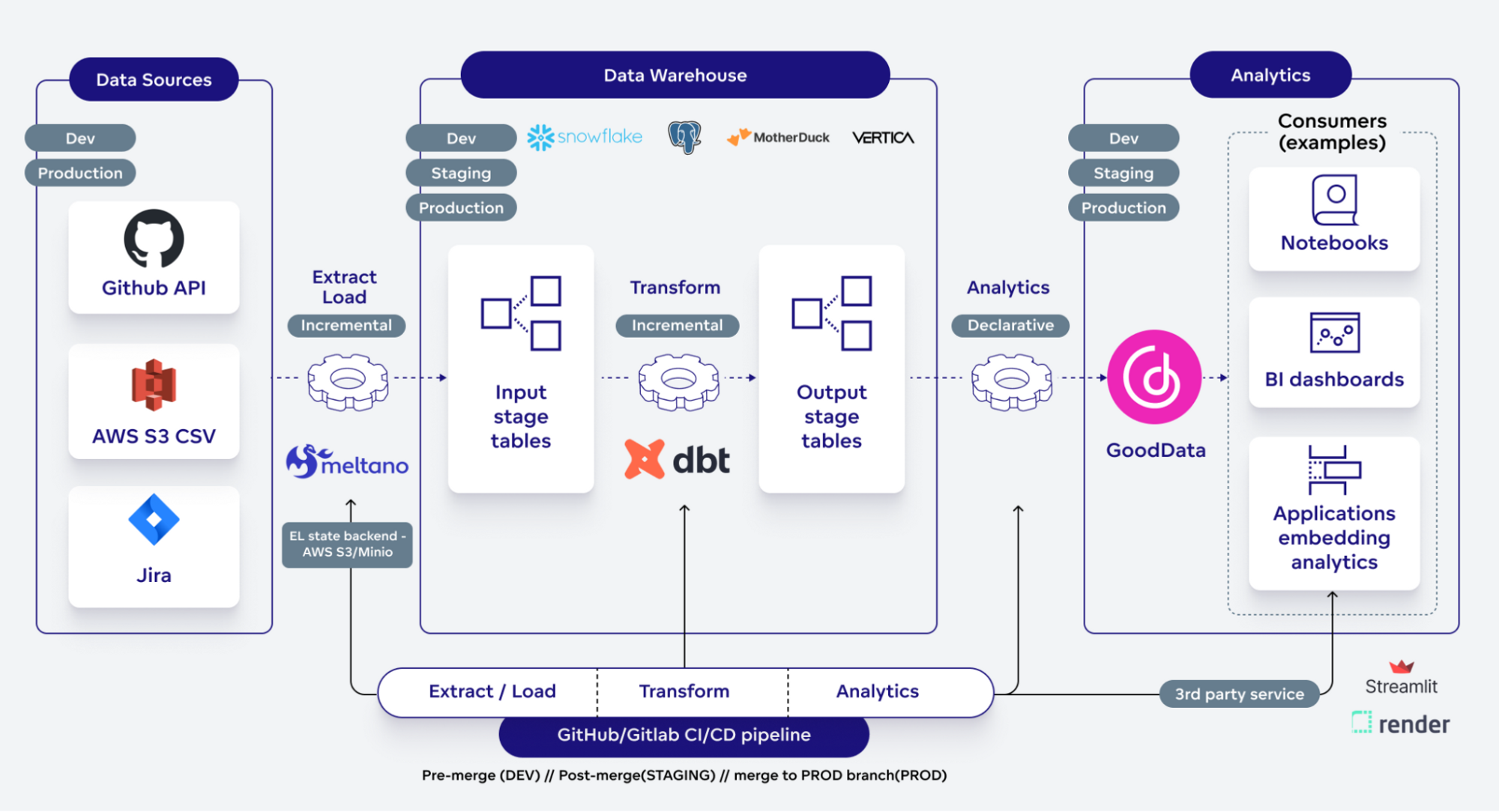

Everything started when my colleague Patrik Braborec published the article How to Automate Data Analytics Using CI/CD. Since then, I have followed up with two articles: Extending CI/CD data pipeline with Meltano and, more recently, Ready, set, integrate: GoodData-dbt integration is production-ready! The data pipeline has matured since the beginning of this journey, so let me show you its current state. Let's call it CI/CD Data Pipeline Blueprint v1.0.

The pipeline follows these important principles:

- Simplicity - Uses docker-compose up or Makefile targets for easy onboarding. Even a simple pull request can introduce a new data use case.

- Openness - Flexibility to swap source connectors or loaders (DWH).

- Consistency - A single pull request can update every step: extract, load, transform, analytics, and custom data apps.

- Safety - Ensures gradual rollout through DEV, STAGING, and PROD environments, with extensive automated tests.

In the article, we'll:

- Deep dive into the above principles.

- Demonstrate end-to-end change management on a particular pull request.

- Document the limitations and potential follow-ups.

The blueprint source code is open-sourced under the Apache license in this repository.

Note: some of you may notice that the repository moved from GitLab to GitHub. I decided to provide a GitHub Actions alternative to GitLab CI. You can look forward to a follow-up article with a comprehensive comparison of these two major vendors.

I'm a big fan of easy onboarding; it's the heart of user/developer experience! That's why we have two onboarding options:

- Docker-compose

- Makefile targets

Go for the simplest route by running "docker-compose up -d". This command starts all essential long-running services, followed by executing the extract, load, transform, and analytics processes within the local setup.

Alternatively, executing make <target> commands, like make extract_load, does the same trick. This works across various environments, local or cloud-based.

To enhance onboarding further, we provide a set of example .env files for each environment (local, dev, staging, prod). To switch environments, simply execute source .env.xxx.
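A .env file is just a list of variable assignments, which is why sourcing it is enough to switch environments. As a minimal sketch, assuming simple `KEY=VALUE` lines (optionally prefixed with `export`), the Python below parses such a file and applies it to the current process. The variable names in the example (`DBT_TARGET`, `GOODDATA_HOST`) are hypothetical and not taken from the blueprint's actual .env files.

```python
import os


def parse_env_file(text: str) -> dict:
    """Parse simple KEY=VALUE lines, as found in a .env file."""
    env = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments
        if not line or line.startswith("#"):
            continue
        # Allow the shell-style "export KEY=VALUE" form
        if line.startswith("export "):
            line = line[len("export "):].strip()
        key, sep, value = line.partition("=")
        if not sep:
            continue  # not an assignment; ignore it
        # Drop surrounding quotes; no shell expansion is attempted
        env[key.strip()] = value.strip().strip('"').strip("'")
    return env


def activate(env: dict) -> None:
    """Export the parsed variables into this process's environment."""
    os.environ.update(env)


# Hypothetical contents of a .env.dev file
EXAMPLE_DEV_ENV = """
# DEV environment
export DBT_TARGET=dev
export GOODDATA_HOST="https://dev.example.com"
"""

if __name__ == "__main__":
    activate(parse_env_file(EXAMPLE_DEV_ENV))
    print(os.environ["DBT_TARGET"])
```

Unlike `source`, which changes the calling shell, this only affects the Python process and the child processes it starts, so it suits a script that launches the extract/load and transform steps itself.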

Now, you might wonder where the pipeline is. Well, I've got you covered with two exemplary pipeline setups:

- GitHub Actions

- GitLab CI

Feel free to fork the repository and reuse the pipeline definition. Since it's a set of straightforward YAML definitions, I believe everyone can understand it and tweak it to their needs. I've personally put them to the test with our team for internal analytics purposes, and the results were impressive! 😉

Openness

Meltano, which seamlessly integrates Singer.io in the middle of the extract/load process, revolutionizes switching both extractors (taps) and loaders (targets). Fancy a different Salesforce extractor? Just tweak four lines in the meltano.yaml file, and voilà!
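An extractor entry in meltano.yaml carries a plugin `name`, a `variant`, and a `pip_url`, so switching implementations is mostly a matter of editing those fields. The sketch below mirrors such an entry as a Python dict; the variant names and repository URLs are hypothetical placeholders, and the helper `swap_extractor` is illustrative, not part of Meltano.

```python
from copy import deepcopy

# Mirrors the structure of an extractor entry in meltano.yaml.
# Variant names and URLs are hypothetical placeholders.
PROJECT = {
    "plugins": {
        "extractors": [
            {
                "name": "tap-salesforce",
                "variant": "vendor-a",
                "pip_url": "git+https://github.com/vendor-a/tap-salesforce.git",
                "config": {"start_date": "2023-01-01T00:00:00Z"},
            }
        ]
    }
}


def swap_extractor(project: dict, name: str, variant: str, pip_url: str) -> dict:
    """Return a copy of the project with one extractor pointed at another implementation."""
    updated = deepcopy(project)  # leave the original definition untouched
    for plugin in updated["plugins"]["extractors"]:
        if plugin["name"] == name:
            plugin["variant"] = variant
            plugin["pip_url"] = pip_url
            return updated  # name and config stay as they were
    raise KeyError(f"extractor {name!r} is not defined")


if __name__ == "__main__":
    new_project = swap_extractor(
        PROJECT,
        "tap-salesforce",
        "vendor-b",
        "git+https://github.com/vendor-b/tap-salesforce.git",
    )
    print(new_project["plugins"]["extractors"][0]["pip_url"])
```

Because the plugin keeps its name, steps that refer to it by name do not need to change, provided the new variant accepts the same settings.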

Want to replace Snowflake with MotherDuck as your warehouse tech? A similar, hassle-free process! And as a bonus, you can run both options side by side, allowing for a detailed comparison 😉

Does some extractor/loader not quite meet your needs? No sweat. Fork it, fix it, point meltano.yaml to your version, and wait for it to be merged upstream.

What if your data source is unique, like for one of our new clients whose APIs are the only source? Well, here you can get creative! You can start from scratch, or use the Meltano SDK to craft a new extractor in a fraction of the usual time. Now that's what I'd call a productivity boost! 😉

But wait, there's more: what about data transformations? Databases have their own SQL dialects, so when you are dealing with hundreds of SQL transformations, how can you switch dialects effortlessly?

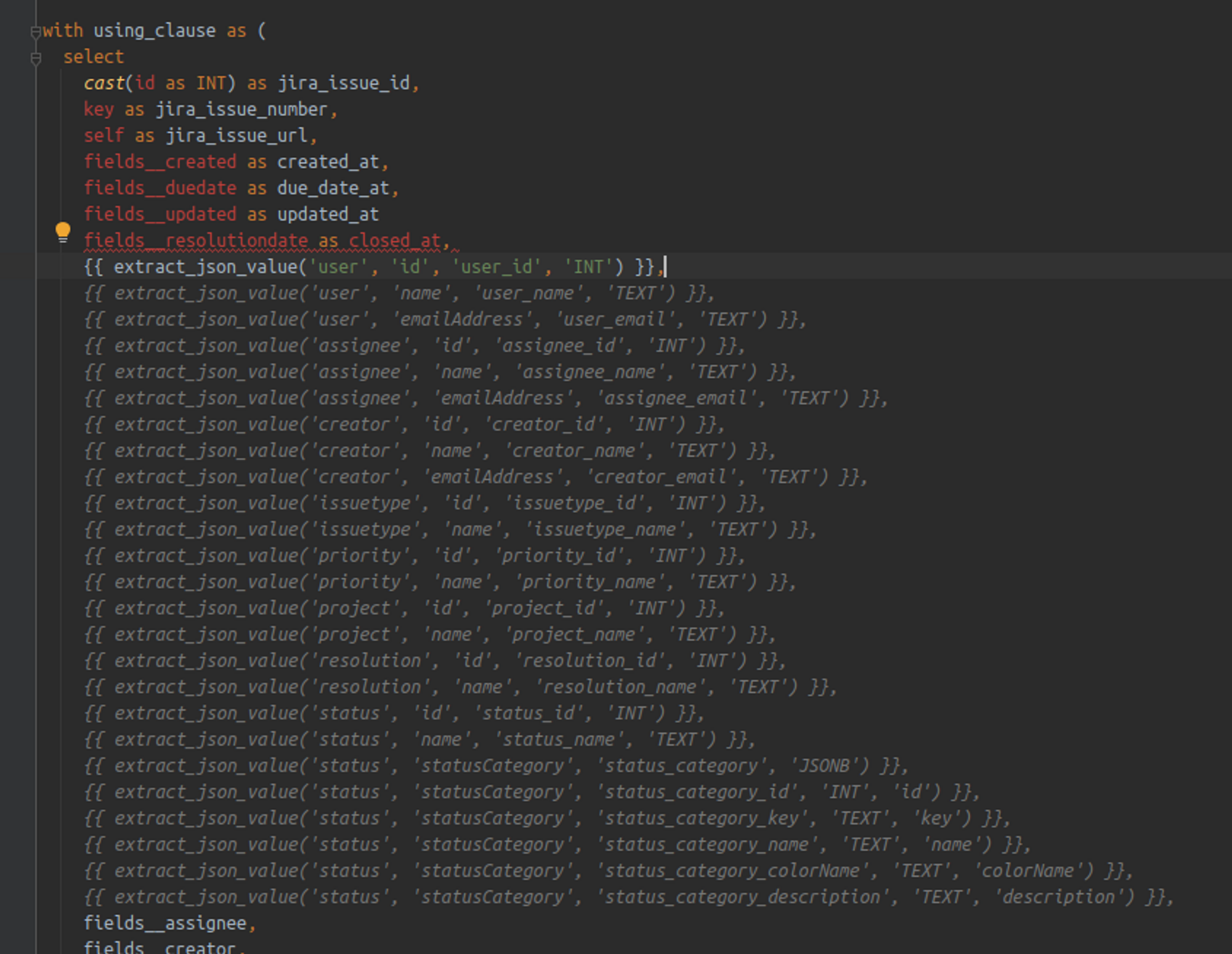

Enter dbt with its Jinja macros. Replace dialect-specific commands, like SYSDATE, with macros (e.g., {{ current_timestamp() }}). Stick to these macros consistently, and changing database technologies becomes a breeze. And if you need a macro that doesn't exist yet? Just write your own!

Right here is a fast instance of extracting values from JSON.
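In dbt, such a macro is written in Jinja and can route to a per-adapter implementation via `adapter.dispatch`. The Python sketch below only mimics that dispatch idea: one logical operation, "extract the text value at a JSON path", renders to different SQL per dialect. The function name `json_extract_path`, the three dialects covered, and the assumption that path keys are plain identifiers are illustrative choices, not the blueprint's actual macro.

```python
def json_extract_path(dialect: str, column: str, path: list) -> str:
    """Render dialect-specific SQL that extracts a text value from a JSON column.

    Assumes path elements are simple keys (no dots, quotes, or array indexes).
    """
    dotted = ".".join(path)
    if dialect == "snowflake":
        # Snowflake: GET_PATH on a VARIANT column, then cast to text
        return f"GET_PATH({column}, '{dotted}')::VARCHAR"
    if dialect == "postgres":
        # PostgreSQL: #>> takes a text[] path literal and returns text
        pg_path = ",".join(path)
        return f"{column} #>> '{{{pg_path}}}'"
    if dialect == "duckdb":
        # DuckDB (and MotherDuck): JSONPath-style argument, text result
        return f"json_extract_string({column}, '$.{dotted}')"
    raise ValueError(f"no implementation for dialect {dialect!r}")


if __name__ == "__main__":
    # Hypothetical Jira-like column holding issue fields as JSON
    for dialect in ("snowflake", "postgres", "duckdb"):
        print(dialect, "->", json_extract_path(dialect, "fields", ["status", "name"]))
```

Models call the one function (or macro) everywhere, so supporting another warehouse means adding one more branch rather than rewriting every query.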

This flexibility brings another immense benefit: drastically reduced vendor lock-in. Unhappy with your cloud data warehouse provider's costs? A few lines of YAML are all it takes to transition to a new provider.

Now onto GoodData

GoodData connects effortlessly with most databases and uses a database-agnostic analytics language, MAQL. So there's no need to overhaul your metrics/reports with a ton of SQL rewrites; MAQL stays consistent regardless of the database.

And what if you're eyeing a switch from GoodData to another BI platform? While we'd love to keep you with us, we're quite open in this case, no hard feelings. 😉

Firstly, we provide full access through our APIs and SDKs. Want to transfer your data to a different BI platform using, say, Python? No problem!

Secondly, GoodData offers Headless BI. This means that you can connect other BI tools to the GoodData semantic model and perform reporting through it. This is incredibly useful when the new BI tool lacks an advanced semantic model and you want to maintain complex metrics without duplication. It's also very useful for lengthy migrations or for consolidating multiple BI tools within your company. In short… Headless BI is your friend!

On a personal note, I'm actively involved in both the Meltano and dbt communities, contributing wherever I can, like speeding up Salesforce API discovery and adding Vertica support to Snowflake-Labs/dbt_constraints.

Consistency is the backbone of data pipeline management.

Ever made a single change in an SQL transformation, only for it to unexpectedly wreak havoc across several dashboards? Yeah, well, I have. It's a clear reminder of how interconnected every element of an end-to-end pipeline truly is. Ensuring consistent delivery of changes throughout the entire pipeline is not a nice-to-have; it's essential.

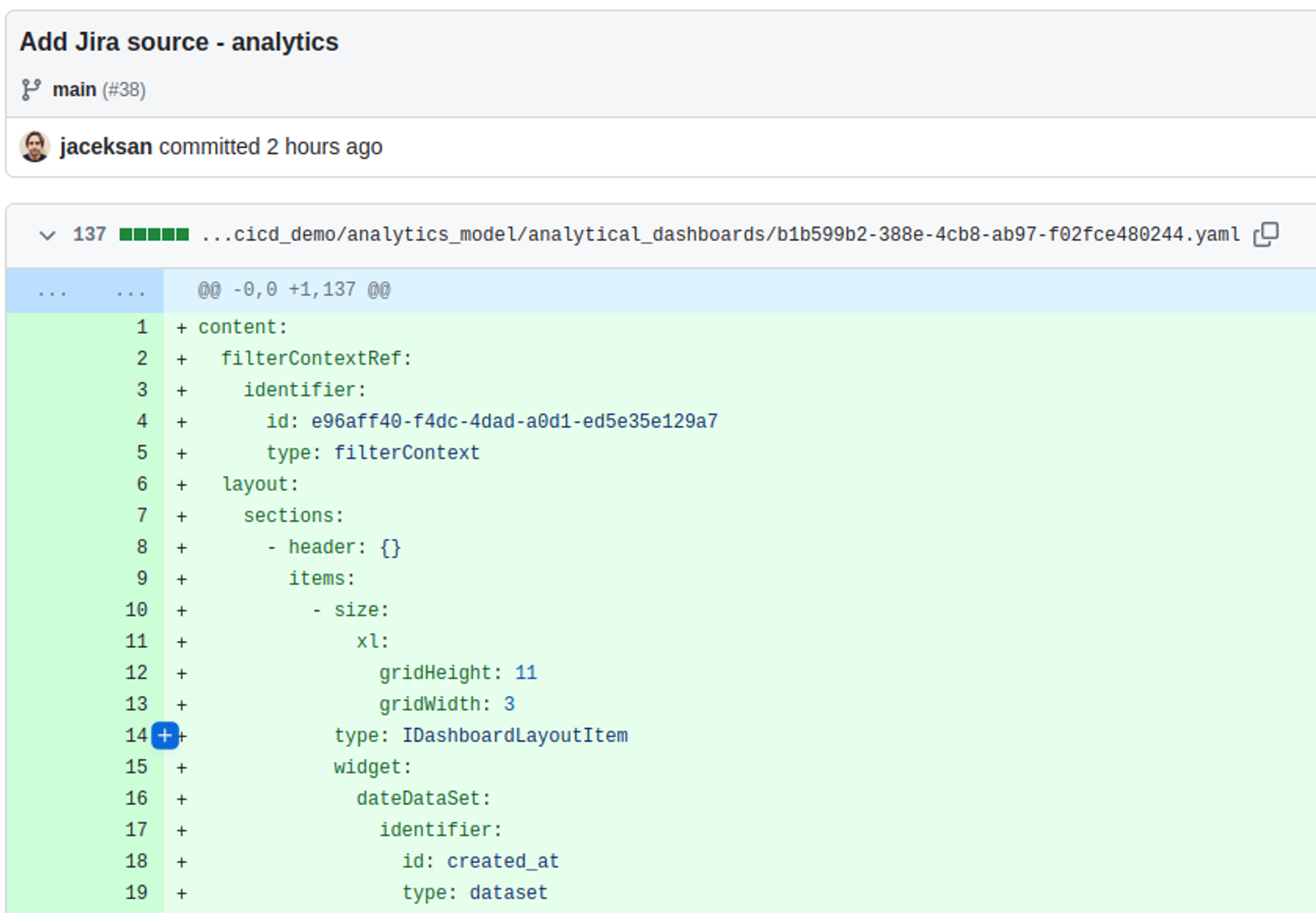

The go-to solution? Embed all artifacts in a version control system like git. It's not only about delivering changes consistently; it opens up a world of possibilities, like:

- Upholding the 'four eyes' principle with code reviews

- Tracking and auditing changes: know who did what

- The ability to smoothly roll back to any previous version if needed

- Conducting thorough scans of the source code for potential security vulnerabilities.

However, all tools must support the 'as-code' approach. The ability to implement changes through code, leveraging appropriate APIs, is key. Tools that rely solely on a UI experience for business users don't quite make the cut here.

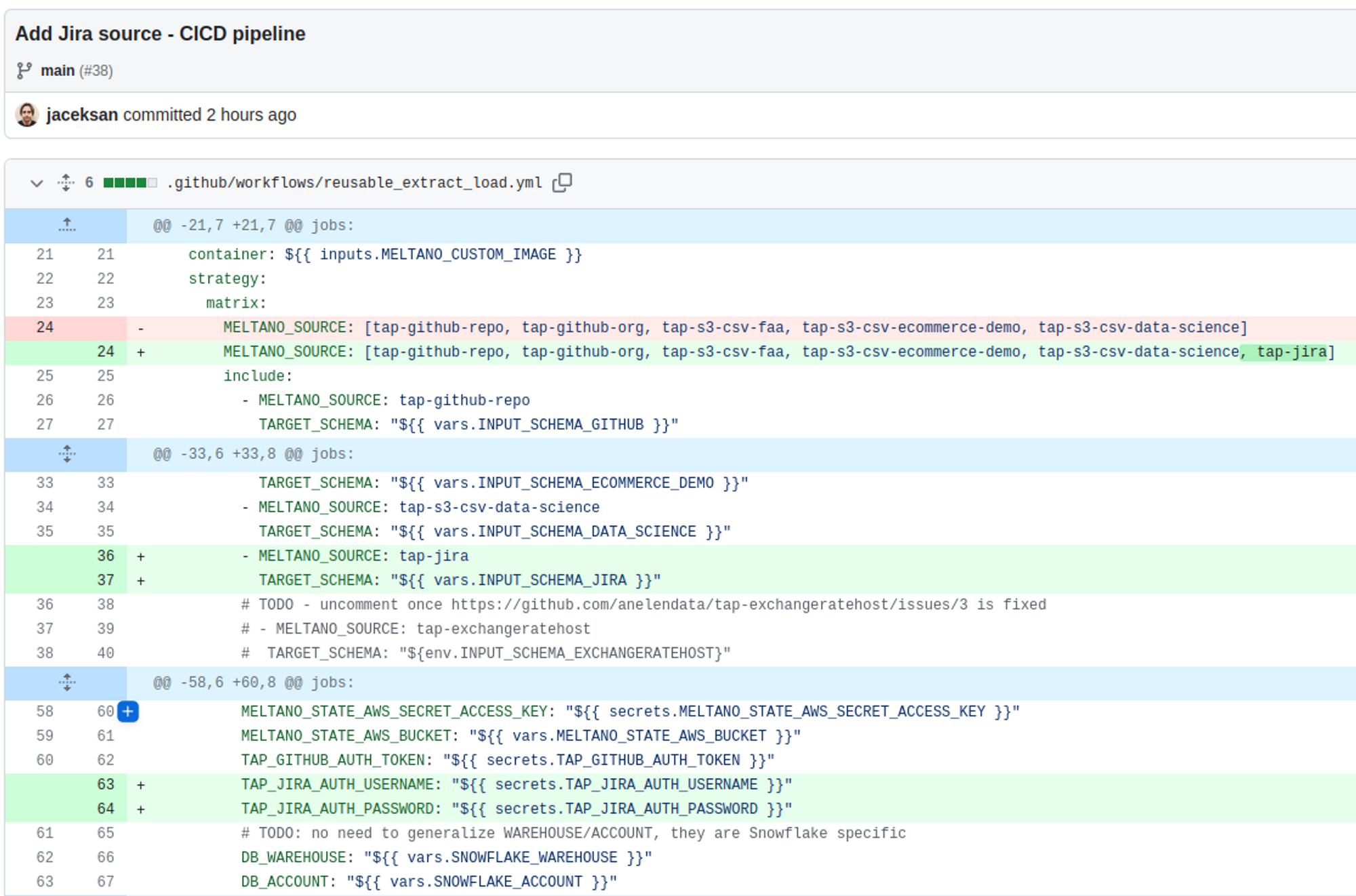

To give you a taste of how this works in practice, let's look at a recent pull request where we introduced a new source: Jira.

I'll dive into the specifics of this process in the demo chapter below.

Productivity thrives in a safe environment. Imagine being afraid to deliver a change to your data pipeline because you don't know what you might break. A nightmare scenario.

Firstly, as mentioned in the consistency chapter, there's significant value in being able to roll out a change end-to-end within a single pull request.

That's a big win, indeed! But that alone isn't enough. How can you ensure your changes won't disrupt the production environment, potentially even catching the CEO's eye during their dashboard review?

Let's look at my approach, based on a few key principles:

- Develop and test everything locally, either against a DEV cloud environment or in a completely self-contained setup on your laptop.

- Pull requests trigger CI/CD that deploys to the DEV environment. Here, tests run automatically in a real DEV setting. Code reviews are done by a different set of eyes. The reviewer can manually test the end-user experience in the DEV environment.

- After the merge, the change proceeds to the STAGING environment for further automated testing. Business end users get a chance to test everything thoroughly here.

- Only when all stakeholders are satisfied does the pull request advance to a special PROD branch. After merging, the changes are live in the PROD environment.

- At any point in this process, if issues arise, a new pull request can be opened to address them.
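The promotion flow above boils down to a mapping from CI events to target environments. A minimal sketch, assuming STAGING deployments follow merges to a `main` branch and PROD deployments follow merges to a branch named `prod` (both hypothetical names; the blueprint's pipelines may use different ones):

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical branch names used only for this illustration
STAGING_BRANCH = "main"
PROD_BRANCH = "prod"


@dataclass(frozen=True)
class CiEvent:
    kind: str    # "pull_request" or "push"
    branch: str  # branch pushed to (for a pull request: its target branch)


def target_environment(event: CiEvent) -> Optional[str]:
    """Decide which environment a CI run should deploy to, if any."""
    if event.kind == "pull_request":
        # Every open pull request is deployed and tested in DEV
        return "dev"
    if event.kind == "push" and event.branch == STAGING_BRANCH:
        # A merge to the main branch promotes the change to STAGING
        return "staging"
    if event.kind == "push" and event.branch == PROD_BRANCH:
        # Only a merge to the PROD branch reaches production
        return "prod"
    return None  # anything else deploys nowhere


if __name__ == "__main__":
    print(target_environment(CiEvent("push", "main")))
```

Keeping this decision in one place makes it easy to review: a change can reach PROD only through an explicit merge into the PROD branch.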

But how do we ensure each environment runs in isolation? There are several strategies:

- For extract/load (Meltano) and transform (dbt), ensure they are executed against live environments.

- Implement dbt tests; they're invaluable.

- Use the gooddata-dbt plugin CLI to run all defined reports.
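dbt's generic `unique` and `not_null` tests show why tests are so cheap to add: each one looks for offending records, and the test passes when none are found. The Python sketch below reproduces that idea over in-memory rows, so it is an analogy rather than how dbt executes tests (dbt compiles them to SQL that runs in the warehouse); the function names are hypothetical.

```python
from collections import Counter
from typing import Any, Dict, List

Row = Dict[str, Any]


def not_null_failures(rows: List[Row], column: str) -> List[Row]:
    """Rows where the column is missing or null."""
    return [row for row in rows if row.get(column) is None]


def unique_failures(rows: List[Row], column: str) -> List[Any]:
    """Non-null values that appear more than once, sorted for stable output."""
    counts = Counter(row[column] for row in rows if row.get(column) is not None)
    return sorted(value for value, n in counts.items() if n > 1)


def run_tests(rows: List[Row], column: str) -> Dict[str, bool]:
    """A test passes when it finds no failing records."""
    return {
        f"not_null_{column}": not not_null_failures(rows, column),
        f"unique_{column}": not unique_failures(rows, column),
    }


if __name__ == "__main__":
    # Made-up Jira-like issues: "PROJ-2" is duplicated and one key is null
    issues = [
        {"key": "PROJ-1", "status": "Done"},
        {"key": "PROJ-2", "status": "To Do"},
        {"key": "PROJ-2", "status": "In Progress"},
        {"key": None, "status": "Done"},
    ]
    print(run_tests(issues, "key"))
```

Running such checks in every environment, DEV included, is what turns a broken transformation into a failed pipeline run instead of a broken dashboard.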

As you can see, the combination of isolated environments, the four-eyes principle, the ability to test in live settings, and more all contribute to significantly reducing risk. In my view, this framework provides a safe working environment.

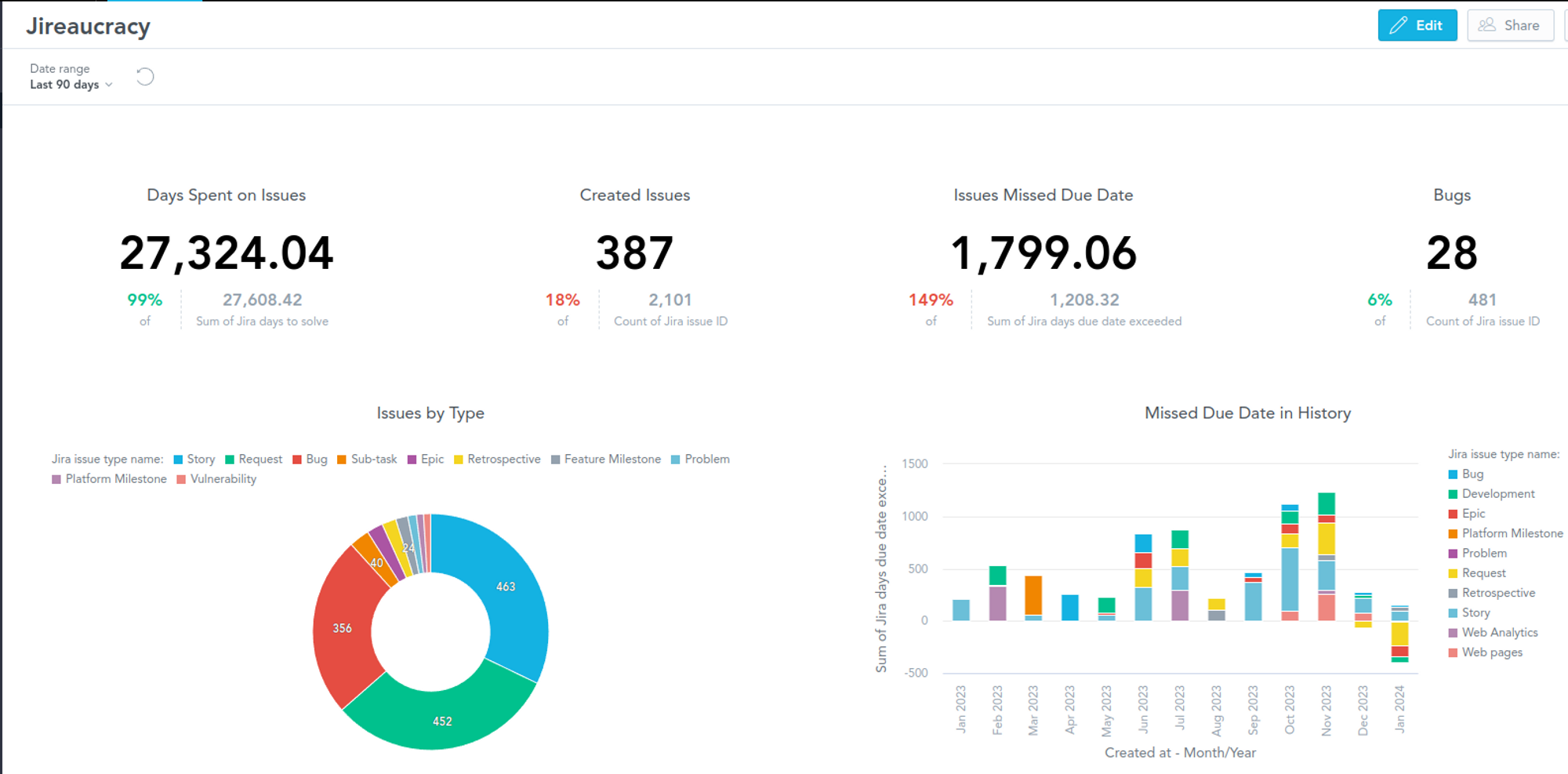

Recently, in our company, we've been struggling with Jira tickets, which led to a new term: 'Jireaucracy'. It's a blend of seriousness and humor, and we developers, well, we enjoy a good troll.

So, I went on a mission to crawl Jira data and put together the first PoC of a data product. But here's the thing: integrating such a complex source as Jira might seem out of the scope of this article, right? Jira's data (domain) model is notoriously complex and adaptable, and its API? Not exactly user-friendly. So, you might wonder, is it even possible to integrate it (in a relatively short time) using my blueprint?

Absolutely! 😉

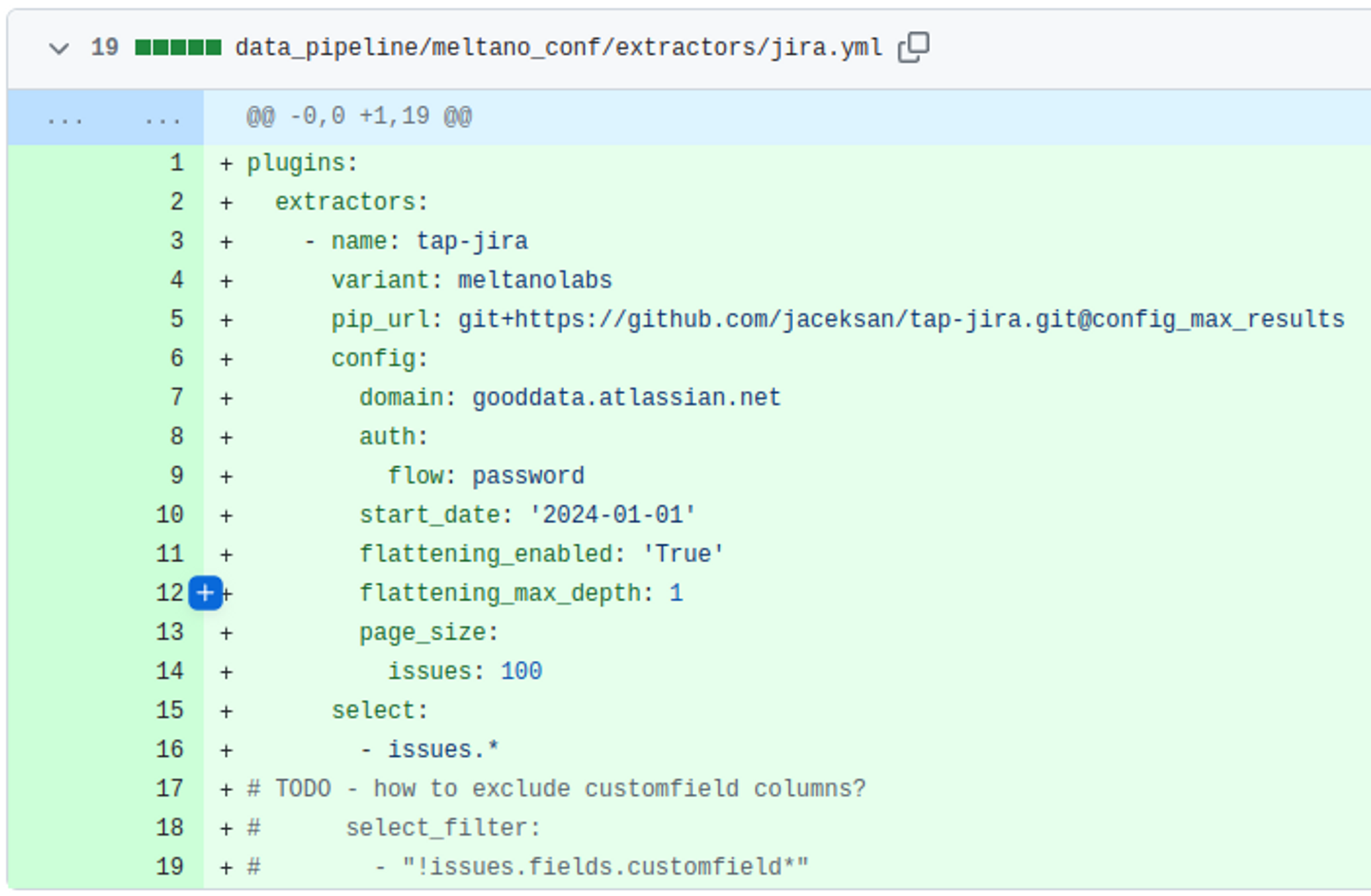

Enter the Meltano plugin tap-jira. I crafted a database-agnostic macro for extracting paths from JSON columns. We've got all the tools needed to build this!

Check out the pull request. It's laid out step by step (commit by commit) for your review. Allow me to guide you through it.

Adding a new source couldn't be easier!

I even implemented a missing feature in the tap-jira extractor: making page_size configurable for the issues stream. For now, pip_url points to my fork, but I created a pull request to the upstream repository and notified the Meltano community, so I'll point it back soon.

Update after two days: my contribution has been successfully merged into the main project! 🙂
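For context on what a page size controls: Jira's REST search endpoint returns issues in pages described by `startAt`, `maxResults`, and `total`, and a client keeps requesting until it has seen `total` issues. The generator below is a minimal sketch of that loop with the HTTP call abstracted into a `fetch_page` callable; it is not tap-jira's code, and the default `page_size` of 50 is an arbitrary choice for illustration.

```python
from typing import Callable, Dict, Iterator, List

# fetch_page(start_at, page_size) -> {"total": int, "issues": [...]}
FetchPage = Callable[[int, int], Dict]


def iter_issues(fetch_page: FetchPage, page_size: int = 50) -> Iterator[Dict]:
    """Yield issues page by page until the reported total is reached."""
    start_at = 0
    while True:
        page = fetch_page(start_at, page_size)
        issues: List[Dict] = page["issues"]
        yield from issues
        start_at += len(issues)
        # Stop on an empty page (defensive) or once everything is fetched
        if not issues or start_at >= page["total"]:
            break


if __name__ == "__main__":
    # In-memory stand-in for the API with 7 fake issues
    fake_issues = [{"key": f"PROJ-{i}"} for i in range(1, 8)]

    def fake_fetch(start_at: int, page_size: int) -> Dict:
        return {
            "startAt": start_at,
            "maxResults": page_size,
            "total": len(fake_issues),
            "issues": fake_issues[start_at:start_at + page_size],
        }

    print(len(list(iter_issues(fake_fetch, page_size=3))))
```

A larger page means fewer round trips, while a smaller one keeps each response light; making the value configurable lets each installation pick the trade-off.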

The next step, adding the related transformation(s), gets complicated. But fear not: SQL is your friend here. It's possible to do it incrementally. I copy-pasted a lot from an existing solution for GitHub. And guess what? Since I work more and more with GitHub Copilot in this repository, I barely had to write any boilerplate code. Copilot was my trusty sidekick, suggesting most of it for me! 😀

The GoodData part? Piece of cake! The Logical Data Model is auto-generated by the gooddata-dbt plugin. I whipped up a new dashboard with six insights (which I believe are meaningful) in our dashboarding UI in just a few minutes. Then, I synced it as code (using gooddata-dbt store_model) to the repository.

And finally, let's talk about extending the CI/CD pipeline. It was a walk in the park, honestly. Here's the pipeline run, which was triggered even before the merge, allowing the code reviewer to click through the result (dashboard).

From start to finish, building the entire solution took me approximately four hours. This included chatting on the Meltano Slack about potential improvements to the developer experience.

Just think about the possibilities: what a team of engineers could create in several days or weeks!

P.S. Oh, and yes, I didn't forget to update the documentation (and fixed a few bugs from last time 😉).

Let's face it: there is no one-size-fits-all blueprint in the data world. This particular blueprint shines in certain scenarios:

- Handling smaller data volumes; think millions of rows in your largest tables.

- Projects where advanced orchestration, monitoring, and alerting aren't essential.

But what if you need to scale up? Need to fully load billions of rows? Or manage thousands of extracts/loads, perhaps per tenant, with varied scheduling? If that's the case, this blueprint, unfortunately, might not be for you.

Also, it's worth noting that end-to-end lineage doesn't get first-class treatment here. Another feature I'm missing is more nuanced cost control, for example by utilizing a serverless architecture.

That's why I plan to develop an alternative blueprint and share it with you all. Some ideas brewing in my mind:

- Enhancing Meltano's performance. While Singer.io, its middle layer, offers the flexibility to switch extractors/loaders, it comes at the cost of performance. Is there a way to redesign Singer.io to overcome this? I'm all in for contributing to such a venture!

- Exploring a more robust alternative to Meltano. I'm currently in talks with folks from Fivetran, but the quest for an open-source equivalent is still ongoing.

- Integrating an advanced orchestration tool, ideally one that champions end-to-end lineage along with monitoring and alerting capabilities. Currently, I have Dagster on my radar.
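To see where Singer's performance cost comes from: in the Singer specification, a tap writes JSON messages to stdout, one per line, of type `SCHEMA`, `RECORD`, or `STATE`, and the target parses them from stdin. Every row is therefore serialized to JSON and parsed back once. A minimal sketch of both sides, with hypothetical function names and made-up stream and field names:

```python
import json
from typing import Dict, Iterable, Iterator, List


def tap_messages(stream: str, schema: Dict, key_properties: List[str],
                 records: Iterable[Dict], state: Dict) -> Iterator[str]:
    """Emit Singer-style messages as JSON lines, the way a tap writes to stdout."""
    yield json.dumps({"type": "SCHEMA", "stream": stream,
                      "schema": schema, "key_properties": key_properties})
    for record in records:
        # One JSON encode per row: this is where the per-record overhead sits
        yield json.dumps({"type": "RECORD", "stream": stream, "record": record})
    # STATE lets the next run resume incrementally
    yield json.dumps({"type": "STATE", "value": state})


def target_records(lines: Iterable[str]) -> Iterator[Dict]:
    """Parse the message stream back, as a target reading stdin would."""
    for line in lines:
        message = json.loads(line)  # ...and one JSON decode per row
        if message["type"] == "RECORD":
            yield message["record"]


if __name__ == "__main__":
    rows = [{"id": i, "summary": f"issue {i}"} for i in range(3)]
    schema = {"type": "object",
              "properties": {"id": {"type": "integer"}, "summary": {"type": "string"}}}
    lines = list(tap_messages("issues", schema, ["id"], rows, {"bookmark": 2}))
    print(len(lines), list(target_records(lines)) == rows)
```

This row-at-a-time JSON round trip is what keeps taps and targets interchangeable, and it is also the part a faster design would need to rethink.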

And there you have it: the first blueprint is now in your hands. I truly hope you find value in it and give it a try in your projects. If you have any questions, please don't hesitate to contact me or my colleagues. I also recommend joining our Slack community!

Stay tuned for more upcoming articles. I encourage you to get involved in the Meltano/dbt/Dagster/… open-source communities. Your contributions can make a significant difference. The impact of open source is far-reaching; just consider how open-source Large Language Models, which are at the heart of today's generative AI, are making waves! Let's be part of this transformative journey together.

The open-source blueprint repository is here. I recommend trying it with our free trial here.

If you are interested in trying GoodData experimental features, please register for our Labs environment here.