Recently I've created a dashboard about Pluto Day, and in this article I want to walk you through its technical details. The PoC was built on top of two of our Analytics as Code tools: GoodData for VS Code and the Python SDK. In this article you'll read about my PoC results and get a glimpse of what lies ahead for GoodData's Analytics as Code.

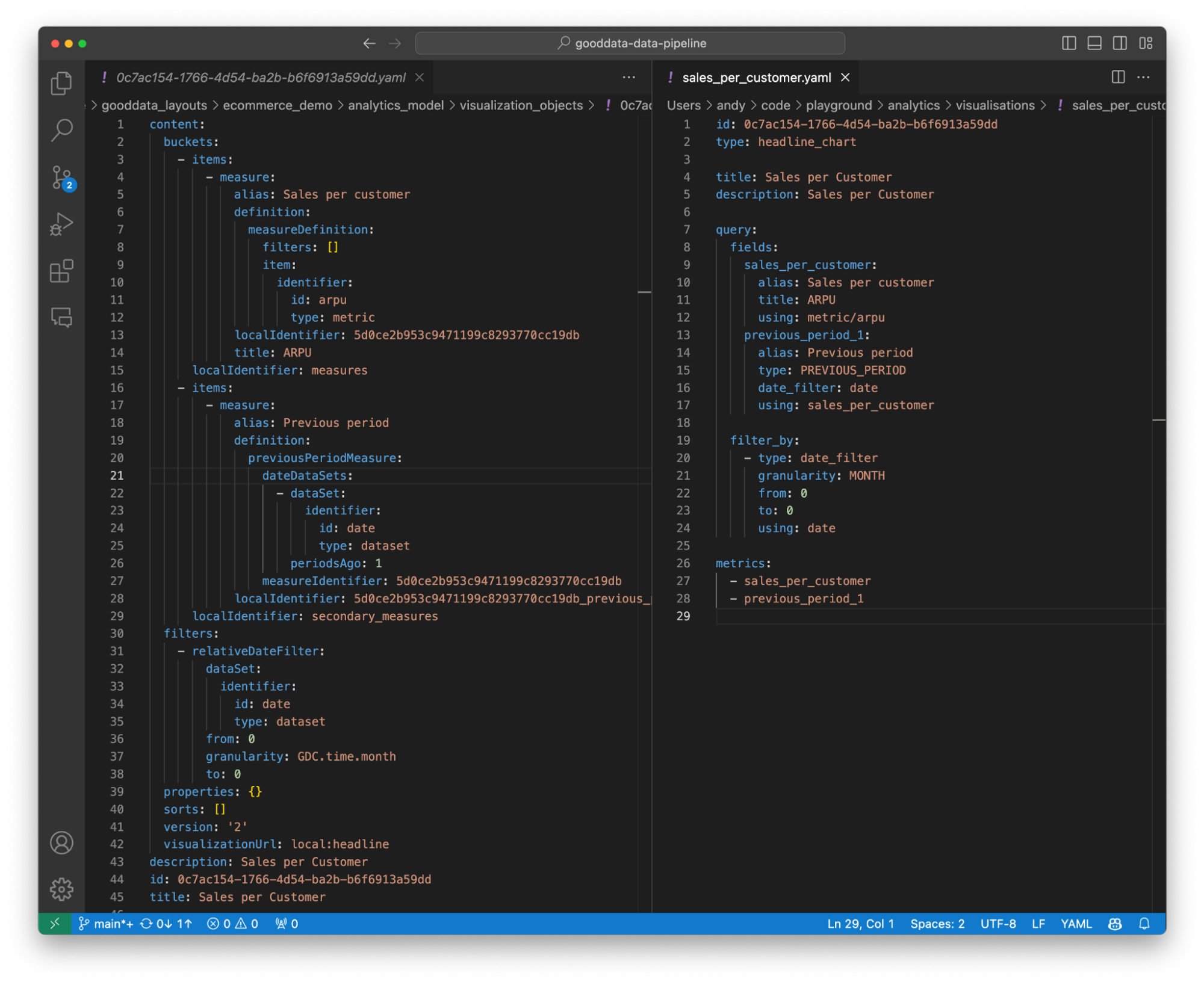

GoodData for VS Code has come a long way since our first beta release last year. You can now define the whole analytical layer of your data product (from the logical data model, to metrics, to visualizations, and even dashboards) all in code, with versioning, autocomplete, real-time static validation, and preview.

GoodData for VS Code is not without its limitations, though. It covers only the analytical layer of a data product, overlooking areas like user management, workspace hierarchy, data source definition, and data filters. That's where the GoodData Python SDK comes in to save the day.

One might wonder: why not use only the Python SDK, since it has the Declarative (a.k.a. Layout) API, after all? That API makes it super easy to move analytics between workspaces, propagate users dynamically, and generally handle your project lifecycle. However, it does nothing for the readability, let alone the writability, of the declarative YAML files.

On top of developer convenience, GoodData for VS Code runs static validation for the whole workspace before each deployment. This ensures that all analytical objects, such as your latest fancy chart, are checked for integrity, preventing issues like referencing a metric your colleague might have removed in another git branch. Broken references are caught before they reach production, so your users can trust the data!

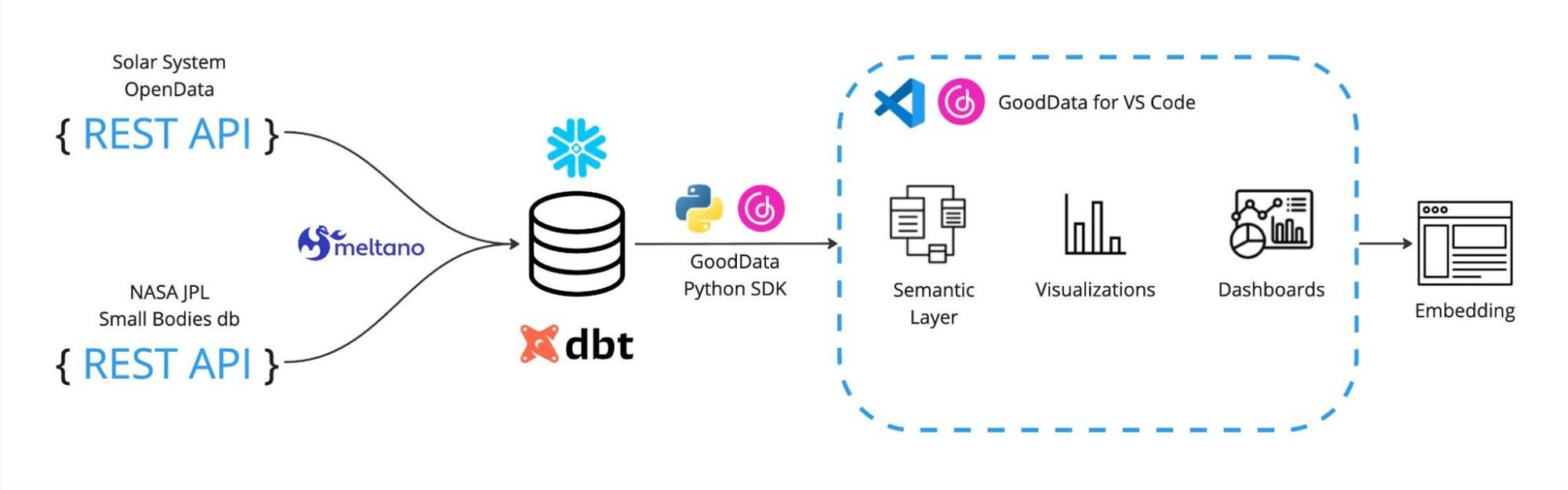

First, let's set the scene. I was looking for some juicy data I could use to test our end-to-end data pipeline with GoodData for VS Code. Then I remembered seeing the NASA JPL database of Solar System bodies. Luckily, Pluto Day was coming up on February 18th, so the topic choice was a no-brainer. You can read an article I've prepared about Pluto and our Solar System.

As a backbone for the project I'm using Meltano. It simplifies fetching data from REST APIs and executing subsequent transformations with dbt. GoodData for VS Code is used to define visualizations and dashboards, while the Python SDK helps with data source provisioning. Finally, the public demo is a simple static HTML page, with the dashboard embedded using GoodData's WebComponents.

Data ingestion

I've used two publicly available REST APIs to fetch the data.

For large celestial bodies (the Sun, planets, dwarf planets and moons), I tapped into The Solar System OpenData website. Its REST API is well-structured, and fetching the data was a matter of configuring `tap-rest-api-msdk` in `meltano.yml` and providing a JSON Schema.

For smaller celestial bodies, I've used the aforementioned NASA JPL database. The setup was more complicated here; the data provided by the API is not Meltano-friendly. I had to write a custom tap to transform the received data into a more digestible format.

// Data from API

{

headers: ["id", "name", "mass", ...],

data: [

["1", "Sun", "1.9891e30", ...],

["2", "Earth", "5.972e24", ...],

...

]

}

// Data format I can load into the database

[

{id: "1", name: "Sun", mass: "1.9891e30", ...},

{id: "2", name: "Earth", mass: "5.972e24", ...},

]
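The reshaping itself is small. Here is a minimal Python sketch of it, assuming the payload carries the `headers` and `data` keys shown above. The function name `rows_to_records` is illustrative and is not taken from the actual custom tap:

```python
from typing import Any


def rows_to_records(payload: dict[str, Any]) -> list[dict[str, Any]]:
    """Turn a column-oriented payload into a list of row dictionaries."""
    headers = payload["headers"]
    # Pair every value in a row with the header at the same position.
    return [dict(zip(headers, row)) for row in payload["data"]]


# Sample payload mirroring the shape shown above (values are illustrative).
sample = {
    "headers": ["id", "name", "mass"],
    "data": [
        ["1", "Sun", "1.9891e30"],
        ["2", "Earth", "5.972e24"],
    ],
}
records = rows_to_records(sample)
```

Each element of `records` is a flat dictionary, which is the record shape a loader can write as one table row.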

I'm also only loading a small subset of NASA's data, roughly 136k records out of 1.3M. The subset meets my needs and includes only the largest asteroids and comets.

Both data sources are stored in the same Snowflake schema, so the whole data ingestion boils down to a single command:

meltano run tap-rest-api-msdk tap-sbdb target-snowflake

Data transformation

Transformation is done with dbt.

The transformation of large bodies consists of:

- Converting the data types, e.g. parsing the date field.

- Converting to the same units, e.g. mass in kg, semi-major orbital axes in astronomical units, etc.

- Filtering the data to include only stars, planets, dwarf planets, and moons.

- Grouping the objects into planetary systems, i.e. grouping together planets and their moons by assigning the same attribute value to the `planetary_system` field.

select

l1.ID,

l1.BODYTYPE as TYPE,

l1.ENGLISHNAME as NAME,

l1.GRAVITY,

l1.DENSITY,

l1.AVGTEMP as AVG_TEMP,

coalesce(

try_to_date(l1.DISCOVERYDATE, 'DD/MM/YYYY'),

try_to_date(l1.DISCOVERYDATE, 'YYYY'),

try_to_date(l1.DISCOVERYDATE, '??/MM/YYYY')

) as DISCOVERY_DATE,

l1.DISCOVEREDBY as DISCOVERED_BY,

l1.MEANRADIUS as MEAN_RADIUS,

l1.SEMIMAJORAXIS * 6.68459e-9 as SEMIMAJOR_ORBIT,

coalesce(l1.MASS_MASSVALUE, 0) * pow(10, coalesce(l1.MASS_MASSEXPONENT, 6) - 6) as MASS,

coalesce(l1.VOL_VOLVALUE, 0) * pow(10, coalesce(l1.VOL_VOLEXPONENT, 0)) as VOLUME,

coalesce(l2.ENGLISHNAME, l1.ENGLISHNAME) as PLANETARY_SYSTEM

FROM ANDY.PLUTO.BODIES AS l1

LEFT JOIN ANDY.PLUTO.BODIES AS l2 ON l1.AROUNDPLANET_PLANET = l2.id

WHERE

l1.BODYTYPE IN ('Star', 'Planet', 'Dwarf Planet', 'Moon')
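The `coalesce` over several `try_to_date` calls is a "first format that parses wins" fallback. The same idea can be sketched in Python as below. The function name is hypothetical, and defaulting an unknown day to the first of the month is an assumption about the intent, not a statement about how Snowflake treats the `??` placeholder:

```python
from datetime import date, datetime
from typing import Optional


def parse_discovery_date(raw: Optional[str]) -> Optional[date]:
    """Try each known format in order; return None when nothing matches."""
    if not raw:
        return None
    text = raw.strip()
    # Unknown day, e.g. "??/03/1930": drop the placeholder and parse month/year.
    if text.startswith("??/"):
        candidates = [(text[3:], "%m/%Y")]
    else:
        candidates = [(text, "%d/%m/%Y"), (text, "%Y")]
    for value, fmt in candidates:
        try:
            return datetime.strptime(value, fmt).date()
        except ValueError:
            continue
    return None
```

Values that match none of the formats come back as `None`, mirroring how `try_to_date` yields `NULL` instead of failing the whole query.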

For small bodies the transformation is similar, but I also grouped the objects by their distance from the Sun, making them easier to plot on a chart.

select

ID,

trim(coalesce(NAME, FULL_NAME)) as NAME,

iff(NEO = 'Y', true, false) as NEO,

iff(PHA = 'Y', true, false) as PHA,

try_to_decimal(GM, 10, 5) / SQUARE(DIAMETER / 2) * 1000 as G,

try_to_decimal(DIAMETER, 10, 5) / 2 as MEAN_RADIUS,

try_to_decimal(A, 10, 5) as SEMIMAJOR_ORBIT,

iff(SUBSTR(KIND, 1, 1) = 'a', 'Asteroid', 'Comet') as TYPE,

CASE

WHEN SEMIMAJOR_ORBIT < 2.0 THEN '0.0-2.0'

WHEN SEMIMAJOR_ORBIT < 2.1 THEN '2.0-2.1'

WHEN SEMIMAJOR_ORBIT < 2.2 THEN '2.1-2.2'

WHEN SEMIMAJOR_ORBIT < 2.3 THEN '2.2-2.3'

WHEN SEMIMAJOR_ORBIT < 2.4 THEN '2.3-2.4'

WHEN SEMIMAJOR_ORBIT < 2.5 THEN '2.4-2.5'

WHEN SEMIMAJOR_ORBIT < 2.6 THEN '2.5-2.6'

WHEN SEMIMAJOR_ORBIT < 2.7 THEN '2.6-2.7'

WHEN SEMIMAJOR_ORBIT < 2.8 THEN '2.7-2.8'

WHEN SEMIMAJOR_ORBIT < 2.9 THEN '2.8-2.9'

WHEN SEMIMAJOR_ORBIT < 3.0 THEN '2.9-3.0'

WHEN SEMIMAJOR_ORBIT < 3.1 THEN '3.0-3.1'

WHEN SEMIMAJOR_ORBIT < 3.2 THEN '3.1-3.2'

WHEN SEMIMAJOR_ORBIT < 3.3 THEN '3.2-3.3'

WHEN SEMIMAJOR_ORBIT < 3.4 THEN '3.3-3.4'

WHEN SEMIMAJOR_ORBIT < 3.5 THEN '3.4-3.5'

ELSE '3.5+'

END AS DISTANCE_SPREAD

from ANDY.PLUTO.SBDB
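The long `CASE` expression assigns each body to a 0.1 AU wide bucket between 2.0 and 3.5 AU, with one catch-all bucket on either side. As an illustration only (the SQL above is what actually runs in the pipeline), the same bucketing can be written as a short Python function. The name `distance_spread` simply mirrors the column alias:

```python
def distance_spread(semimajor_orbit: float) -> str:
    """Return the bucket label for a semi-major axis given in astronomical units."""
    if semimajor_orbit < 2.0:
        return "0.0-2.0"
    # Walk the 0.1 AU wide buckets from 2.0 up to 3.5. Working in integer
    # tenths avoids accumulating floating-point error in the bucket edges.
    for tenths in range(20, 35):
        upper = (tenths + 1) / 10
        if semimajor_orbit < upper:
            return f"{tenths / 10:.1f}-{upper:.1f}"
    return "3.5+"
```

A value that sits exactly on an edge falls into the higher bucket, the same as with the strict `<` comparisons in the SQL.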

Some of these transformations could easily be achieved with MAQL, GoodData's metric definition language. But since we're going to materialize the transformed data into a separate table in the database anyway, we may as well do it with dbt.

Once again, running the transformation takes a single command:

meltano --environment dev run dbt-snowflake:run

Analytical layer

For starters, the GoodData server needs to know how to connect to the database. This setup is straightforward with the Python SDK: simply read Meltano's connection config and create a corresponding data source on the server. This task must be executed every time there's an update in the database setup.

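# Imports (GDConfig and get_variable are assumed to be project-local helpers, not shown here)

from argparse import ArgumentParser

from pathlib import Path

import yaml

from gooddata_sdk import CatalogDataSourceSnowflake, GoodDataSdk

# Parse args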

parser = ArgumentParser()

parser.add_argument('-p', '--profile', help='Profile name', default="dev")

args = parser.parse_args()

# Load GD config and instantiate SDK

config = GDConfig.load(Path('gooddata.yaml'), args.profile)

sdk = GoodDataSdk.create(config.host, config.token)

# Load data source details

snowflake_password = get_variable('.env', 'TARGET_SNOWFLAKE_PASSWORD')

with open('meltano.yml') as f:

    meltano_config = yaml.safe_load(f.read())

snowflake_config = next(x for x in meltano_config['plugins']['loaders'] if x['name'] == 'target-snowflake')['config']

# Deploy the datasource

data_source = CatalogDataSourceSnowflake(

# Fill in properties from snowflake_config and config

)

sdk.catalog_data_source.create_or_update_data_source(data_source)
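One detail worth noting in the script above: a bare `next(...)` over a generator raises `StopIteration` when no plugin matches, which is not very informative. A small helper can make that failure explicit. This is a sketch only; `find_plugin_config` is a hypothetical name, and the example dictionary stands in for the parsed `meltano.yml` with made-up values:

```python
from typing import Any


def find_plugin_config(meltano_config: dict[str, Any], kind: str, name: str) -> dict[str, Any]:
    """Return the `config` mapping of the named plugin, or raise a clear error."""
    for plugin in meltano_config.get("plugins", {}).get(kind, []):
        if plugin.get("name") == name:
            return plugin.get("config", {})
    raise KeyError(f"No {kind} plugin named {name!r} in the Meltano config")


# Stand-in for the parsed meltano.yml; the values are made up for illustration.
example_config = {
    "plugins": {
        "loaders": [
            {"name": "target-snowflake", "config": {"account": "example", "database": "DEMO"}},
        ]
    }
}
snowflake_config = find_plugin_config(example_config, "loaders", "target-snowflake")
```

The returned mapping can then feed the data source definition in the same way as `snowflake_config` does in the script.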

Next, we need to notify the GoodData server when there is new data, so it can clear the cache and load new data for users. This can also be done with the Python SDK.

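# Imports (GDConfig is assumed to be a project-local helper, not shown here)

from argparse import ArgumentParser

from pathlib import Path

from gooddata_sdk import GoodDataSdk

# Parse args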

parser = ArgumentParser()

parser.add_argument('-p', '--profile', help='Profile name', default="dev")

args = parser.parse_args()

# Load GD config and instantiate SDK

config = GDConfig.load(Path('gooddata.yaml'), args.profile)

sdk = GoodDataSdk.create(config.host, config.token)

# Clear the cache

sdk.catalog_data_source.register_upload_notification(config.data_source_id)

Finally, we need to define the analytical layer: logical data model, metrics, insights and dashboards, all done with GoodData for VS Code. For example, here is what a simple donut chart might look like in our syntax:

id: neo

type: donut_chart

title: NEO (Near Earth Objects)

query:

  fields:

    small_body_count:

      alias: Small Body Count

      title: Count of Small Body

      aggregation: COUNT

      using: attribute/small_body

    neo: label/small_body_neo

metrics:

  - field: small_body_count

    format: "#,##0"

view_by:

  - neo

In the real world, you might want to leverage the Python SDK for all the heavy lifting. Imagine you want to distribute analytics to multiple customers with data filters applied per tenant. Then you'd start with GoodData for VS Code as a template project to develop the core of your analytics, and personalize it per customer with the Python SDK as part of your CI/CD pipeline.

We've recently released a new Python package, gooddata-dbt, and it can do even more for you. For example, it automatically generates logical data models based on dbt models. We'll definitely look into interoperability between gooddata-dbt and GoodData for VS Code in the future, but for now feel free to check out the ultimate data pipeline blueprint from my colleague Jan Soubusta.

To deploy the analytics you'll need to run a few commands:

# To create or update the data source

python ./scripts/deploy.py --profile dev

# To clear DB cache

python ./scripts/clear_cache.py --profile dev

# To deploy analytics

gd deploy --profile dev

Public demo

The demo is available publicly, for everyone to play with. In general, GoodData doesn't support public dashboards without any authentication. As a workaround, I'm using a proxy that opens a subset of our REST API to the public and also does some caching and rate limiting along the way to avoid potential abuse.

I'm using GitHub Pages to host the web page. It's by far the easiest way to host any static content on the web: just put what you need in the `/docs` folder of your repo and enable the hosting in the repo settings.

To embed my dashboards into a static HTML page I'm using GoodData's WebComponents.

<!-- Load the script with components -->

<script type="module" src="https://public-examples.gooddata.com/components/pluto.js?auth=sso"></script>

<!-- Embed the dashboard -->

<gd-dashboard dashboard="pluto" readonly></gd-dashboard>

Just two lines of code. Pretty neat, right?

The pipeline

With my code on GitHub, it's only natural to use GitHub Actions for CI/CD and data pipelines.

It's generally not a great idea to combine the data pipeline and the CI/CD deployment into a single pipeline, because the data pipeline can be long and resource-intensive. Ideally, you want to run it in response to changes in the ETL (Extract, Transform, Load) configuration or to new data in the data source.

The CI/CD pipeline should run every time there are changes in the analytics-related files. In the real world, it's certainly possible to have a smart pipeline that runs different tasks depending on which folder was updated, but for the sake of simplicity I've configured a set of tasks with manual triggers.
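To make the "smart pipeline" idea concrete, here is a rough Python sketch of selecting tasks from a list of changed files. The folder names, task names, and task order are all hypothetical; they do not describe the actual repository layout or the GitHub Actions setup used for the demo:

```python
from pathlib import PurePosixPath

# Hypothetical execution order of the pipeline tasks.
TASK_ORDER = ["ingest", "transform", "deploy_data_source", "clear_cache", "deploy_analytics"]

# Hypothetical mapping from top-level folders to the tasks they should trigger.
TASKS_BY_FOLDER = {
    "extract": {"ingest", "transform", "clear_cache"},
    "transform": {"transform", "clear_cache"},
    "scripts": {"deploy_data_source"},
    "analytics": {"deploy_analytics"},
}


def tasks_for_changes(changed_files: list[str]) -> list[str]:
    """Return the tasks triggered by the changed files, in execution order."""
    selected: set[str] = set()
    for path in changed_files:
        parts = PurePosixPath(path).parts
        # Files in the repository root (a single path part) trigger nothing.
        if len(parts) > 1:
            selected |= TASKS_BY_FOLDER.get(parts[0], set())
    return [task for task in TASK_ORDER if task in selected]
```

In a CI job, the list of changed files could come from something like `git diff --name-only`, and the returned task names would decide which steps to run.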

While it's already possible to build a solid pipeline for an Analytics as Code project using GoodData tools, we can still do a lot more to simplify the data pipeline setup and maintenance.

Here are a few improvements we could potentially make:

- Use the `python-dbt` library to automatically update logical data models every time dbt models are updated.

- Build a Meltano plugin, making it easier to invoke GoodData deployment as a part of your data pipeline.

- Build an integration between the Python SDK and GoodData for VS Code, enabling the Python SDK to load and manipulate the analytical project directly from YAML files.

What do you think would be the best next step?

As always, I'm eager to hear what you think about the direction we're taking with Analytics as Code. Feel free to reach out to me on the GoodData community Slack channel.

Want to try it out for yourself? Here are a few useful links to get you started: